Table of Contents

- 1 Introduction

- 2 Why Terraform for Infrastructure as Code?

- 3 Getting Started with Terraform

- 4 Advanced Terraform Basics: Modules, State, and Provisioners

- 5 FAQs About Terraform and Infrastructure as Code

- 5.1 What is Infrastructure as Code (IaC)?

- 5.2 What are the benefits of using Terraform for IaC?

- 5.3 Can Terraform work with multiple cloud providers?

- 5.4 Is Terraform only used for cloud infrastructure?

- 5.5 How does Terraform handle infrastructure drift?

- 5.6 Can I use Terraform for serverless applications?

- 6 External Links for Further Learning

- 7 Conclusion

Introduction

Managing cloud infrastructure efficiently and consistently is a challenge that many companies face. Terraform, an infrastructure tool from HashiCorp, addresses it by letting you define, provision, and manage infrastructure with code. This approach, known as Infrastructure as Code (IaC), offers significant advantages, including version control, reusable templates, and consistent configurations. This article walks you through Terraform basics for Infrastructure as Code, highlighting key commands, examples, and best practices to get you started.

Why Terraform for Infrastructure as Code?

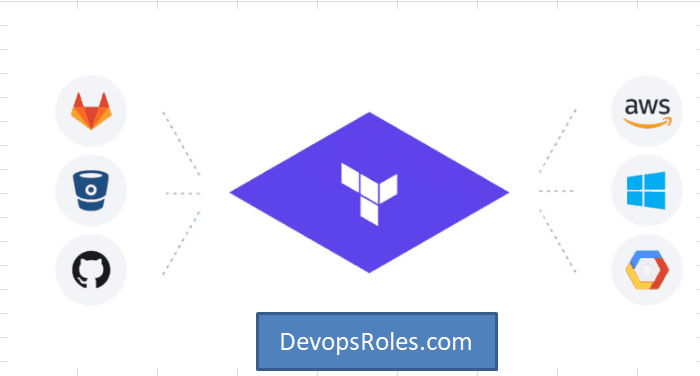

Terraform enables DevOps engineers, system administrators, and developers to write declarative configuration files to manage and deploy infrastructure across multiple cloud providers. Whether you’re working with AWS, Azure, Google Cloud, or a hybrid environment, Terraform’s simplicity and flexibility make it a top choice. Below, we’ll explore how to set up and use Terraform, starting from the basics and moving to more advanced concepts.

Getting Started with Terraform

Prerequisites

Before diving into Terraform, ensure you have:

- A basic understanding of cloud services.

- Terraform installed on your machine. You can download it from the official Terraform website.

Setting Up a Terraform Project

Create a Directory: Start by creating a directory for your Terraform project.

mkdir terraform_project
cd terraform_project

Create a Configuration File: Terraform uses configuration files written in HashiCorp Configuration Language (HCL), usually saved with a .tf extension.

provider "aws" {
  region = "us-west-2"
}

resource "aws_instance" "example" {
  # AMI IDs are region-specific; replace this with an AMI that exists in your region.
  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro"
}

Initialize Terraform: Run terraform init to initialize your project. This command installs the required provider plugins.

terraform init
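As a minimal sketch of what terraform init resolves, a terraform block can state which providers the project needs. The version constraints below are illustrative assumptions, not values from this article; adjust them to the releases you actually use.

```hcl
terraform {
  # Assumed minimum CLI version, for illustration only.
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws" # registry address of the AWS provider
      version = "~> 5.0"        # assumed constraint; pick the one you test against
    }
  }
}
```

With a block like this in place, terraform init downloads a provider release that satisfies the constraint and records the selection in a .terraform.lock.hcl file.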

Writing Terraform Configuration Files

A Terraform configuration file typically has the following elements:

- Provider Block: Defines the cloud provider (AWS, Azure, Google Cloud, etc.).

- Resource Block: Specifies the infrastructure resource (e.g., an EC2 instance in AWS).

- Variable Block: Declares an input value, optionally with a default, so the same configuration can be reused with different settings.

Here’s an example configuration file for launching an AWS EC2 instance:

provider "aws" {
  region = var.region
}

variable "region" {
  default = "us-east-1"
}

resource "aws_instance" "web" {
  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro"

  tags = {
    Name = "ExampleInstance"
  }
}
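A variable block can also carry a type, a description, and a validation rule. The sketch below is one possible way to tighten the region variable from the example above; the list of allowed regions is an assumption chosen for illustration.

```hcl
variable "region" {
  type        = string
  description = "AWS region to deploy into"
  default     = "us-east-1"

  validation {
    # Assumed allow-list; extend it with the regions you actually use.
    condition     = contains(["us-east-1", "us-west-2"], var.region)
    error_message = "The region must be us-east-1 or us-west-2."
  }
}
```

The default can be overridden at run time, for example with terraform apply -var="region=us-west-2".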

Executing Terraform Commands

- Initialize the project:

terraform init

- Plan the changes:

terraform plan

- Apply the configuration:

terraform apply

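terraform plan shows the changes Terraform would make without applying them. terraform apply shows the same plan and asks for confirmation before changing anything, so you can review every change before committing to it.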
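To make the result of an apply easier to read, output blocks can surface selected attributes once the run finishes. This sketch assumes the aws_instance.web resource from the example configuration above; the output names are hypothetical.

```hcl
# Printed at the end of terraform apply and available later via terraform output.
output "instance_id" {
  description = "ID of the example EC2 instance"
  value       = aws_instance.web.id
}

output "instance_public_ip" {
  description = "Public IP address of the example EC2 instance, if one is assigned"
  value       = aws_instance.web.public_ip
}
```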

Advanced Terraform Basics: Modules, State, and Provisioners

Terraform Modules

Modules are reusable pieces of Terraform code that help you organize and manage complex infrastructure. By creating a module, you can apply the same configuration across different environments or projects with minimal modifications.

Example: Creating and Using a Module

Create a Module Directory:

mkdir -p modules/aws_instance

Define the Module Configuration: Inside modules/aws_instance/main.tf:

resource "aws_instance" "my_instance" {
  ami           = var.ami
  instance_type = var.instance_type
}

variable "ami" {}

variable "instance_type" {}

Use the Module in Main Configuration:

module "web_server" {
  source = "./modules/aws_instance"

  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro"
}

Modules promote code reuse and consistency across projects.
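A module usually also exposes outputs so the calling configuration can read values from the resources it creates. The following is a sketch under the assumption that the module and the module "web_server" call look like the example above; the file name outputs.tf and the output names are hypothetical.

```hcl
# modules/aws_instance/outputs.tf (hypothetical file inside the module)
output "instance_id" {
  description = "ID of the instance created by this module"
  value       = aws_instance.my_instance.id
}

# Root configuration: read the module's output through module.<NAME>.<OUTPUT>.
output "web_server_id" {
  value = module.web_server.instance_id
}
```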

Terraform State Management

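Terraform keeps track of your infrastructure’s current state in a state file, which maps the resources in your configuration to real-world objects. Managing state carefully is crucial for accurate deployments. The terraform state subcommands let you inspect and adjust it: terraform state list shows tracked resources, terraform state show displays the attributes of one resource, terraform state mv changes a resource address, and terraform state rm stops tracking a resource without destroying it.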

Best Practices for State Management:

- Store State Remotely: Use remote backends like S3 or Azure Blob Storage for enhanced collaboration and safety.

- Use State Locking: This prevents conflicting updates by locking the state during updates.
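The two practices above can be combined in a backend block. This is a minimal sketch for an S3 backend; the bucket, key, and table names are hypothetical, and the bucket and DynamoDB table are assumed to exist already.

```hcl
terraform {
  backend "s3" {
    bucket  = "example-terraform-state"             # hypothetical, must already exist
    key     = "terraform_project/terraform.tfstate" # path of the state object in the bucket
    region  = "us-east-1"
    encrypt = true                                  # encrypt the state object at rest

    # Hypothetical DynamoDB table used for state locking.
    dynamodb_table = "example-terraform-locks"
  }
}
```

After adding or changing a backend, run terraform init again so Terraform can configure it. Locking options for the S3 backend have changed across Terraform releases, so check the backend documentation for the version you run.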

Using Provisioners for Post-Deployment Configuration

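Provisioners in Terraform allow you to perform additional setup after resource creation, such as installing software or configuring services. The Terraform documentation describes provisioners as a last resort, so prefer provider-native options where they exist.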

Example: Provisioning an EC2 Instance:

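resource "aws_instance" "web" {
  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro"
  key_name      = "example-key" # assumption: an existing EC2 key pair

  # remote-exec needs a connection block to reach the instance over SSH.
  # The user and key path are placeholders that assume an Ubuntu AMI.
  connection {
    type        = "ssh"
    host        = self.public_ip
    user        = "ubuntu"
    private_key = file(pathexpand("~/.ssh/example-key.pem"))
  }

  provisioner "remote-exec" {
    inline = [
      "sudo apt-get update -y",
      "sudo apt-get install -y nginx"
    ]
  }
}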
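For simple bootstrap steps like these, an alternative technique (not a provisioner) is to pass a startup script through the instance's user_data argument, which needs no SSH connection from Terraform. The sketch below assumes an Ubuntu-based AMI that runs user data at first boot; the resource name is hypothetical.

```hcl
resource "aws_instance" "web_user_data" {
  ami           = "ami-0c55b159cbfafe1f0" # replace with an AMI valid in your region
  instance_type = "t2.micro"

  # Runs as root on first boot, so sudo is not needed.
  user_data = <<-EOF
    #!/bin/bash
    apt-get update -y
    apt-get install -y nginx
  EOF
}
```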

FAQs About Terraform and Infrastructure as Code

What is Infrastructure as Code (IaC)?

Infrastructure as Code (IaC) allows you to manage and provision infrastructure through code, providing a consistent environment and reducing manual efforts.

What are the benefits of using Terraform for IaC?

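Terraform offers multi-cloud support, reusable configurations through modules, and plain-text configuration files that can be kept in version control. It has no built-in rollback command; to roll back, revert the configuration to an earlier version and apply it again.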

Can Terraform work with multiple cloud providers?

Yes, Terraform supports a range of cloud providers like AWS, Azure, and Google Cloud, making it highly versatile for various infrastructures.
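As a rough sketch, a single configuration can declare more than one provider and manage resources in each. The project ID and bucket names below are hypothetical placeholders, and both bucket names would need to be globally unique in practice.

```hcl
terraform {
  required_providers {
    aws    = { source = "hashicorp/aws" }
    google = { source = "hashicorp/google" }
  }
}

provider "aws" {
  region = "us-east-1"
}

provider "google" {
  project = "my-example-project" # hypothetical project ID
  region  = "us-central1"
}

# One storage bucket in each cloud, managed from the same configuration.
resource "aws_s3_bucket" "assets" {
  bucket = "example-assets-bucket-aws"
}

resource "google_storage_bucket" "assets" {
  name     = "example-assets-bucket-gcp"
  location = "US"
}
```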

Is Terraform only used for cloud infrastructure?

No, Terraform can also provision on-premises infrastructure through providers like VMware and custom providers.

How does Terraform handle infrastructure drift?

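When you run terraform plan or terraform apply, Terraform refreshes its view of the real infrastructure and compares it with the state file and your configuration. The plan reports any drift it finds, and applying the plan changes the resources to match the configuration again. To update the state file without changing any resources, run terraform plan -refresh-only or terraform apply -refresh-only.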
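When some drift is expected, for example tags added by another tool, a lifecycle block can tell Terraform to leave specific attributes alone. This is a sketch that reuses the instance values from earlier in the article; ignoring tags is an assumption made for illustration.

```hcl
resource "aws_instance" "web" {
  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro"

  tags = {
    Name = "ExampleInstance"
  }

  lifecycle {
    # Changes made to tags outside Terraform are not reverted on the next apply.
    ignore_changes = [tags]
  }
}
```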

Can I use Terraform for serverless applications?

Yes, you can use Terraform to manage serverless infrastructure, including Lambda functions on AWS, using specific resource definitions.
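A minimal sketch of a Lambda function managed by Terraform might look like the following. The function name, role name, handler, runtime, and the lambda.zip archive are all hypothetical, and the archive is assumed to exist next to the configuration.

```hcl
# Execution role that the Lambda service is allowed to assume.
resource "aws_iam_role" "lambda_exec" {
  name = "example-lambda-exec"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action    = "sts:AssumeRole"
      Effect    = "Allow"
      Principal = { Service = "lambda.amazonaws.com" }
    }]
  })
}

resource "aws_lambda_function" "hello" {
  function_name = "example-hello"
  role          = aws_iam_role.lambda_exec.arn
  runtime       = "python3.12"
  handler       = "handler.lambda_handler" # file handler.py, function lambda_handler

  filename         = "lambda.zip"                     # prebuilt deployment package
  source_code_hash = filebase64sha256("lambda.zip")   # redeploy when the package changes
}
```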

External Links for Further Learning

Conclusion

Mastering Terraform basics for Infrastructure as Code can elevate your cloud management capabilities by making your infrastructure more scalable, reliable, and reproducible. From creating configuration files to managing complex modules and state files, Terraform provides the tools you need for efficient infrastructure management. Embrace these basics, and you’ll be well on your way to harnessing the full potential of Infrastructure as Code with Terraform. Thank you for reading the DevopsRoles page!