Introduction

In DevOps and infrastructure automation, tools like Ansible and Terraform simplify provisioning, configuring, and managing infrastructure. Although both automate IT tasks, they are designed for different purposes and excel in different areas. Understanding the key differences between Ansible and Terraform can help you choose the right tool for your infrastructure management needs.

This article explores the main distinctions between Ansible and Terraform, walks through their use cases, and provides practical examples to guide your decision.

Ansible vs Terraform: What They Are

What is Ansible?

Ansible is an open-source IT automation tool used primarily for configuration management, application deployment, and task automation. Originally created by Michael DeHaan and now sponsored by Red Hat, which acquired the project in 2015, Ansible uses playbooks written in YAML to automate tasks across many systems. It is agentless, meaning no agent has to be installed on the target machines, which makes it simple to deploy.

Some of the key features of Ansible include:

- Automation of tasks: installing packages, configuring software, or keeping servers up to date.

- Ease of use: YAML syntax is simple and human-readable.

- Agentless architecture: Ansible uses SSH or WinRM for communication, eliminating the need for additional agents on the target machines.

What is Terraform?

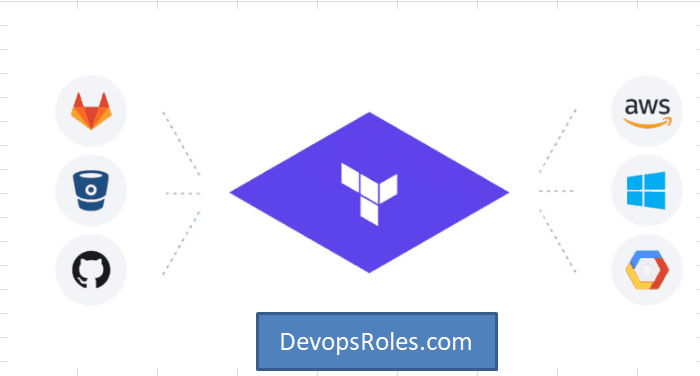

Terraform, developed by HashiCorp, is a powerful Infrastructure as Code (IaC) tool used for provisioning and managing cloud infrastructure. Unlike Ansible, which focuses on configuration management, Terraform is specifically designed to manage infrastructure resources such as virtual machines, storage, and networking components in a declarative manner.

Key features of Terraform include:

- Declarative configuration: Users describe the desired state of the infrastructure in configuration files, and Terraform works out and applies the changes needed to make the infrastructure match that state.

- Cross-cloud compatibility: Terraform supports multiple cloud providers like AWS, Azure, Google Cloud, and others.

- State management: Terraform maintains a state file that tracks the current state of your infrastructure.

Ansible vs Terraform: Key Differences

1. Configuration Management vs Infrastructure Provisioning

The core distinction between Ansible and Terraform lies in their primary function:

- Ansible is mainly focused on configuration management. It allows you to automate the setup and configuration of software and services on machines once they are provisioned.

- Terraform, on the other hand, is an Infrastructure as Code (IaC) tool, focused on provisioning infrastructure. It allows you to create, modify, and version control cloud resources like servers, storage, networks, and more.

In simple terms, Terraform manages the “infrastructure”, while Ansible handles the “configuration” of that infrastructure.

2. Approach: Declarative vs Imperative

Another significant difference lies in the way both tools approach automation:

Terraform uses a declarative approach, where you define the desired end state of your infrastructure. Terraform will figure out the steps required to reach that state and will apply those changes automatically.

Example (Terraform):

    resource "aws_instance" "example" {
      ami           = "ami-12345678"
      instance_type = "t2.micro"
    }

Here, you’re declaring that you want an AWS instance with a specific AMI and instance type. Terraform handles the details of how to achieve that state.
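
To make "Terraform handles the details" a little more concrete, here is a toy Python sketch of the idea behind a declarative plan: compare a desired description with what currently exists and derive the actions. It is not Terraform's actual algorithm or data model; the function name `plan`, the resource names, and the dictionary layout are assumptions made purely for illustration.

```python
def plan(desired, current):
    """Return the actions needed to turn `current` into `desired`.

    Both arguments map a resource name to its attributes (a hypothetical
    layout chosen for this sketch, not Terraform's real format).
    """
    actions = []
    for name in sorted(desired.keys() | current.keys()):
        if name not in current:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
        # Identical entries need no action.
    return actions


desired = {
    "web": {"ami": "ami-12345678", "instance_type": "t2.micro"},
    "db": {"ami": "ami-12345678", "instance_type": "t2.micro"},
}
current = {
    "web": {"ami": "ami-12345678", "instance_type": "t2.nano"},
    "old": {"ami": "ami-12345678", "instance_type": "t2.micro"},
}

for action, name in plan(desired, current):
    print(action, name)
```

The caller only states the end result; the ordering and choice of create, update, and delete steps fall out of the comparison.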

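Ansible, on the other hand, is usually described as procedural: a playbook lists tasks, and Ansible executes them in the order written. Most individual modules are still idempotent, so a task states a desired result (such as a package being present) and changes nothing if that result already holds.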

Example (Ansible):

    - name: Install Apache web server
      apt:
        name: apache2
        state: present
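
The Python sketch below is a toy model of how a list of such tasks behaves across repeated runs, with a set standing in for the packages on a host. The names `ensure_present` and `run_tasks` are hypothetical, and this is not how Ansible is implemented internally.

```python
def ensure_present(installed, package):
    """Install `package` only if missing; report whether anything changed."""
    if package in installed:
        return False  # already in the desired state, nothing to do
    installed.add(package)
    return True


def run_tasks(installed, packages):
    """Run the tasks in the order given and collect a status per task."""
    results = []
    for package in packages:
        changed = ensure_present(installed, package)
        results.append((package, "changed" if changed else "ok"))
    return results


host_packages = {"openssh-server"}  # stand-in for what is on the target host
tasks = ["apache2", "openssh-server"]

print(run_tasks(host_packages, tasks))  # first run installs apache2
print(run_tasks(host_packages, tasks))  # second run finds nothing to change
```

The tasks always execute in the listed order, but each one inspects the host before acting, so a repeated run is safe.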

3. State Management

State management is a crucial aspect of IaC, and it differs greatly between Ansible and Terraform:

- Terraform keeps track of the state of your infrastructure using a state file. This file contains information about your resources and their configurations, allowing Terraform to manage and update your infrastructure in an accurate and efficient way.

- Ansible does not use a state file. It runs tasks on the target systems and doesn’t retain any state between runs. Each module checks the target system at the time it runs, so Ansible keeps no persistent record of what it created or changed earlier.
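
As a rough illustration of why a persisted record matters, the Python sketch below writes a tiny JSON "state file" and uses it on a later run to notice that a resource was dropped from the configuration. The file layout, the made-up instance IDs, and the names `save_state`, `load_state`, and `orphaned` are assumptions for this example; Terraform's real state format is considerably richer.

```python
import json
import os
import tempfile


def save_state(path, resources):
    """Persist the resources this tool created, keyed by name."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump({"resources": resources}, handle, indent=2)


def load_state(path):
    """Load the recorded resources, or an empty record on the first run."""
    if not os.path.exists(path):
        return {}
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)["resources"]


def orphaned(state, configured_names):
    """Names recorded in state that the configuration no longer mentions."""
    return sorted(set(state) - set(configured_names))


with tempfile.TemporaryDirectory() as workdir:
    state_path = os.path.join(workdir, "example.state.json")

    # Run 1: two resources are created and recorded (IDs are made up).
    save_state(state_path, {"web": {"id": "i-0001"}, "db": {"id": "i-0002"}})

    # Run 2: the configuration now only lists "web".
    previous = load_state(state_path)
    print(orphaned(previous, ["web"]))
```

A tool without such a record has no way of knowing that "db" ever existed unless a task explicitly looks for it.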

4. Ecosystem and Integrations

Both tools offer robust ecosystems and integrations but in different ways:

- Ansible has a wide range of modules that allow it to interact with various cloud providers, servers, and other systems. It excels at configuration management, orchestration, and even application deployment.

- Terraform specializes in infrastructure provisioning and integrates with multiple cloud providers through plugins (known as providers). Its ecosystem is tightly focused on managing resources across cloud platforms.

Use Cases of Ansible and Terraform

When to Use Ansible

Ansible is ideal when you need to:

- Automate server configuration and software deployment.

- Manage post-provisioning tasks such as setting up applications or configuring services on VMs.

- Automate system-level tasks like patching, security updates, and network configurations.

When to Use Terraform

Terraform is best suited for:

- Managing cloud infrastructure resources (e.g., creating VMs, networks, load balancers).

- Handling infrastructure versioning, scaling, and resource management across different cloud platforms.

- Managing complex infrastructures and dependencies in a repeatable, predictable manner.

Example Scenarios: Ansible vs Terraform

Scenario 1: Provisioning Infrastructure

If you want to create a new virtual machine in AWS, Terraform is the best tool to use since it’s designed specifically for infrastructure provisioning.

Terraform Example:

    resource "aws_instance" "web" {
      ami           = "ami-abc12345"
      instance_type = "t2.micro"
    }

Once the infrastructure is provisioned, you would use Ansible to configure the machine (install web servers, deploy applications, etc.).

Scenario 2: Configuring Servers

Once your infrastructure is provisioned using Terraform, Ansible can be used to configure and manage the software installed on your servers.

Ansible Example:

    - name: Install Apache web server
      apt:
        name: apache2
        state: present
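
One common way to connect the two scenarios is to pass the addresses of the machines Terraform created into an Ansible inventory. The Python sketch below converts text shaped like the output of `terraform output -json` into a simple INI inventory. It assumes a hypothetical Terraform output named `web_ips` that holds a list of IP addresses, and a hypothetical inventory group called `web`; neither is defined in the examples above.

```python
import json


def inventory_from_outputs(outputs_json, output_name="web_ips", group="web"):
    """Build INI inventory text from `terraform output -json` text.

    `output_name` and `group` are hypothetical defaults for this sketch.
    """
    outputs = json.loads(outputs_json)
    hosts = outputs[output_name]["value"]  # each output wraps its data in "value"
    lines = [f"[{group}]"] + list(hosts)
    return "\n".join(lines) + "\n"


# Sample text shaped like `terraform output -json` (addresses are made up).
sample = json.dumps({
    "web_ips": {
        "sensitive": False,
        "type": ["list", "string"],
        "value": ["203.0.113.10", "203.0.113.11"],
    }
})

print(inventory_from_outputs(sample), end="")
```

The resulting text could be saved to a file and passed to `ansible-playbook` with the `-i` option.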

FAQ: Ansible vs Terraform

1. Can Ansible be used for Infrastructure as Code (IaC)?

Yes, Ansible can be used for Infrastructure as Code, but it is primarily focused on configuration management. While it can manage cloud resources, Terraform is more specialized for infrastructure provisioning.

2. Can Terraform be used for Configuration Management?

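Terraform is not designed for configuration management. It can run simple setup steps, for example through provisioners, but HashiCorp's documentation describes provisioners as a last resort, and Terraform remains better suited to provisioning infrastructure.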

3. Which one is easier to learn: Ansible or Terraform?

Ansible is generally easier for beginners to learn because it uses YAML, which is a simple, human-readable format. Terraform is also relatively easy, but it requires learning HCL (HashiCorp Configuration Language) along with concepts such as providers and state.

4. Can Ansible and Terraform be used together?

Yes, Ansible and Terraform are often used together. Terraform can handle infrastructure provisioning, while Ansible is used for configuring and managing the software and services on those provisioned resources.

Conclusion

The choice between Ansible and Terraform ultimately depends on your specific use case. Ansible is excellent for configuration management and automation of tasks on existing infrastructure, while Terraform excels in provisioning and managing cloud infrastructure. By understanding the key differences between these two tools, you can decide which best fits your needs or how to use them together to streamline your DevOps processes.

Both tools play an integral role in modern infrastructure management and DevOps practices, making them essential for cloud-first organizations and enterprises managing large-scale systems. Thank you for reading the DevopsRoles page!