Managing and scaling cloud infrastructure efficiently matters for any business that runs on it. Robust, scalable storage is a core part of many cloud architectures, and Amazon FSx for NetApp ONTAP is a fully managed option for it. Deploying and managing FSx for NetApp ONTAP by hand, however, is time-consuming and error-prone. This is where Infrastructure as Code (IaC) tools like Terraform come in. This guide walks you through deploying FSx for NetApp ONTAP with Terraform, from a basic deployment to more advanced configurations, and points out best practices and common pitfalls along the way.

Understanding the Benefits of Terraform for FSx for NetApp ONTAP

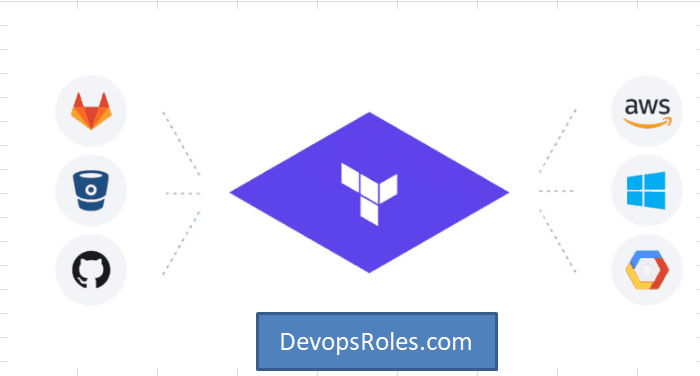

Terraform, a powerful IaC tool from HashiCorp, allows you to define and provision your infrastructure in a declarative manner. This means you describe the desired state of your FSx for NetApp ONTAP file system, and Terraform manages the process of creating, updating, and deleting it. This approach offers several key advantages:

- Automation: Automate the entire deployment process, eliminating manual steps and reducing the risk of human error.

- Consistency: Ensure consistent deployments across different environments (development, testing, production).

- Version Control: Track changes to your infrastructure as code using Git or other version control systems.

- Collaboration: Facilitate collaboration among team members by having a single source of truth for your infrastructure.

- Repeatability and Testability: Because the infrastructure is defined as code, you can review, test, and reapply it like any other code.

Setting up Your Environment for Terraform and FSx for NetApp ONTAP

Before you begin, ensure you have the following prerequisites:

- AWS Account: An active AWS account with appropriate permissions to create and manage resources.

- Terraform Installed: Download and install Terraform from the official HashiCorp website: https://www.terraform.io/downloads.html

- AWS CLI Installed and Configured: Configure the AWS CLI with your credentials to interact with AWS services.

- IAM Permissions: The IAM user or role that Terraform runs as needs permissions to create and manage FSx for NetApp ONTAP resources, along with the related networking resources (such as security groups) used in this guide.

Creating a Basic Terraform Configuration

Let’s start with a simple Terraform configuration to create a basic FSx for NetApp ONTAP file system. This example uses the minimum storage capacity for demonstration; adjust it for production environments.

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 4.0"
        }
      }
    }

    provider "aws" {
      region = "us-west-2" # Replace with your desired region
    }

    resource "aws_fsx_ontap_file_system" "example" {
      deployment_type     = "MULTI_AZ_1" # Spans two subnets, one per Availability Zone
      storage_capacity    = 1024         # In GiB (the minimum for ONTAP)
      throughput_capacity = 128          # In MB/s (the smallest supported value)
      subnet_ids          = ["subnet-xxxxxxxxxxxxxxxxx", "subnet-yyyyyyyyyyyyyyyyy"] # Replace with your subnet IDs
      preferred_subnet_id = "subnet-xxxxxxxxxxxxxxxxx" # Must be one of subnet_ids

      # Optional: set kms_key_id to the ARN of a customer managed KMS key.
      # When omitted, the AWS managed key for FSx is used.
    }

This configuration pins the AWS provider, sets the region, and creates a Multi-AZ FSx for NetApp ONTAP file system with 1,024 GiB (1 TiB) of SSD storage and 128 MB/s of throughput across two subnets. Remember to replace placeholders like subnet IDs with your actual values.
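One way to avoid hard-coding those placeholders is to pass the subnet IDs in as an input variable. The sketch below is a minimal illustration rather than a required part of the configuration; the names `fsx_subnet_ids` and `fsx_file_system_id` are hypothetical, and the validation rule assumes a Multi-AZ deployment that takes exactly two subnets.

```hcl
# Hypothetical input variable; the name is chosen for illustration.
variable "fsx_subnet_ids" {
  description = "Subnet IDs for the FSx for NetApp ONTAP file system"
  type        = list(string)

  validation {
    # Assumption: a Multi-AZ file system, which spans exactly two subnets.
    condition     = length(var.fsx_subnet_ids) == 2
    error_message = "Provide exactly two subnet IDs, one per Availability Zone."
  }
}

# Expose the file system ID so other configurations can reference it.
output "fsx_file_system_id" {
  value = aws_fsx_ontap_file_system.example.id
}
```

Inside the resource, `subnet_ids` can then be set to `var.fsx_subnet_ids`, with the actual values supplied through a `terraform.tfvars` file or the `-var` flag.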

Advanced Configurations with Terraform and FSx for NetApp ONTAP

Building upon the basic configuration, let’s explore more advanced features and options offered by Terraform and FSx for NetApp ONTAP.

Using Security Groups

For enhanced security, associate a security group with your FSx for NetApp ONTAP file system. This controls inbound and outbound network traffic.

    resource "aws_security_group" "fsx_sg" {
      name        = "fsx-security-group"
      description = "Security group for FSx for NetApp ONTAP"
      vpc_id      = "vpc-xxxxxxxxxxxxxxxxx" # Replace with the VPC that contains your subnets

      # Allows all inbound traffic from anywhere; for demonstration only.
      ingress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"] # Restrict this in production!
      }

      # Allows all outbound traffic.
      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"] # Restrict this in production!
      }
    }

    resource "aws_fsx_ontap_file_system" "example" {
      # ... other configurations ...
      security_group_ids = [aws_security_group.fsx_sg.id]
    }

Managing Snapshots

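Regular snapshots and backups of your FSx for NetApp ONTAP data are crucial for data protection and disaster recovery. ONTAP snapshots are taken per volume according to the volume’s snapshot policy, and as far as we know the AWS provider has no dedicated ONTAP snapshot resource. What Terraform can automate at the file system level is the daily automatic backup schedule:

    resource "aws_fsx_ontap_file_system" "example" {
      # ... other configurations ...
      automatic_backup_retention_days   = 7       # Days to keep daily backups (0 disables them)
      daily_automatic_backup_start_time = "02:00" # Daily start time in UTC, HH:MM
    }

Working with Volume Backups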

For improved resilience, configure backups for the volumes on your FSx for NetApp ONTAP file system. Backups are taken per volume, so individual volumes can be restored independently.

A complete backup strategy (retention, scheduling, and restore testing) also depends on how the storage virtual machines and volumes are set up after deployment. That is beyond the scope of this guide, but it’s a crucial aspect of managing the system’s data resilience.
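As a starting point, an on-demand backup of a single volume can be declared with the provider’s `aws_fsx_backup` resource. The sketch below is illustrative only: the storage virtual machine and volume (`svm1`, `vol1`, and the `backup_demo` resource names) are hypothetical and exist just so there is something to back up, and the file system reference assumes the `example` resource defined earlier.

```hcl
# Hypothetical SVM and volume, created only so there is something to back up.
resource "aws_fsx_ontap_storage_virtual_machine" "backup_demo" {
  file_system_id = aws_fsx_ontap_file_system.example.id
  name           = "svm1"
}

resource "aws_fsx_ontap_volume" "backup_demo" {
  name                       = "vol1"
  junction_path              = "/vol1"
  size_in_megabytes          = 1024
  storage_efficiency_enabled = true
  storage_virtual_machine_id = aws_fsx_ontap_storage_virtual_machine.backup_demo.id
}

# On-demand backup of that single volume.
resource "aws_fsx_backup" "backup_demo" {
  volume_id = aws_fsx_ontap_volume.backup_demo.id

  tags = {
    Name = "vol1-on-demand-backup"
  }
}
```

Because the backup is an ordinary Terraform resource, removing it from the configuration and applying will delete the backup, so keep long-lived backups in a configuration you do not routinely tear down.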

Implementing lifecycle management

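Terraform allows you to control the entire lifecycle of your FSx for NetApp ONTAP infrastructure: `terraform apply` creates and updates the file system, and `terraform destroy` removes it. Destroying the file system also deletes the data it holds, so treat that command with care.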
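To guard against accidental deletion, Terraform’s `lifecycle` meta-argument can be added to the resource. The following is a minimal sketch, not a full production configuration; the resource name `protected` is hypothetical and the subnet IDs are placeholders.

```hcl
resource "aws_fsx_ontap_file_system" "protected" {
  deployment_type     = "MULTI_AZ_1"
  storage_capacity    = 1024
  throughput_capacity = 128
  subnet_ids          = ["subnet-xxxxxxxxxxxxxxxxx", "subnet-yyyyyyyyyyyyyyyyy"] # Placeholders
  preferred_subnet_id = "subnet-xxxxxxxxxxxxxxxxx"

  lifecycle {
    # Any plan that would destroy this resource fails with an error instead.
    prevent_destroy = true
  }
}
```

With this in place, `terraform destroy` stops with an error for this resource until the `prevent_destroy` setting is removed from the configuration.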

Deploying and Managing Your FSx for NetApp ONTAP Infrastructure

- Initialize Terraform: Run `terraform init` to download the necessary providers.

- Plan the Deployment: Run `terraform plan` to see what changes Terraform will make.

- Apply the Changes: Run `terraform apply` to create the FSx for NetApp ONTAP file system.

- Monitor the Deployment: After applying the configuration, monitor the AWS Management Console to ensure the FSx for NetApp ONTAP file system is created successfully.

- Manage and Update: Use `terraform apply` to update your configuration as needed.

- Destroy the Infrastructure: Use `terraform destroy` to delete the FSx for NetApp ONTAP file system when it’s no longer needed.

Frequently Asked Questions

Q1: What are the pricing considerations for using FSx for NetApp ONTAP?

Amazon FSx for NetApp ONTAP pricing depends on several factors, including provisioned SSD storage, capacity pool storage, throughput capacity, and backup storage. The AWS Pricing Calculator is your best resource for estimating costs based on your specific needs. Remember to account for data transfer costs as well. Refer to the official AWS documentation for the most up-to-date pricing information.

Q2: How can I manage access control to my FSx for NetApp ONTAP file system?

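Access control works at several layers. Network access is limited by the security groups attached to the file system, and AWS-level operations (creating or deleting file systems, volumes, and backups) are governed by IAM. File-level permissions and quotas are managed inside ONTAP, where a storage virtual machine can be joined to your existing Active Directory so that only authorized users have access to your data.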
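For the Active Directory part, the AWS provider lets a storage virtual machine be joined to a self-managed domain at creation time. The sketch below is an assumption-laden illustration: the domain name, DNS IPs, NetBIOS name, SVM name, and variable names are all hypothetical, and it assumes the file system resource named `example` from earlier.

```hcl
# Hypothetical credentials for the account that joins the SVM to the domain.
variable "ad_join_username" {
  type = string
}

variable "ad_join_password" {
  type      = string
  sensitive = true # Keeps the value out of plan and apply output
}

resource "aws_fsx_ontap_storage_virtual_machine" "smb" {
  file_system_id = aws_fsx_ontap_file_system.example.id
  name           = "svm_smb"

  active_directory_configuration {
    netbios_name = "FSXSMB" # Hypothetical computer name in the domain

    self_managed_active_directory_configuration {
      dns_ips     = ["10.0.0.10", "10.0.0.11"] # Placeholder domain DNS servers
      domain_name = "corp.example.com"         # Placeholder domain
      username    = var.ad_join_username
      password    = var.ad_join_password
    }
  }
}
```

Note that marking the variable as sensitive hides it from CLI output only; the password is still stored in the Terraform state, so the state itself needs to be protected.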

Q3: Can I use Terraform to manage multiple FSx for NetApp ONTAP file systems?

Yes, you can use Terraform to manage multiple FSx for NetApp ONTAP file systems within the same configuration, using resource blocks to define different systems with unique names, configurations, and settings.
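Besides writing one resource block per file system, a single block with `for_each` can cover several of them. This is a sketch under stated assumptions: the `file_systems` variable, its fields, and the `per_env` resource name are hypothetical, and every file system is assumed to be Multi-AZ with exactly two subnets.

```hcl
# Hypothetical map of file systems, keyed by environment name.
variable "file_systems" {
  type = map(object({
    storage_capacity    = number       # GiB
    throughput_capacity = number       # MB/s
    subnet_ids          = list(string) # Two subnets for Multi-AZ
  }))
}

resource "aws_fsx_ontap_file_system" "per_env" {
  for_each = var.file_systems

  deployment_type     = "MULTI_AZ_1"
  storage_capacity    = each.value.storage_capacity
  throughput_capacity = each.value.throughput_capacity
  subnet_ids          = each.value.subnet_ids
  preferred_subnet_id = each.value.subnet_ids[0]

  tags = {
    Name = "fsx-ontap-${each.key}"
  }
}
```

Each instance is then addressed by its map key, for example `aws_fsx_ontap_file_system.per_env["dev"]`, so adding or removing an environment only changes the variable value.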

Q4: What are the limitations of using Terraform with FSx for NetApp ONTAP?

While Terraform simplifies deployment and management, it doesn’t manage all aspects of FSx for NetApp ONTAP. Fine-grained configuration options within the ONTAP system itself still need to be managed through the ONTAP management interface. Additionally, complex networking setups might require additional configurations outside the scope of this basic Terraform configuration.

Conclusion

In conclusion, deploying Amazon FSx for NetApp ONTAP with Terraform offers a robust and efficient approach to managing your file storage infrastructure. By applying Infrastructure as Code (IaC) principles, you gain automation, consistency, version control, and easier collaboration.

This guide has walked you through the essential steps, from initial setup and a basic configuration to security groups and data protection. You now have what you need to initialize, plan, apply, and manage your FSx for NetApp ONTAP deployments, and to keep your storage resources provisioned and maintained in a repeatable way.