For DevOps engineers, cloud architects, and anyone managing containerized applications, the ability to automate infrastructure provisioning is paramount. Red Hat OpenShift Service on AWS (ROSA), a managed OpenShift (Kubernetes) platform, combined with Terraform, a widely used Infrastructure as Code (IaC) tool, offers a streamlined and repeatable method for building and managing clusters. This guide walks through how to build ROSA clusters with Terraform, for both beginners and experienced users. We’ll explore various use cases, best practices, and troubleshooting techniques so you can use this combination effectively.

Understanding the Power of ROSA and Terraform

Red Hat OpenShift Service on AWS (ROSA) provides a robust and secure managed platform for deploying and running containerized applications. Its enterprise-grade features, including built-in security, high availability, and integrated management tools, make it a common choice for mission-critical applications. However, manually setting up and managing ROSA clusters can be time-consuming and error-prone.

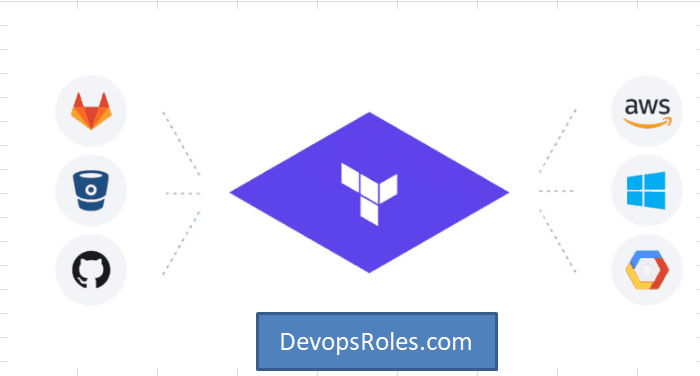

Terraform, an open-source IaC tool, allows you to define and manage your infrastructure in a declarative manner. Using code, you describe the desired state of your ROSA cluster, and Terraform ensures it’s provisioned and maintained according to your specifications. This eliminates manual configuration, promotes consistency, and facilitates version control, making it ideal for managing complex infrastructure like ROSA clusters.

Setting up Your Environment for Building ROSA Clusters with Terraform

Prerequisites

- An AWS account: ROSA runs only on AWS (Azure and GCP have their own, separate OpenShift offerings), so this guide uses AWS throughout.

- Terraform installed: Download and install Terraform from the official website: https://www.terraform.io/downloads.html

- AWS credentials configured: Ensure your AWS credentials are configured correctly using AWS CLI or environment variables.

- ROSA account and credentials: You’ll need a Red Hat account with access to ROSA, plus the `rosa` CLI installed and logged in, because the example configuration below calls it.

- A text editor or IDE: To write your Terraform configuration files.

Creating Your Terraform Configuration

The core of building your ROSA cluster with Terraform lies in your configuration files (typically named main.tf). These files define the resources you want to create, such as networking and the OpenShift cluster itself. Keep in mind that ROSA is a managed service that provisions its own control plane and worker nodes, so the `aws_instance` blocks in the example are placeholders rather than something ROSA requires. A basic structure might look like this (note: this is a simplified example and requires further customization based on your specific needs):

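    # main.tf

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 4.0"
        }
      }
    }

    provider "aws" {
      region = "us-east-1" # Replace with your desired region
    }

    # Placeholder blocks: ROSA creates its own control plane and worker nodes,
    # so fill these in only if you need additional EC2 instances.
    resource "aws_instance" "master" {
      # ... (Master node configurations) ...
    }

    resource "aws_instance" "worker" {
      # ... (Worker node configurations) ...
    }

    # ... (Further configurations for networking, security groups, etc.) ...

    # Random suffix that keeps the cluster name unique. Four bytes give eight
    # hex characters, which keeps the prefixed name short; ROSA limits cluster
    # name length and expects a leading letter (check the current limits).
    resource "random_id" "cluster_id" {
      byte_length = 4
    }

    resource "null_resource" "rosa_install" {
      # Runs once, when this resource is created. Assumes the rosa CLI is
      # installed and already authenticated with `rosa login`.
      provisioner "local-exec" {
        command = "rosa create cluster --cluster-name rosa-${random_id.cluster_id.hex} --region us-east-1 --yes" # Replace placeholders with appropriate values.
      }

      depends_on = [aws_instance.master, aws_instance.worker]
    }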

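Important Note: Replace the placeholders with your actual values. The `rosa create cluster` command needs credentials from your Red Hat account; with the `rosa` CLI these are normally supplied by running `rosa login` beforehand, so check `rosa create cluster --help` for the flags your version accepts. This example uses a `null_resource` and a `local-exec` provisioner for simplicity, which means Terraform only runs the command and does not track the cluster itself in state. For production, consider a more robust method, such as Red Hat's Terraform provider for ROSA (`terraform-redhat/rhcs`), which models the cluster as a Terraform resource.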
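One limitation of the `local-exec` approach above is that Terraform has nothing to run when the resource is destroyed, so `terraform destroy` would leave the cluster behind. The following is a minimal sketch of one way to pair creation with cleanup. The variable names `cluster_name` and `region` are illustrative assumptions, and the sketch assumes the `rosa` CLI is installed and logged in.

```hcl
# Sketch only: pairs cluster creation with a destroy-time cleanup step.
variable "cluster_name" {
  type        = string
  description = "Hypothetical input: name of the ROSA cluster to create"
}

variable "region" {
  type    = string
  default = "us-east-1"
}

resource "null_resource" "rosa_cluster" {
  # Destroy-time provisioners may only reference `self`, so the values
  # they need are stored in triggers. Changing a trigger value also makes
  # Terraform replace this resource and rerun both provisioners.
  triggers = {
    cluster_name = var.cluster_name
    region       = var.region
  }

  provisioner "local-exec" {
    command = "rosa create cluster --cluster-name ${self.triggers.cluster_name} --region ${self.triggers.region} --yes"
  }

  provisioner "local-exec" {
    when    = destroy
    command = "rosa delete cluster --cluster ${self.triggers.cluster_name} --region ${self.triggers.region} --yes"
  }
}
```

Cluster deletion is asynchronous, so a replacement triggered by renaming the cluster may need the old cluster to finish uninstalling first. Treat this as a stopgap rather than a substitute for a provider that tracks the cluster in state.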

Advanced Scenarios and Customization

Multi-AZ Deployments for High Availability

For enhanced high availability, you can configure your Terraform code to deploy ROSA across multiple Availability Zones (AZs). This ensures redundancy and minimizes downtime if a single AZ fails. Because ROSA places the cluster nodes itself, you request a multi-AZ cluster at creation time (the `rosa create cluster` command has a `--multi-az` flag) and, if you bring your own VPC, use Terraform to provide subnets in each AZ along with the networking that connects them.
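As a rough illustration of the networking side, the sketch below creates one private subnet in each of three AZs. The CIDR ranges and resource names are assumptions chosen for the example, and a usable ROSA VPC also needs pieces not shown here, such as public subnets, NAT gateways, and route tables.

```hcl
# Sketch only: one private subnet per Availability Zone.
data "aws_availability_zones" "available" {
  state = "available"
}

resource "aws_vpc" "rosa" {
  cidr_block           = "10.0.0.0/16" # assumed range for the example
  enable_dns_support   = true
  enable_dns_hostnames = true
}

resource "aws_subnet" "private" {
  count = 3 # assumes the chosen region has at least three AZs

  vpc_id            = aws_vpc.rosa.id
  availability_zone = data.aws_availability_zones.available.names[count.index]

  # Carves a distinct /24 out of the VPC range for each subnet.
  cidr_block = cidrsubnet(aws_vpc.rosa.cidr_block, 8, count.index)

  tags = {
    Name = "rosa-private-${count.index}"
  }
}

output "private_subnet_ids" {
  value = aws_subnet.private[*].id
}
```

The subnet IDs from the output could then be passed to cluster creation, for example through the `--subnet-ids` flag of `rosa create cluster`.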

Integrating with Other Services

Terraform allows for seamless integration with other AWS services. You can easily provision resources like load balancers, databases (e.g., RDS), and storage (e.g., S3) alongside your ROSA cluster. This provides a comprehensive, automated infrastructure for your applications.
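For example, an S3 bucket for application data could live in the same configuration as the cluster. This is a minimal sketch; the bucket prefix and resource names are placeholders invented for illustration.

```hcl
# Sketch only: a private, versioned S3 bucket for application data.
resource "aws_s3_bucket" "app_data" {
  # A prefix lets AWS append a unique suffix, avoiding global name clashes.
  bucket_prefix = "rosa-app-data-"
}

resource "aws_s3_bucket_versioning" "app_data" {
  bucket = aws_s3_bucket.app_data.id

  versioning_configuration {
    status = "Enabled"
  }
}

resource "aws_s3_bucket_public_access_block" "app_data" {
  bucket = aws_s3_bucket.app_data.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```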

Using Terraform Modules for Reusability

For large-scale deployments or to promote code reusability, you can create Terraform modules. A module encapsulates a set of resources that can be reused across different projects. This improves maintainability and reduces redundancy in your code.
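A module call might look like the sketch below. The module path `modules/rosa-cluster` and its two input variables are hypothetical; they stand in for whatever your own module exposes.

```hcl
# modules/rosa-cluster/variables.tf (hypothetical module inputs)
variable "cluster_name" {
  type        = string
  description = "Name of the ROSA cluster"
}

variable "region" {
  type        = string
  description = "AWS region to deploy into"
}

# environments/dev/main.tf (a caller that reuses the module)
module "rosa_dev" {
  source = "../../modules/rosa-cluster"

  cluster_name = "rosa-dev"
  region       = "us-east-1"
}
```

A second environment would reuse the same module with different inputs, which keeps the cluster definition in one place.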

Implementing CI/CD with Terraform

By integrating Terraform with CI/CD pipelines (e.g., Jenkins, GitLab CI, GitHub Actions), you can automate the entire process of creating and managing ROSA clusters. Changes to your Terraform code can automatically trigger the provisioning or updates to your cluster, ensuring that your infrastructure remains consistent with your code.
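Pipeline runners are usually ephemeral, so the Terraform state has to live somewhere shared. One common option on AWS is an S3 backend with locking, sketched below. The bucket and table names are hypothetical and would need to exist before `terraform init` runs.

```hcl
# Sketch only: remote state so every pipeline run sees the same state.
terraform {
  backend "s3" {
    bucket         = "example-terraform-state" # hypothetical bucket name
    key            = "rosa/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "example-terraform-locks" # hypothetical lock table
    encrypt        = true
  }
}
```

With shared state in place, a typical pipeline runs `terraform plan` on pull requests and `terraform apply` only after review and merge.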

Real-World Examples and Use Cases

Scenario 1: Deploying a Simple Application

A DevOps team wants to quickly deploy a simple web application on ROSA. Using Terraform, they can automate the creation of the cluster, configure networking, and deploy the application through a pipeline. This eliminates manual steps and ensures consistent deployment across environments.

Scenario 2: Setting Up a Database Cluster

A DBA needs to provision a highly available database cluster to support a mission-critical application deployed on ROSA. Terraform can automate the setup of the database (e.g., using RDS on AWS), configure network access, and integrate it with the ROSA cluster, creating a seamless and manageable infrastructure.
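A minimal sketch of the database part of this scenario follows. The identifier, engine, instance size, and user name are assumptions for illustration, and the sketch leaves out the subnet group and security group a real deployment would use to connect the database to the cluster's VPC.

```hcl
# Sketch only: a Multi-AZ PostgreSQL instance on RDS.
variable "db_password" {
  type        = string
  sensitive   = true
  description = "Database password, supplied outside the code"
}

resource "aws_db_instance" "app" {
  identifier        = "rosa-app-db" # hypothetical name
  engine            = "postgres"
  instance_class    = "db.t3.medium"
  allocated_storage = 20

  db_name  = "appdb"
  username = "appuser"
  password = var.db_password

  multi_az          = true # keeps a standby replica in another AZ
  storage_encrypted = true

  # Convenient for experiments; consider a final snapshot in production.
  skip_final_snapshot = true
}
```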

Scenario 3: Building a Machine Learning Platform

An AI/ML engineer needs to create a scalable platform for training and deploying machine learning models. Terraform can provision the necessary compute resources (e.g., high-performance instances), configure networking, and create the ROSA cluster to host the AI/ML applications and services. This allows for efficient resource utilization and scaling.

Frequently Asked Questions (FAQ)

Q1: What are the benefits of using Terraform to build ROSA clusters?

Using Terraform offers several key benefits: Automation (reduced manual effort), Consistency (repeatable deployments), Version Control (track changes and revert if needed), Collaboration (easier teamwork), and Scalability (easily manage large clusters).

Q2: How do I handle secrets and sensitive information in my Terraform code?

Avoid hardcoding secrets directly into your Terraform code. Use secure methods like environment variables, HashiCorp Vault, or AWS Secrets Manager to store and manage sensitive information. Terraform supports these integrations, allowing you to securely access these secrets during the provisioning process.
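The sketch below shows two of these options side by side: a sensitive input variable, which can be set through an environment variable named `TF_VAR_rosa_token`, and a value read from AWS Secrets Manager. The variable name and the secret ID are hypothetical.

```hcl
# Sketch only: two ways to keep secrets out of the code.

# Option 1: a sensitive variable, set via the TF_VAR_rosa_token
# environment variable. Terraform redacts it in plan and apply output.
variable "rosa_token" {
  type        = string
  sensitive   = true
  description = "Hypothetical input: Red Hat token used to log in to ROSA"
}

# Option 2: read an existing secret from AWS Secrets Manager.
data "aws_secretsmanager_secret_version" "db_password" {
  secret_id = "example/rosa/db-password" # hypothetical secret ID
}

locals {
  db_password = data.aws_secretsmanager_secret_version.db_password.secret_string
}
```

Values obtained either way are still written to the Terraform state file, so the state itself needs to be stored and accessed securely.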

Q3: What are some common troubleshooting steps when using Terraform with ROSA?

Check your Terraform configuration for syntax errors. Verify your AWS credentials and ROSA credentials. Ensure network connectivity between your resources. Examine the Terraform logs for error messages. Consult the ROSA and Terraform documentation for solutions to specific problems. The `terraform validate` and `terraform plan` commands are crucial for identifying issues before applying changes.
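Some mistakes can be caught before any command reaches AWS or ROSA by adding validation rules to input variables, which Terraform checks during `terraform plan`. The sketch below validates a hypothetical `cluster_name` variable; the 15-character limit and the naming pattern are assumptions that should be checked against the current ROSA documentation.

```hcl
# Sketch only: fail early on an invalid cluster name.
variable "cluster_name" {
  type        = string
  description = "Hypothetical input: name of the ROSA cluster"

  validation {
    # Assumed rule: a lowercase letter first, then up to 14 more
    # lowercase letters, digits, or hyphens.
    condition     = can(regex("^[a-z][a-z0-9-]{0,14}$", var.cluster_name))
    error_message = "The cluster name must start with a lowercase letter, contain only lowercase letters, digits, and hyphens, and be at most 15 characters long."
  }
}
```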

Q4: How can I update an existing ROSA cluster managed by Terraform?

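To update an existing cluster, modify your Terraform configuration to reflect the desired changes. Run `terraform plan` to see the planned changes and `terraform apply` to execute them. Terraform updates only the resources whose configuration has changed. Note that a `local-exec` provisioner runs only when its resource is created, so editing the `rosa` command string in the earlier example has no effect on an existing cluster; such changes need to be made through the `rosa` CLI or by a resource that tracks the cluster in state. Be mindful of potential downtime during updates, especially for changes affecting core cluster components.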

Q5: What are the security considerations when using Terraform to manage ROSA?

Security is paramount. Use appropriate security groups and IAM roles to restrict access to your resources. Regularly update Terraform and its provider plugins to benefit from the latest security patches. Implement proper access controls and use secrets management solutions as described above. Always review the `terraform plan` output before applying any changes.
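As one concrete example of restricting access, the sketch below defines a security group that accepts HTTPS only from a single administrative network. The variables `vpc_id` and `admin_cidr` are hypothetical inputs, and which resources the group is attached to depends on your setup.

```hcl
# Sketch only: allow inbound HTTPS from one trusted network.
variable "vpc_id" {
  type        = string
  description = "Hypothetical input: ID of the VPC to create the group in"
}

variable "admin_cidr" {
  type        = string
  description = "Hypothetical input: trusted network, e.g. 203.0.113.0/24"
}

resource "aws_security_group" "restricted" {
  name_prefix = "rosa-restricted-"
  vpc_id      = var.vpc_id

  ingress {
    description = "HTTPS from the admin network only"
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = [var.admin_cidr]
  }

  egress {
    description = "Allow all outbound traffic"
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```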

Conclusion

Building ROSA (Red Hat OpenShift Service on AWS) clusters with Terraform offers a robust, automated, and repeatable approach to managing cloud-native infrastructure. By using Terraform’s Infrastructure as Code (IaC) capabilities, organizations can streamline the deployment process, enforce consistency across environments, and reduce human error. This method not only accelerates cluster provisioning but also improves scalability, governance, and operational efficiency, making it a good fit for enterprises that want to integrate OpenShift into their AWS ecosystem in a secure and maintainable way.