In today’s dynamic cloud landscape, organizations are constantly seeking ways to accelerate innovation while maintaining stringent governance, compliance, and cost control. As enterprises scale their adoption of AWS, the challenge of standardizing infrastructure provisioning, ensuring adherence to best practices, and empowering development teams with self-service capabilities becomes increasingly complex. This is where the synergy between AWS Service Catalog and Terraform Cloud shines, offering a powerful solution to streamline cloud resource deployment and enforce organizational policies.

This in-depth guide will explore how to master AWS Service Catalog integration with Terraform Cloud, providing you with the knowledge and practical steps to build a robust, governed, and automated cloud provisioning framework. We’ll delve into the core concepts, demonstrate practical implementation with code examples, and uncover advanced strategies to elevate your cloud infrastructure management.

Table of Contents

- 1 Understanding AWS Service Catalog: The Foundation of Governed Self-Service

- 2 Introduction to Terraform Cloud

- 3 The Synergistic Benefits: AWS Service Catalog and Terraform Cloud

- 4 Prerequisites and Setup

- 5 Step-by-Step Implementation: Creating a Simple Product

- 6 Advanced Scenarios and Best Practices

- 7 Troubleshooting Common Issues

- 8 Frequently Asked Questions

- 8.1 What is the difference between AWS Service Catalog and AWS CloudFormation?

- 8.2 Can I use Terraform Open Source directly with AWS Service Catalog without Terraform Cloud?

- 8.3 How does AWS Service Catalog handle updates to provisioned products?

- 8.4 What are the security best practices when integrating Service Catalog with Terraform Cloud?

Understanding AWS Service Catalog: The Foundation of Governed Self-Service

What is AWS Service Catalog?

AWS Service Catalog is a service that allows organizations to create and manage catalogs of IT services that are approved for use on AWS. These IT services can include virtual machine images, servers, software, databases, and complete multi-tier application architectures. Service Catalog helps organizations achieve centralized governance and ensure compliance with corporate standards while enabling users to quickly deploy only the pre-approved IT services they need.

The primary problems AWS Service Catalog solves include:

- Governance: Ensures that only approved AWS resources and architectures are provisioned.

- Compliance: Helps meet regulatory and security requirements by enforcing specific configurations.

- Self-Service: Empowers end-users (developers, data scientists) to provision resources without direct intervention from central IT.

- Standardization: Promotes consistency in deployments across teams and projects.

- Cost Control: Prevents the provisioning of unapproved, potentially costly resources.

Key Components of AWS Service Catalog

To effectively utilize AWS Service Catalog, it’s crucial to understand its core components:

- Products: A product is an IT service that you want to make available to end-users. It can be a single EC2 instance, a configured RDS database, or a complex application stack. Products are defined by a template, typically an AWS CloudFormation template, but crucially for this article, they can also be defined by Terraform configurations.

- Portfolios: A portfolio is a collection of products. It allows you to organize products, control access to them, and apply constraints to ensure proper usage. For example, you might have separate portfolios for “Development,” “Production,” or “Data Science” teams.

- Constraints: Constraints define how end-users can deploy a product. They can be of several types:

  - Launch Constraints: Specify an IAM role that AWS Service Catalog assumes to launch the product. This decouples the end-user’s permissions from the permissions required to provision the resources, enabling least privilege.

  - Template Constraints: Apply additional rules or modifications to the underlying template during provisioning, ensuring compliance (e.g., specific instance types allowed).

  - TagOption Constraints: Automate the application of tags to provisioned resources, aiding in cost allocation and resource management.

- Provisioned Products: An instance of a product that an end-user has launched.

Introduction to Terraform Cloud

What is Terraform Cloud?

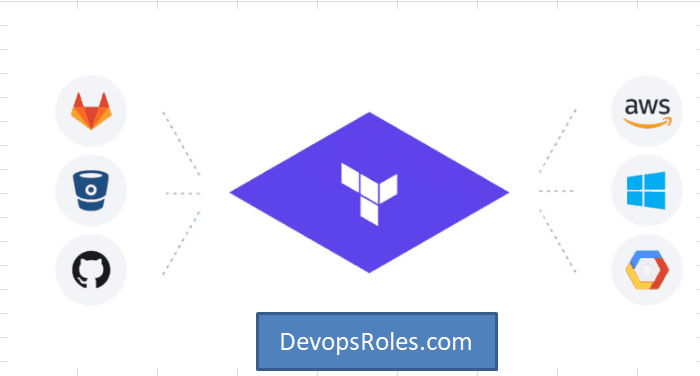

Terraform Cloud is a managed service offered by HashiCorp that provides a collaborative platform for infrastructure as code (IaC) using Terraform. While open-source Terraform excels at provisioning and managing infrastructure, Terraform Cloud extends its capabilities with a suite of features designed for team collaboration, governance, and automation in production environments.

Key features of Terraform Cloud include:

- Remote State Management: Securely stores and manages Terraform state files, preventing concurrency issues and accidental deletions.

- Remote Operations: Executes Terraform runs remotely, reducing the need for local installations and ensuring consistent environments.

- Version Control System (VCS) Integration: Automatically triggers Terraform runs on code changes in integrated VCS repositories (GitHub, GitLab, Bitbucket, Azure DevOps).

- Team & Governance Features: Provides role-based access control (RBAC), policy as code (Sentinel), and cost estimation tools.

- Private Module Registry: Allows organizations to share and reuse Terraform modules internally.

- API-Driven Workflow: Enables programmatic interaction and integration with CI/CD pipelines.

Why Terraform for AWS Service Catalog?

Traditionally, AWS Service Catalog relied heavily on CloudFormation templates for defining products. While CloudFormation is powerful, Terraform offers several advantages that make it an excellent choice for defining AWS Service Catalog products, especially for organizations already invested in the Terraform ecosystem:

- Multi-Cloud/Hybrid Cloud Consistency: Terraform’s provider model supports various cloud providers, allowing a consistent IaC approach across different environments if needed.

- Mature Ecosystem: A vast community, rich module ecosystem, and strong tooling support.

- Declarative and Idempotent: Ensures that your infrastructure configuration matches the desired state, making deployments predictable.

- State Management: Terraform’s state file precisely maps real-world resources to your configuration.

- Advanced Resource Management: Offers powerful features like `count`, `for_each`, and data sources that can simplify complex configurations.

Using Terraform Cloud further enhances this by providing a centralized, secure, and collaborative environment to manage these Terraform-defined Service Catalog products.

The Synergistic Benefits: AWS Service Catalog and Terraform Cloud

Combining AWS Service Catalog with Terraform Cloud creates a powerful synergy that addresses many challenges in modern cloud infrastructure management:

Enhanced Governance and Compliance

- Policy as Code (Sentinel): Terraform Cloud’s Sentinel policies run between the plan and apply phases of each run, so proposed infrastructure changes are checked against organizational security, cost, and operational standards before anything is provisioned.

- Launch Constraints: Service Catalog’s launch constraints ensure that products are provisioned by a dedicated IAM role that carries the permissions the product requires, while end-users only need permission to launch the product, adhering to the principle of least privilege.

- Standardized Modules: Using private Terraform modules in Terraform Cloud ensures that all Service Catalog products are built upon approved, audited, and version-controlled infrastructure patterns.

Standardized Provisioning and Self-Service

- Consistent Deployments: Terraform’s declarative nature, managed by Terraform Cloud, ensures that every time a user provisions a product, it’s deployed consistently according to the defined template.

- Developer Empowerment: Developers and other end-users can provision their required infrastructure through a user-friendly Service Catalog interface, without needing deep AWS or Terraform expertise.

- Version Control: Terraform Cloud’s VCS integration means that all infrastructure definitions are versioned, auditable, and easily revertible.

Accelerated Deployment and Reduced Operational Overhead

- Automation: Automated Terraform runs via Terraform Cloud eliminate manual steps, speeding up the provisioning process.

- Reduced Rework: Standardized products reduce the need for central IT to manually configure resources for individual teams.

- Auditing and Transparency: Terraform Cloud provides detailed logs of all runs, and AWS Service Catalog tracks who launched which product, offering complete transparency.

Prerequisites and Setup

Before diving into implementation, ensure you have the following:

AWS Account Configuration

- An active AWS account with administrative access for initial setup.

- An IAM user or role with permissions to create and manage AWS Service Catalog resources (`servicecatalog:*`), IAM roles, S3 buckets, and any other resources your products will provision. It’s recommended to follow the principle of least privilege.

Terraform Cloud Workspace Setup

- A Terraform Cloud account. You can sign up for a free tier.

- An organization within Terraform Cloud.

- A new workspace for your Service Catalog products. Connect this workspace to a VCS repository (e.g., GitHub) where your Terraform configurations will reside.

- Configure AWS credentials in your Terraform Cloud workspace. This can be done via environment variables (e.g., `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, `AWS_SESSION_TOKEN`) or by using AWS assumed roles directly within Terraform Cloud.

Example of setting environment variables in Terraform Cloud workspace:

- Go to your workspace settings.

- Navigate to “Environment Variables”.

- Add `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` as sensitive variables.

- Optionally, add `AWS_REGION`.
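
The same variables can also be managed as code instead of through the UI. The following is a minimal sketch using HashiCorp’s `tfe` provider, assuming the provider is already authenticated with a Terraform Cloud API token. The organization and workspace names reuse the placeholders from this guide, and the two input variables are hypothetical names introduced for illustration.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Hypothetical inputs; supply them from a secure source, never from committed files.
variable "aws_access_key_id" {
  type      = string
  sensitive = true
}

variable "aws_secret_access_key" {
  type      = string
  sensitive = true
}

# Look up the workspace that runs the Service Catalog configuration.
data "tfe_workspace" "service_catalog" {
  name         = "service-catalog-products-workspace"
  organization = "your-tfc-org-name"
}

# Environment variables (category = "env") are exported to the run environment.
resource "tfe_variable" "aws_access_key_id" {
  key          = "AWS_ACCESS_KEY_ID"
  value        = var.aws_access_key_id
  category     = "env"
  sensitive    = true
  workspace_id = data.tfe_workspace.service_catalog.id
}

resource "tfe_variable" "aws_secret_access_key" {
  key          = "AWS_SECRET_ACCESS_KEY"
  value        = var.aws_secret_access_key
  category     = "env"
  sensitive    = true
  workspace_id = data.tfe_workspace.service_catalog.id
}

resource "tfe_variable" "aws_region" {
  key          = "AWS_REGION"
  value        = "us-east-1"
  category     = "env"
  workspace_id = data.tfe_workspace.service_catalog.id
}
```

A configuration like this would normally live in a separate administrative workspace, since it sets the credentials that the target workspace depends on.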

IAM Permissions for Service Catalog

You’ll need specific IAM permissions:

- For the Terraform User/Role: Permissions to create/manage Service Catalog resources, IAM roles, and the resources provisioned by your products.

- For the Service Catalog Launch Role: This is an IAM role that AWS Service Catalog assumes to provision resources. It needs permissions to create all resources defined in your product’s Terraform configuration. This role will be specified in the “Launch Constraint” for your portfolio.

- For the End-User: Permissions to access and provision products from the Service Catalog UI. Typically, this involves `servicecatalog:List*`, `servicecatalog:Describe*`, and `servicecatalog:ProvisionProduct`.
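
As a rough illustration of the end-user permissions listed above, the sketch below builds a customer-managed policy from those actions. The policy name is hypothetical, and `servicecatalog:SearchProducts` is added on the assumption that users browse products through the console; adjust the action list to your own needs.

```hcl
# Minimal end-user permissions: browse the catalog and launch products.
data "aws_iam_policy_document" "sc_end_user" {
  statement {
    sid    = "BrowseAndLaunchProducts"
    effect = "Allow"

    actions = [
      "servicecatalog:List*",
      "servicecatalog:Describe*",
      "servicecatalog:SearchProducts",
      "servicecatalog:ProvisionProduct",
    ]

    resources = ["*"]
  }
}

resource "aws_iam_policy" "sc_end_user" {
  name   = "ServiceCatalogEndUserMinimal" # hypothetical name
  policy = data.aws_iam_policy_document.sc_end_user.json
}
```

This sketch does not grant permission to update or terminate provisioned products; those actions would need to be added if end-users manage the full lifecycle themselves.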

Step-by-Step Implementation: Creating a Simple Product

Let’s walk through creating a simple S3 bucket product in AWS Service Catalog using Terraform Cloud. This will involve defining the S3 bucket in Terraform, packaging it as a Service Catalog product, and making it available through a portfolio.

Defining the Product in Terraform (Example: S3 Bucket)

First, we’ll create a reusable Terraform module for our S3 bucket. This module will be the “product” that users can provision.

Terraform Module for S3 Bucket

Create a directory structure like this in your VCS repository:

    my-service-catalog-products/
    ├── s3-bucket-product/
    │   ├── main.tf
    │   ├── variables.tf
    │   └── outputs.tf
    ├── main.tf
    └── versions.tf

my-service-catalog-products/s3-bucket-product/main.tf:

    resource "aws_s3_bucket" "this" {
      bucket = var.bucket_name

      # Note: the `acl` argument is deprecated in AWS provider v4 and later;
      # the separate aws_s3_bucket_acl resource is the preferred way to manage ACLs.
      acl = var.acl

      tags = merge(
        var.tags,
        {
          "ManagedBy" = "ServiceCatalog"
          "Product"   = "S3Bucket"
        }
      )
    }

    # Block every form of public access to the bucket.
    resource "aws_s3_bucket_public_access_block" "this" {
      bucket = aws_s3_bucket.this.id

      block_public_acls       = true
      block_public_policy     = true
      ignore_public_acls      = true
      restrict_public_buckets = true
    }

my-service-catalog-products/s3-bucket-product/outputs.tf:

    output "bucket_id" {
      description = "The name of the S3 bucket."
      value       = aws_s3_bucket.this.id
    }

    output "bucket_arn" {
      description = "The ARN of the S3 bucket."
      value       = aws_s3_bucket.this.arn
    }

my-service-catalog-products/s3-bucket-product/variables.tf:

    variable "bucket_name" {
      description = "Desired name of the S3 bucket."
      type        = string
    }

    variable "acl" {
      description = "Canned ACL to apply to the S3 bucket. Private is recommended."
      type        = string
      default     = "private"

      validation {
        condition = contains([
          "private",
          "public-read",
          "public-read-write",
          "aws-exec-read",
          "authenticated-read",
          "bucket-owner-read",
          "bucket-owner-full-control",
          "log-delivery-write",
        ], var.acl)
        error_message = "Invalid ACL provided. Must be one of the AWS S3 canned ACLs."
      }
    }

    variable "tags" {
      description = "A map of tags to assign to the bucket."
      type        = map(string)
      default     = {}
    }
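
To sanity-check the module before wiring it into Service Catalog, it can be called from a scratch configuration. The sketch below assumes it sits in the repository root next to the `s3-bucket-product` directory; the bucket name and tag values are hypothetical.

```hcl
# Call the S3 bucket module with explicit inputs.
module "example_bucket" {
  source = "./s3-bucket-product"

  bucket_name = "example-team-artifacts-12345" # hypothetical; must be globally unique
  acl         = "private"

  tags = {
    Project = "MyProject"
  }
}

# Surface the module outputs so they show up in the run summary.
output "example_bucket_id" {
  value = module.example_bucket.bucket_id
}

output "example_bucket_arn" {
  value = module.example_bucket.bucket_arn
}
```

These are the same inputs that a Service Catalog launch would eventually pass through as product parameters.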

Now, we need a root Terraform configuration that will define the Service Catalog product and portfolio. This will reside in the main directory.

my-service-catalog-products/versions.tf:

    terraform {
      required_version = ">= 1.1.0" # The cloud block below requires Terraform 1.1 or later

      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }

      cloud {
        organization = "your-tfc-org-name" # Replace with your Terraform Cloud organization name

        workspaces {
          name = "service-catalog-products-workspace" # Replace with your Terraform Cloud workspace name
        }
      }
    }

    provider "aws" {
      region = "us-east-1" # Or your desired region
    }

my-service-catalog-products/main.tf (This is where the Service Catalog resources will be defined):

    # IAM Role for Service Catalog to launch products
    resource "aws_iam_role" "servicecatalog_launch_role" {
      name = "ServiceCatalogLaunchRole"

      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Action = "sts:AssumeRole"
            Effect = "Allow"
            Principal = {
              Service = "servicecatalog.amazonaws.com"
            }
          },
          {
            Action = "sts:AssumeRole"
            Effect = "Allow"
            Principal = {
              AWS = data.aws_caller_identity.current.account_id # Allows current account to assume this role for testing
            }
          }
        ]
      })
    }

    resource "aws_iam_role_policy" "servicecatalog_launch_policy" {
      name = "ServiceCatalogLaunchPolicy"
      role = aws_iam_role.servicecatalog_launch_role.id

      policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Action   = ["s3:*", "iam:GetRole", "iam:PassRole"] # Grant necessary permissions for S3 product
            Effect   = "Allow"
            Resource = "*"
          },
          # Add other permissions as needed for more complex products
        ]
      })
    }

    data "aws_caller_identity" "current" {}
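
Because the product is launched as a CloudFormation stack, the launch role usually also needs to manage that stack and read the product template. The sketch below adds a second inline policy for that purpose. It is an assumption-laden starting point rather than a complete policy: the policy name and the Lambda ARN are placeholders, and the exact action list depends on what your template does.

```hcl
# Extra permissions the launch role typically needs for CloudFormation-based products.
resource "aws_iam_role_policy" "servicecatalog_launch_cfn" {
  name = "ServiceCatalogLaunchCloudFormation" # hypothetical name
  role = aws_iam_role.servicecatalog_launch_role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid    = "ManageProductStacks"
        Effect = "Allow"
        Action = [
          "cloudformation:CreateStack",
          "cloudformation:UpdateStack",
          "cloudformation:DeleteStack",
          "cloudformation:DescribeStacks",
          "cloudformation:DescribeStackEvents",
          "cloudformation:GetTemplateSummary",
          "cloudformation:ValidateTemplate",
          "cloudformation:SetStackPolicy",
        ]
        Resource = "*"
      },
      {
        Sid      = "ReadProductTemplate"
        Effect   = "Allow"
        Action   = ["s3:GetObject"]
        Resource = "*"
      },
      {
        Sid      = "InvokeIntegrationLambda"
        Effect   = "Allow"
        Action   = ["lambda:InvokeFunction"]
        Resource = "arn:aws:lambda:us-east-1:123456789012:function:tfc-run-trigger" # placeholder ARN
      },
    ]
  })
}
```

In production, the `Resource = "*"` entries would normally be narrowed to the specific stacks and template bucket in use.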

Creating an AWS Service Catalog Product in Terraform Cloud

Now, let’s define the AWS Service Catalog product using Terraform. This product will point to our S3 bucket module.

Add the following to my-service-catalog-products/main.tf:

    # Conceptual CloudFormation template that wraps a Terraform Cloud run.
    # The custom resource is backed by a Lambda function that you must supply (see the note below).
    locals {
      s3_product_template = jsonencode({
        AWSTemplateFormatVersion = "2010-09-09"
        Description              = "AWS Service Catalog product for a Standard S3 Bucket (managed by Terraform Cloud)"

        Parameters = {
          BucketName = {
            Type        = "String"
            Description = "Desired name for the S3 bucket (must be globally unique)."
          }
          BucketAcl = {
            Type        = "String"
            Description = "Canned ACL to apply to the S3 bucket. (e.g., private, public-read)"
            Default     = "private"
          }
          TagsJson = {
            Type        = "String"
            Description = "JSON string of tags for the S3 bucket (e.g., {\"Project\":\"MyProject\"})"
            Default     = "{}"
          }
        }

        Resources = {
          TerraformProvisioner = {
            Type = "Custom::TerraformCloudRun" # Illustrative name for a Lambda-backed custom resource
            Properties = {
              ServiceToken = "arn:aws:lambda:us-east-1:123456789012:function:tfc-run-trigger" # Placeholder: ARN of your integration Lambda
              WorkspaceId  = "ws-xxxxxxxxxxxxxxxxx" # Placeholder: You would dynamically get this or embed it from TFC API
              BucketName   = { Ref = "BucketName" }
              BucketAcl    = { Ref = "BucketAcl" }
              TagsJson     = { Ref = "TagsJson" }
              # ... other Terraform variables passed as parameters
            }
          }
        }

        Outputs = {
          BucketId = {
            Description = "The name of the provisioned S3 bucket."
            Value       = { "Fn::GetAtt" = ["TerraformProvisioner", "BucketId"] }
          }
          BucketArn = {
            Description = "The ARN of the provisioned S3 bucket."
            Value       = { "Fn::GetAtt" = ["TerraformProvisioner", "BucketArn"] }
          }
        }
      })
    }

    # Service Catalog loads product templates from S3, so upload the rendered template first.
    resource "aws_s3_object" "s3_product_template" {
      bucket  = "your-template-bucket" # Placeholder: an existing bucket that the launch role can read
      key     = "service-catalog/s3-bucket-product/v1.0.json"
      content = local.s3_product_template
    }

    resource "aws_servicecatalog_product" "s3_bucket_product" {
      name          = "Standard S3 Bucket"
      owner         = "IT Operations"
      type          = "CLOUD_FORMATION_TEMPLATE" # The product is a CloudFormation wrapper around a Terraform Cloud run
      description   = "Provisions a private S3 bucket with public access blocked."
      distributor   = "Cloud Engineering"
      support_email = "cloud-support@example.com"
      support_url   = "https://wiki.example.com/s3-bucket-product"

      provisioning_artifact_parameters {
        name         = "v1.0"
        description  = "Initial version of the S3 Bucket product."
        type         = "CLOUD_FORMATION_TEMPLATE"
        template_url = "https://${aws_s3_object.s3_product_template.bucket}.s3.amazonaws.com/${aws_s3_object.s3_product_template.key}"
      }
    }

Important note on the custom resource:

The above code snippet illustrates the *concept* of how Service Catalog hands off to Terraform Cloud. The AWS provider’s `provisioning_artifact_parameters` block loads its template from S3 through `template_url`, which is why the CloudFormation template is uploaded as an S3 object first. That template contains a Lambda-backed custom resource (the type name `Custom::TerraformCloudRun` is illustrative) that calls the Terraform Cloud API to perform the equivalent of `terraform apply`. AWS provides official documentation and reference architectures for integrating with Terraform Open Source, and the same pattern applies to Terraform Cloud via its API. This typically involves:

- A CloudFormation template that defines the parameters.

- A Lambda function that receives these parameters, interacts with the Terraform Cloud API (e.g., by creating a new run for a specific workspace, passing variables), and reports back the status to CloudFormation.

The Lambda function itself is not shown here. In a full implementation, you would deploy it and replace the placeholder `ServiceToken`, `WorkspaceId`, and template bucket values with your own. Service Catalog also defines native product types for Terraform (`TERRAFORM_OPEN_SOURCE`, `EXTERNAL`, and `TERRAFORM_CLOUD`), each of which relies on a provisioning engine deployed in your account; this walkthrough sticks to the CloudFormation wrapper.

Creating an AWS Service Catalog Portfolio

Next, define a portfolio to hold our S3 product.

Add the following to my-service-catalog-products/main.tf:

    resource "aws_servicecatalog_portfolio" "dev_portfolio" {
      name          = "Dev Team Portfolio"
      description   = "Products approved for Development teams"
      provider_name = "Cloud Engineering"
    }

Associating Product with Portfolio

Link the product to the portfolio.

Add the following to my-service-catalog-products/main.tf:

    resource "aws_servicecatalog_portfolio_product_association" "s3_product_assoc" {
      portfolio_id = aws_servicecatalog_portfolio.dev_portfolio.id
      product_id   = aws_servicecatalog_product.s3_bucket_product.id
    }

Granting Launch Permissions

This is critical for security. We’ll use a Launch Constraint to specify the IAM role AWS Service Catalog will assume to provision the S3 bucket.

Add the following to my-service-catalog-products/main.tf:

    # Note: no aws_servicecatalog_service_action is needed to launch the product.
    # Service actions wrap AWS Systems Manager documents for post-provisioning
    # operations; they do not trigger Terraform Cloud runs. The Lambda-backed
    # CloudFormation template handles that part.

    # Launch constraint: Service Catalog assumes this role when provisioning the product.
    resource "aws_servicecatalog_constraint" "s3_launch_constraint" {
      description  = "Launch constraint for S3 Bucket product"
      portfolio_id = aws_servicecatalog_portfolio.dev_portfolio.id
      product_id   = aws_servicecatalog_product.s3_bucket_product.id
      type         = "LAUNCH"

      parameters = jsonencode({
        RoleArn = aws_iam_role.servicecatalog_launch_role.arn
      })

      # The product must be associated with the portfolio before a constraint can be created.
      depends_on = [aws_servicecatalog_portfolio_product_association.s3_product_assoc]
    }

    # Grant end-user access to the portfolio by associating an IAM principal with it
    resource "aws_servicecatalog_principal_portfolio_association" "dev_portfolio_access" {
      portfolio_id  = aws_servicecatalog_portfolio.dev_portfolio.id
      principal_arn = aws_iam_role.end_user_role.arn
    }

    # Example of an IAM role for an end-user to access the portfolio and launch products
    resource "aws_iam_role" "end_user_role" {
      name = "ServiceCatalogEndUserRole"

      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Action = "sts:AssumeRole"
            Effect = "Allow"
            Principal = {
              AWS = data.aws_caller_identity.current.account_id
            }
          }
        ]
      })
    }

    resource "aws_iam_role_policy_attachment" "end_user_sc_access" {
      role       = aws_iam_role.end_user_role.name
      policy_arn = "arn:aws:iam::aws:policy/AWSServiceCatalogEndUserFullAccess" # Use full access for demo, restrict in production
    }

Commit these Terraform files to your VCS repository. Terraform Cloud, configured with the correct workspace and VCS integration, will detect the changes and initiate a plan. Once approved and applied, your AWS Service Catalog will be populated with the defined product and portfolio.

When an end-user navigates to the AWS Service Catalog console, they will see the “Dev Team Portfolio” and the “Standard S3 Bucket” product. When they provision it, the Service Catalog will trigger the underlying CloudFormation stack, which in turn calls Terraform Cloud (via the custom resource/Lambda function) to execute the Terraform configuration defined in your S3 module, provisioning the S3 bucket.

Advanced Scenarios and Best Practices

Versioning Products

Infrastructure evolves. AWS Service Catalog and Terraform Cloud handle this gracefully:

- Terraform Cloud Modules: Maintain different versions of your Terraform modules in a private module registry or by tagging your Git repository.

- Service Catalog Provisioning Artifacts: When your Terraform module changes, create a new provisioning artifact (e.g., v2.0) for your AWS Service Catalog product. This allows users to choose which version to deploy and enables seamless updates of existing provisioned products.
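
A new product version can be published with the `aws_servicecatalog_provisioning_artifact` resource. The following is a minimal sketch, assuming the v2.0 template has already been uploaded to S3; the template URL is a placeholder.

```hcl
# Publish version 2.0 of the existing S3 bucket product.
resource "aws_servicecatalog_provisioning_artifact" "s3_bucket_v2" {
  product_id   = aws_servicecatalog_product.s3_bucket_product.id
  name         = "v2.0"
  description  = "Second version of the S3 Bucket product."
  type         = "CLOUD_FORMATION_TEMPLATE"
  template_url = "https://your-template-bucket.s3.amazonaws.com/service-catalog/s3-bucket-product/v2.0.json" # placeholder URL
}
```

Existing provisioned products stay on their current version until a user chooses to update them to the new artifact.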

Using Launch Constraints

Always use launch constraints. This is a fundamental security practice. The IAM role specified in the launch constraint should have only the minimum necessary permissions to create the resources defined in your product’s Terraform configuration. This ensures that end-users, who only have permission to provision a product, cannot directly perform privileged actions in AWS.
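
Alongside the launch constraint, a template constraint can narrow what users are allowed to enter at launch time. The sketch below assumes the product exposes a `BucketAcl` parameter, as in the walkthrough above, and only permits the `private` ACL; the rule and resource names are hypothetical.

```hcl
# Template constraint: reject any launch where BucketAcl is not "private".
resource "aws_servicecatalog_constraint" "s3_private_only" {
  description  = "Only allow the private canned ACL"
  portfolio_id = aws_servicecatalog_portfolio.dev_portfolio.id
  product_id   = aws_servicecatalog_product.s3_bucket_product.id
  type         = "TEMPLATE"

  parameters = jsonencode({
    Rules = {
      PrivateAclOnly = {
        Assertions = [
          {
            Assert            = { "Fn::Equals" = [{ Ref = "BucketAcl" }, "private"] }
            AssertDescription = "BucketAcl must be private."
          }
        ]
      }
    }
  })

  # Constraints can only be created once the product is associated with the portfolio.
  depends_on = [aws_servicecatalog_portfolio_product_association.s3_product_assoc]
}
```

Template constraints are evaluated by Service Catalog against CloudFormation parameters, so they complement validation blocks in the Terraform module rather than replacing them.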

Parameterization with Terraform Variables

Leverage Terraform variables to make your Service Catalog products flexible. For example, the S3 bucket product had `bucket_name` and `acl` as variables. These translate into input parameters that users see when provisioning the product in AWS Service Catalog. Carefully define variable types, descriptions, and validations to guide users.
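
As one example of guiding users through validation, the `bucket_name` declaration in `variables.tf` could be tightened along the following lines. This is a sketch, not a full implementation of the S3 naming rules: the regular expression only checks length and the allowed character set.

```hcl
# Stricter replacement for the bucket_name variable in s3-bucket-product/variables.tf.
variable "bucket_name" {
  description = "Desired name of the S3 bucket (3-63 characters; lowercase letters, digits, dots, and hyphens)."
  type        = string

  validation {
    # Must start and end with a letter or digit, with 1-61 allowed characters in between.
    condition     = can(regex("^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$", var.bucket_name))
    error_message = "The bucket name must be 3-63 characters of lowercase letters, digits, dots, or hyphens, and must start and end with a letter or digit."
  }
}
```

A failed validation stops the Terraform run at plan time, before any AWS API call is made.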

Integrating with CI/CD Pipelines

Terraform Cloud is designed for CI/CD integration:

- VCS-Driven Workflow: Any pull request or merge to your main branch (connected to a Terraform Cloud workspace) can trigger a `terraform plan` for review. Merges can automatically trigger `terraform apply`.

- Terraform Cloud API: For more complex scenarios, use the Terraform Cloud API to programmatically trigger runs, check statuses, and manage workspaces, allowing custom CI/CD pipelines to manage your Service Catalog products and their underlying Terraform code.
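
The VCS-driven workflow can itself be defined as code. The sketch below uses HashiCorp’s `tfe` provider to create a workspace connected to a repository, assuming a VCS OAuth connection already exists in the organization. The repository identifier and the `vcs_oauth_token_id` variable are hypothetical.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Hypothetical input: ID of an existing VCS OAuth token in the organization.
variable "vcs_oauth_token_id" {
  type = string
}

resource "tfe_workspace" "service_catalog_products" {
  name         = "service-catalog-products-workspace"
  organization = "your-tfc-org-name"
  auto_apply   = false # keep a manual approval step between plan and apply

  # Runs are triggered by commits to this branch.
  vcs_repo {
    identifier     = "your-github-org/my-service-catalog-products" # hypothetical repository
    branch         = "main"
    oauth_token_id = var.vcs_oauth_token_id
  }
}
```

Setting `auto_apply = true` would apply merged changes without a manual confirmation, which may suit lower environments better than production.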

Tagging and Cost Allocation

Implement a robust tagging strategy. Use Service Catalog TagOption constraints to automatically apply standardized tags (e.g., CostCenter, Project, Owner) to all resources provisioned through Service Catalog. Combine this with Terraform’s ability to propagate tags throughout resources to ensure comprehensive cost allocation and resource management.

Example TagOption Constraint (in `main.tf`):

    resource "aws_servicecatalog_tag_option" "project_tag" {
      key   = "Project"
      value = "MyCloudProject"
    }

    # The AWS provider resource for this association is aws_servicecatalog_tag_option_resource_association.
    resource "aws_servicecatalog_tag_option_resource_association" "project_tag_assoc" {
      tag_option_id = aws_servicecatalog_tag_option.project_tag.id
      resource_id   = aws_servicecatalog_portfolio.dev_portfolio.id # Associate with portfolio
    }
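
On the Terraform side, tag propagation can be handled with the AWS provider’s `default_tags` block. The sketch below would replace the existing `provider "aws"` block in whichever configuration creates the resources; the tag values are hypothetical.

```hcl
provider "aws" {
  region = "us-east-1"

  # Applied to every taggable resource created through this provider.
  default_tags {
    tags = {
      ManagedBy  = "Terraform"
      CostCenter = "CC-0000" # hypothetical value
    }
  }
}
```

Tags set directly on a resource take precedence over `default_tags` when the keys collide.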

Troubleshooting Common Issues

IAM Permissions

This is the most frequent source of errors. Ensure that:

- The Terraform Cloud user/role has permissions to create/manage Service Catalog, IAM roles, and all target resources.

- The Service Catalog Launch Role has permissions for all actions required by your product’s Terraform configuration (e.g., `s3:CreateBucket`, `ec2:RunInstances`).

- End-users have `servicecatalog:ProvisionProduct` and necessary `servicecatalog:List*` permissions.

Always review AWS CloudTrail logs and Terraform Cloud run logs for specific permission denied errors.

Product Provisioning Failures

If a provisioned product fails, check:

- Terraform Cloud Run Logs: Access the specific run in Terraform Cloud that was triggered by Service Catalog. This will show `terraform plan` and `terraform apply` output, including any errors.

- AWS CloudFormation Stack Events: In the AWS console, navigate to CloudFormation. Each provisioned product creates a stack. The events tab will show the failure reason, often indicating issues with the custom resource or the Lambda function integrating with Terraform Cloud.

- Input Parameters: Verify that the parameters passed from Service Catalog to your Terraform configuration are correct and in the expected format.

Terraform State Management

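Ensure that each Service Catalog provisioned product corresponds to a unique and isolated Terraform state file. Terraform Cloud workspaces provide this isolation only when each provisioned product maps to its own workspace; if every launch targets the same workspace, each run overwrites the previous product’s state. Avoid sharing state files between different provisioned products, as this can lead to conflicts and unexpected changes.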

Frequently Asked Questions

What is the difference between AWS Service Catalog and AWS CloudFormation?

AWS CloudFormation is an Infrastructure as Code (IaC) service for defining and provisioning AWS infrastructure resources using templates. AWS Service Catalog is a service that allows organizations to create and manage catalogs of IT services (which can be defined by CloudFormation templates or Terraform configurations) approved for use on AWS. Service Catalog sits on top of IaC tools like CloudFormation or Terraform to provide governance, self-service, and standardization for end-users.

Can I use Terraform Open Source directly with AWS Service Catalog without Terraform Cloud?

Yes, it’s possible, but it requires more effort to manage state, provide execution environments, and integrate with Service Catalog. You would typically use a custom resource in a CloudFormation template that invokes a Lambda function. This Lambda function would then run Terraform commands (e.g., using a custom-built container with Terraform) and manage its state (e.g., in S3). Terraform Cloud simplifies this significantly by providing a managed service for remote operations, state, and VCS integration.

How does AWS Service Catalog handle updates to provisioned products?

When you update your Terraform configuration (e.g., create a new version of your S3 bucket module), you create a new “provisioning artifact” (version) for your AWS Service Catalog product. End-users can then update their existing provisioned products to this new version directly from the Service Catalog UI. Service Catalog will trigger the underlying update process via CloudFormation/Terraform Cloud.

What are the security best practices when integrating Service Catalog with Terraform Cloud?

Key best practices include:

- Least Privilege: Ensure the Service Catalog Launch Role has only the minimum necessary permissions.

- Secrets Management: Use AWS Secrets Manager or Parameter Store for any sensitive data, and reference them in your Terraform configuration. Do not hardcode secrets.

- VCS Security: Protect your Terraform code repository with branch protections and code reviews.

- Terraform Cloud Permissions: Implement RBAC within Terraform Cloud.
