Infrastructure as Code (IaC) has become an indispensable practice for managing and deploying cloud infrastructure efficiently and reliably. Terraform, HashiCorp’s popular IaC tool, empowers developers and DevOps engineers to define and provision infrastructure resources in a declarative manner. Integrating Terraform into a robust CI/CD pipeline is crucial for automating deployments, ensuring consistency, and reducing human error.

This guide covers implementing Terraform CI/CD and testing on AWS, using the new Terraform Test Framework to strengthen your infrastructure management workflow. We’ll go from setting up a basic pipeline to more advanced testing strategies, so you can build a reliable and efficient infrastructure deployment process.

Understanding Terraform CI/CD

Continuous Integration/Continuous Delivery (CI/CD) is a set of practices that automate the process of building, testing, and deploying software. When applied to infrastructure, CI/CD ensures that infrastructure changes are deployed consistently and reliably, minimizing the risk of errors and downtime. A typical Terraform CI/CD pipeline involves the following stages:

Key Stages of a Terraform CI/CD Pipeline:

- Code Commit: Developers commit Terraform configuration code to a version control system (e.g., Git).

- Build: The CI system detects code changes and starts a build, which typically checks formatting with terraform fmt -check and validates the configuration with terraform validate.

- Test: Automated tests are executed to validate the Terraform configuration. This is where the new Terraform Test Framework plays a vital role.

- Plan: Terraform generates an execution plan, outlining the changes that will be made to the infrastructure.

- Apply: Terraform applies the changes to the AWS infrastructure, provisioning or modifying resources.

- Destroy (Optional): In certain scenarios (e.g., testing environments), a destroy step can automatically tear down the infrastructure after testing.
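The stages above can be sketched as an ordered list of Terraform CLI invocations. This is only an illustration: the `Stage` type and `PipelineStages` function are hypothetical names, the code commit stage is treated as the trigger rather than a command, and the exact flags your pipeline needs may differ.

```go
package main

import "fmt"

// Stage is one pipeline stage and the Terraform commands it runs, in order.
// (Hypothetical type, introduced for this sketch only.)
type Stage struct {
	Name     string
	Commands []string
}

// PipelineStages returns the stages in execution order. The destroy stage is
// included only for ephemeral environments such as short-lived test stacks.
func PipelineStages(ephemeral bool) []Stage {
	stages := []Stage{
		{Name: "build", Commands: []string{
			"terraform init -input=false",
			"terraform fmt -check",
			"terraform validate",
		}},
		{Name: "test", Commands: []string{"terraform test"}},
		{Name: "plan", Commands: []string{"terraform plan -input=false -out=tfplan"}},
		{Name: "apply", Commands: []string{"terraform apply -input=false tfplan"}},
	}
	if ephemeral {
		stages = append(stages, Stage{
			Name:     "destroy",
			Commands: []string{"terraform destroy -auto-approve"},
		})
	}
	return stages
}

func main() {
	// Print the full sequence for an ephemeral test environment.
	for i, s := range PipelineStages(true) {
		fmt.Printf("%d. %s\n", i+1, s.Name)
		for _, c := range s.Commands {
			fmt.Printf("   %s\n", c)
		}
	}
}
```

Keeping the sequence in one place makes the ordering explicit: tests run before the plan is produced, and the apply step only ever consumes the saved plan file.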

Leveraging the New Terraform Test Framework

The Terraform Test Framework lets you write automated tests for your Terraform configurations. The native framework, available since Terraform 1.6, runs test files ending in .tftest.hcl through the terraform test command. Testing the correctness and behavior of your infrastructure code before deployment reduces the risk of errors in production. It enables you to:

Benefits of the Terraform Test Framework:

- Verify Infrastructure State: Assert the desired state of your infrastructure after applying your Terraform code.

- Test Configuration Changes: Ensure that changes to your Terraform configurations have the expected effect.

- Improve Code Quality: Encourage writing more robust, maintainable, and testable Terraform code.

- Reduce Deployment Risks: Identify and fix potential issues early in the development cycle, reducing the chance of errors in production.

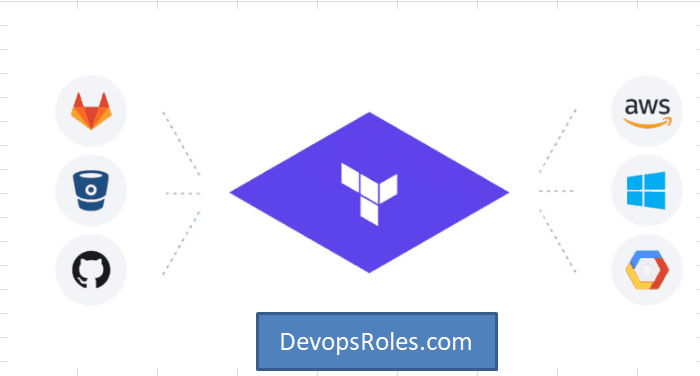

Integrating Terraform with AWS and CI/CD Tools

To implement Terraform CI/CD on AWS, you’ll typically use a CI/CD tool such as Jenkins, GitHub Actions, GitLab CI, or AWS CodePipeline. Each of these tools can run Terraform CLI commands as pipeline steps, so the init, plan, and apply sequence runs automatically on every change.

Example: Setting up a basic Terraform CI/CD pipeline with GitHub Actions:

A simplified GitHub Actions workflow could look like this:

    name: Terraform AWS Deployment

    on: push

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v3

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v2

          - name: Terraform Init
            run: terraform init

          - name: Terraform Plan
            run: terraform plan -out=tfplan

          - name: Terraform Apply
            run: terraform apply tfplan

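This workflow checks out the code, initializes Terraform, creates a plan, and applies the changes. It assumes AWS credentials are already available to the job, and because it triggers on every push, it would apply changes from any branch. For production environments, restrict the apply step to your main branch and add a testing stage using the Terraform Test Framework.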

Implementing Terraform Testing with Practical Examples

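Let’s explore practical examples of automated Terraform tests for various scenarios. The examples below are written in Go and use the acceptance-test helpers in the helper/resource package of terraform-plugin-sdk. These tests run only when the TF_ACC environment variable is set, and they create real AWS resources, so run them against a sandbox account.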

Example 1: Basic Resource Existence Test

This test applies the Terraform configuration and verifies that the EC2 instance exists and reports the running state:

    package main

    import (
        "testing"

        "github.com/hashicorp/terraform-plugin-sdk/v2/helper/resource"
    )

    // TestEC2InstanceExists applies the configuration and checks that the
    // instance reports the "running" state.
    func TestEC2InstanceExists(t *testing.T) {
        resource.Test(t, resource.TestCase{
            PreCheck:  func() { /* ... */ },
            Providers: providers(), // helper not shown: returns the providers under test
            Steps: []resource.TestStep{
                {
                    Config: `
                        resource "aws_instance" "example" {
                          ami           = "ami-0c55b31ad2299a701" # Replace with your AMI ID
                          instance_type = "t2.micro"
                        }
                    `,
                    Check: resource.ComposeTestCheckFunc(
                        resource.TestCheckResourceAttr("aws_instance.example", "instance_state", "running"),
                    ),
                },
            },
        })
    }

Example 2: Testing Output Values

This example tests whether the Terraform output value is set and matches the expected format:

    // Requires the "regexp" import in addition to "testing" and helper/resource.
    func TestOutputValue(t *testing.T) {
        resource.Test(t, resource.TestCase{
            PreCheck:  func() { /* pre-check logic */ },
            Providers: providers(), // helper not shown: returns the providers under test
            Steps: []resource.TestStep{
                {
                    Config: `
                        resource "aws_instance" "example" {
                          ami           = "ami-0c55b31ad2299a701"
                          instance_type = "t2.micro"
                        }

                        output "instance_id" {
                          value = aws_instance.example.id
                        }
                    `,
                    Check: resource.ComposeTestCheckFunc(
                        // The output must be present and look like an instance ID.
                        resource.TestMatchOutput("instance_id", regexp.MustCompile(`^i-[0-9a-f]+$`)),
                    ),
                },
            },
        })
    }
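Output values can also be checked outside the Go test harness, for example in a CI step that reads the JSON printed by `terraform output -json`. The sketch below assumes that JSON is passed in as bytes; `StringOutput` is a hypothetical helper name and the sample instance ID is made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// outputValue mirrors the part of one `terraform output -json` entry that
// this sketch needs; other fields ("sensitive", "type") are ignored.
type outputValue struct {
	Value json.RawMessage `json:"value"`
}

// StringOutput returns the named output as a string. It fails if the output
// is missing, is not a string, or is empty. (Hypothetical helper.)
func StringOutput(raw []byte, name string) (string, error) {
	var outputs map[string]outputValue
	if err := json.Unmarshal(raw, &outputs); err != nil {
		return "", fmt.Errorf("parsing outputs: %w", err)
	}
	out, ok := outputs[name]
	if !ok {
		return "", fmt.Errorf("output %q not found", name)
	}
	var s string
	if err := json.Unmarshal(out.Value, &s); err != nil {
		return "", fmt.Errorf("output %q is not a string: %w", name, err)
	}
	if s == "" {
		return "", fmt.Errorf("output %q is empty", name)
	}
	return s, nil
}

func main() {
	// Sample shaped like `terraform output -json`; the ID is made up.
	raw := []byte(`{"instance_id": {"sensitive": false, "type": "string", "value": "i-0123456789abcdef0"}}`)
	id, err := StringOutput(raw, "instance_id")
	fmt.Println(id, err)
}
```

Parsing the JSON form keeps the check independent of the human-readable output format, which is not meant for machine consumption.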

Example 3: Advanced Testing with Custom Assertions

For more complex scenarios, you can create custom assertions to check specific aspects of your infrastructure.

    // Imports needed: "fmt", "strings", "testing",
    // "github.com/hashicorp/terraform-plugin-sdk/v2/helper/resource",
    // "github.com/hashicorp/terraform-plugin-sdk/v2/terraform"

    func TestCustomAssertion(t *testing.T) {
        resource.Test(t, resource.TestCase{
            PreCheck:  func() { /* validate preconditions */ },
            Providers: providers(), // helper not shown: returns the providers under test
            Steps: []resource.TestStep{
                {
                    Config: `
                        resource "aws_instance" "example" {
                          ami           = "ami-0c55b31ad2299a701"
                          instance_type = "t2.micro"
                        }
                    `,
                    Check: resource.ComposeTestCheckFunc(
                        // A custom check is any function that inspects the state
                        // and returns an error on failure.
                        func(s *terraform.State) error {
                            rs, ok := s.RootModule().Resources["aws_instance.example"]
                            if !ok {
                                return fmt.Errorf("resource aws_instance.example not found")
                            }
                            if rs.Primary.ID == "" {
                                return fmt.Errorf("expected instance ID to be set")
                            }
                            // Custom assertion logic: for example, check the ID prefix.
                            if !strings.HasPrefix(rs.Primary.ID, "i-") {
                                return fmt.Errorf("instance ID %q does not look like a valid EC2 ID", rs.Primary.ID)
                            }
                            // Optional: perform DB lookup, external API call, etc.
                            // e.g., validate the instance ID exists in a mock service
                            return nil
                        },
                    ),
                },
            },
        })
    }
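Custom assertion logic is easier to maintain when it lives in a small pure function that can be unit-tested without provisioning anything. The sketch below pulls the ID check out into a hypothetical `ValidateInstanceID` function. The pattern is an assumption based on the common EC2 ID shapes (`i-` followed by 8 or 17 lowercase hex characters); adjust it if your IDs differ.

```go
package main

import (
	"fmt"
	"regexp"
)

// ec2InstanceIDPattern is an assumed format: "i-" followed by either 8 or 17
// lowercase hexadecimal characters.
var ec2InstanceIDPattern = regexp.MustCompile(`^i-(?:[0-9a-f]{8}|[0-9a-f]{17})$`)

// ValidateInstanceID returns an error if id is empty or does not match the
// assumed EC2 instance ID format. (Hypothetical helper.)
func ValidateInstanceID(id string) error {
	if id == "" {
		return fmt.Errorf("expected instance ID to be set")
	}
	if !ec2InstanceIDPattern.MatchString(id) {
		return fmt.Errorf("instance ID %q does not look like a valid EC2 ID", id)
	}
	return nil
}

func main() {
	// Try a long-form ID, a short-form ID, an empty string, and a string
	// that merely starts with the letter "i".
	for _, id := range []string{"i-0123456789abcdef0", "i-1a2b3c4d", "", "instance-1"} {
		fmt.Printf("%q -> %v\n", id, ValidateInstanceID(id))
	}
}
```

Inside a check function, the call would be `ValidateInstanceID(rs.Primary.ID)`. A value such as `instance-1` passes a first-character test but is rejected here, which is the kind of edge case a focused unit test can pin down cheaply.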

Frequently Asked Questions (FAQ)

Q1: What are the best practices for writing Terraform tests?

Best practices include writing small, focused tests, using clear and descriptive names, and organizing tests into logical groups. Prioritize testing critical infrastructure components and avoid over-testing.

Q2: How can I integrate the Terraform Test Framework into my existing CI/CD pipeline?

You can integrate the tests by adding a testing stage to your CI/CD workflow that runs before terraform apply. Both terraform test and go test exit with a non-zero status when a test fails, so the CI/CD tool can treat a failed test run as a failed job and halt the deployment.

Q3: What are some common testing pitfalls to avoid?

Common pitfalls include writing tests that are too complex, not adequately covering edge cases, and neglecting to test dependencies. Make sure your tests cover both the happy path and failure scenarios.

Q4: Can I use the Terraform Test Framework for testing resources outside of AWS?

Yes, the Terraform Test Framework is not limited to AWS. It can be used to test configurations for various cloud providers and on-premise infrastructure.

Conclusion

In the era of modern DevOps, infrastructure deployment is no longer a manual, isolated task; it has evolved into an automated, testable, and reusable process. This article has shown how the combination of Terraform, CI/CD pipelines, and the Terraform Test Framework can improve the reliability, efficiency, and quality of infrastructure management on AWS.

By setting up a professional CI/CD pipeline that integrates essential steps such as terraform init, plan, apply, and, crucially, automated testing written in Go, you can:

- Minimize deployment risks,

- Catch errors early in the development lifecycle, and

- Ensure infrastructure configurations remain under strict control.

Moreover, the Terraform Test Framework offers more than just basic resource checks (e.g., EC2 instances or output values). It empowers teams to create custom test assertions for complex logic, marking a major advancement toward treating infrastructure as fully testable software.

If you’re aiming to build a professional, safe, and verifiable deployment workflow, the integration of Terraform, CI/CD, and the Test Framework is your strategic foundation. It’s not just a DevOps toolchain; it’s a roadmap to the future of resilient and scalable infrastructure operations.