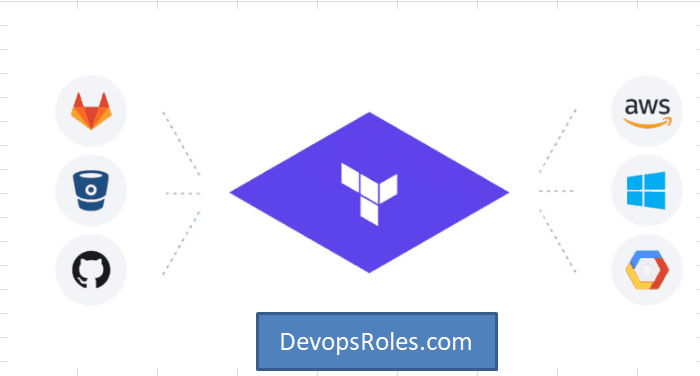

Managing infrastructure as code (IaC) with Terraform is a cornerstone of modern DevOps practices. However, as your infrastructure grows in complexity, so does the need for robust state management. This is where the concept of Terraform Remote State becomes critical. This article dives deep into leveraging AWS S3 and DynamoDB for storing your Terraform state, ensuring security, scalability, and collaboration across teams. We will explore the intricacies of configuring and managing your Terraform Remote State, enabling you to build and deploy infrastructure efficiently and reliably.


Understanding Terraform State

Terraform uses a state file (terraform.tfstate by default) to map your configuration to the real resources it manages. The file records every managed resource, including its attributes and dependencies. A local state file is adequate for one person working on a small project. It becomes a problem as projects and teams grow, because the file lives on a single machine, can be overwritten by concurrent runs, and may contain sensitive values. This is where a Terraform Remote State backend comes into play. Storing your state remotely offers significant advantages, including:

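- Collaboration: Every team member runs Terraform against the same shared state, and locking stops two runs from modifying it at once.

- Version Control: With a versioned backend (for example, an S3 bucket with versioning enabled), you can track changes and roll back to a previous state if needed.

- Scalability: The state no longer depends on any single workstation, and one backend can hold many state files for large and complex infrastructures.

- Security: Access to the state can be restricted and audited in one place. This matters because state files can contain secrets.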

Choosing a Remote Backend: AWS S3 and DynamoDB

AWS S3 (Simple Storage Service) and DynamoDB (NoSQL database) are a powerful combination for managing Terraform Remote State. S3 provides durable and scalable object storage, while DynamoDB ensures efficient state locking, preventing concurrent modifications and ensuring data consistency. This pairing is a popular and reliable choice for many organizations.

S3: Object Storage for State Data

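S3 acts as the primary storage location for your Terraform state file. Its durability and scalability make it well suited to state files that grow along with your infrastructure.

S3 does not keep earlier copies of an object by default. Each write replaces the state file unless bucket versioning is enabled. With versioning on, every write creates a new object version that you can restore. S3 on its own also does not serialize Terraform runs, which is why this setup pairs it with DynamoDB for locking.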

DynamoDB: Locking Mechanism for Concurrent Access

DynamoDB serves as a locking mechanism that protects the Terraform state file against concurrent modifications. Some commands lock state; by default this includes plan and apply. When one of them runs, Terraform first writes a lock item to the table. If another run already holds the lock, the second run stops with a lock error instead of proceeding. This prevents conflicts when multiple team members or CI pipelines work on the same infrastructure. DynamoDB's high availability and low latency keep lock acquisition and release fast and reliable. Without a locking mechanism like this, concurrent writes can corrupt your state file in S3.

Configuring Terraform Remote State with S3 and DynamoDB

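You configure the backend in a terraform block inside one of your .tf files, for example main.tf or a dedicated backend.tf. It cannot go in terraform.tfvars. Backend settings are read during terraform init and cannot reference input variables. The following configuration shows how to use S3 and DynamoDB: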

```hcl
terraform {
  backend "s3" {
    bucket         = "your-terraform-state-bucket"
    key            = "path/to/your/state/file.tfstate"
    region         = "your-aws-region"
    dynamodb_table = "your-dynamodb-lock-table"
  }
}
```

Replace the placeholders:

- your-terraform-state-bucket: The name of your S3 bucket.

- path/to/your/state/file.tfstate: The path within the S3 bucket where the state file will be stored.

- your-aws-region: The AWS region where your S3 bucket and DynamoDB table reside.

- your-dynamodb-lock-table: The name of your DynamoDB table used for locking.
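You may prefer not to hard-code these values in main.tf, for example to reuse one configuration across accounts. In that case, Terraform also accepts a partial backend configuration: you leave the backend block empty and supply the settings when you run terraform init. A minimal sketch follows. The file name backend.hcl is a hypothetical choice; any file name works.

```hcl
# main.tf: declare the S3 backend but leave its settings empty.
terraform {
  backend "s3" {}
}
```

```hcl
# backend.hcl (hypothetical file name): settings passed at init time.
bucket         = "your-terraform-state-bucket"
key            = "path/to/your/state/file.tfstate"
region         = "your-aws-region"
dynamodb_table = "your-dynamodb-lock-table"
```

Initialize with terraform init -backend-config=backend.hcl. Terraform merges these values into the empty backend block.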

The backend does not create the bucket or the lock table for you. Before running terraform init with this configuration, ensure you have:

- An AWS account with appropriate permissions.

- An S3 bucket created in the specified region.

- A DynamoDB table whose partition key is a String attribute named exactly LockID (no sort key or other attributes are needed). Ensure the IAM identity Terraform runs as has the necessary permissions to access this table.
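You can create the bucket and table with Terraform itself, using a small separate bootstrap configuration that keeps its own state locally. The backend cannot store state in resources that do not exist yet. A minimal sketch follows. It assumes AWS provider version 4 or later and reuses the placeholder names from above.

```hcl
# Bootstrap configuration: apply once with local state, before adding the
# backend "s3" block to your main configuration.
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0"
    }
  }
}

provider "aws" {
  region = "your-aws-region"
}

# Bucket that will hold the state files.
resource "aws_s3_bucket" "terraform_state" {
  bucket = "your-terraform-state-bucket"

  # Guard against destroying the bucket (and every state file in it) by accident.
  lifecycle {
    prevent_destroy = true
  }
}

# Lock table: the S3 backend expects a String partition key named LockID.
resource "aws_dynamodb_table" "terraform_lock" {
  name         = "your-dynamodb-lock-table"
  billing_mode = "PAY_PER_REQUEST" # on-demand, no capacity to provision
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S" # S = string
  }
}
```

On-demand billing suits a lock table, since each Terraform run performs only a handful of small reads and writes against it.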

Advanced Configuration and Best Practices

Optimizing your Terraform Remote State setup involves considering several best practices:

IAM Roles and Permissions

Restrict access to your S3 bucket and DynamoDB table to only authorized users and services. This is paramount for security. Create an IAM role specifically for Terraform, granting it only the necessary permissions to read and write to the state backend. Avoid granting overly permissive roles.
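A least-privilege policy for the state backend might look like the sketch below. It follows the permissions listed in the Terraform S3 backend documentation; verify them against the docs for your Terraform version. Those permissions are:

- s3:ListBucket on the bucket
- s3:GetObject and s3:PutObject on the state key
- DescribeTable, GetItem, PutItem and DeleteItem on the lock table

The account ID 123456789012 and the policy name are hypothetical placeholders.

```hcl
# Least-privilege access to the state backend. Attach the resulting policy
# to the IAM role that Terraform runs as.
data "aws_iam_policy_document" "terraform_state" {
  # Bucket-level permission the backend needs (e.g. to list workspaces).
  statement {
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::your-terraform-state-bucket"]
  }

  # Read and write only the state object configured as "key".
  statement {
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::your-terraform-state-bucket/path/to/your/state/file.tfstate"]
  }

  # Acquire and release locks in the DynamoDB table.
  statement {
    actions = [
      "dynamodb:DescribeTable",
      "dynamodb:GetItem",
      "dynamodb:PutItem",
      "dynamodb:DeleteItem",
    ]
    # 123456789012 is a hypothetical account ID.
    resources = ["arn:aws:dynamodb:your-aws-region:123456789012:table/your-dynamodb-lock-table"]
  }
}

resource "aws_iam_policy" "terraform_state" {
  name   = "terraform-state-access" # hypothetical policy name
  policy = data.aws_iam_policy_document.terraform_state.json
}
```

If you use workspaces, widen the object resource to cover their prefix as well. For example, with the default prefix use arn:aws:s3:::your-terraform-state-bucket/env:/*.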

Encryption

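Terraform state often contains sensitive values, such as database passwords, in plain text. Enable server-side encryption (SSE) on your S3 bucket so the state file is encrypted at rest. Encryption complements, rather than replaces, the IAM restrictions above.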
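A sketch of default bucket encryption with the AWS provider (version 4 or later) follows, reusing the placeholder bucket name. It shows SSE-S3 (AES256). To switch to SSE-KMS, set sse_algorithm to "aws:kms" and supply a KMS key.

```hcl
# Default server-side encryption for every object written to the state bucket.
resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state" {
  bucket = "your-terraform-state-bucket"

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256" # SSE-S3 (S3-managed keys)
    }
  }
}
```

The S3 backend block also accepts encrypt = true. This setting tells Terraform to request server-side encryption whenever it writes the state object.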

Versioning

Terraform does not use S3 object versioning itself the way it uses DynamoDB for locking. Enabling versioning on the state bucket still gives you a safety net. If the state file is accidentally deleted or overwritten with bad data, you can restore an earlier version. If critical business functions depend on this infrastructure, also keep backups of your state outside the bucket.
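A minimal sketch of turning on versioning for the state bucket follows. It assumes AWS provider version 4 or later and the placeholder bucket name.

```hcl
# Keep every previous version of the state file so it can be restored.
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = "your-terraform-state-bucket"

  versioning_configuration {
    status = "Enabled"
  }
}
```

To find and fetch an older version:

- List the versions with aws s3api list-object-versions.
- Download a specific one with aws s3api get-object and its --version-id.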

Lifecycle Policies

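Implement lifecycle policies on your S3 bucket to manage older copies of your state. Terraform reads and writes the current state file on every run, so keep that file in S3 Standard. Lifecycle rules are most useful for the noncurrent versions that versioning creates. You can move those versions to cheaper storage tiers and eventually expire them to reduce storage costs.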
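The sketch below targets only noncurrent versions and leaves the current state file untouched. It assumes versioning is enabled as described above and uses AWS provider version 4 or later. The 30- and 365-day thresholds and the rule id are arbitrary example values.

```hcl
# Archive and eventually expire old (noncurrent) state versions.
# The current state file is left untouched in S3 Standard.
resource "aws_s3_bucket_lifecycle_configuration" "terraform_state" {
  bucket = "your-terraform-state-bucket"

  rule {
    id     = "tidy-old-state-versions" # example rule name
    status = "Enabled"

    filter {} # apply the rule to every object in the bucket

    # Move versions to Standard-IA 30 days after they stop being current.
    noncurrent_version_transition {
      noncurrent_days = 30
      storage_class   = "STANDARD_IA"
    }

    # Delete versions 365 days after they stop being current.
    noncurrent_version_expiration {
      noncurrent_days = 365
    }
  }
}
```

Standard-IA bills objects smaller than 128 KB as if they were 128 KB. For small state files, the expiration rule may therefore save more than the transition. Adjust or drop the transition to suit your state sizes.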

Workspaces

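Terraform workspaces let you manage multiple environments (e.g., development, staging, production) from a single configuration. Each workspace has its own state file, stored in the same S3 bucket and locked through the same DynamoDB table. This keeps each environment's state separate and reduces the risk of accidental changes across environments. With the S3 backend, the default workspace uses the configured key. Other workspaces are stored under a prefix (env: by default) followed by the workspace name.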
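A sketch of the same backend with an explicit workspace prefix follows. workspace_key_prefix is an S3 backend argument whose default is env:, and the value workspaces is an arbitrary example. The local named environment is hypothetical and only illustrates reading the current workspace name.

```hcl
terraform {
  backend "s3" {
    bucket               = "your-terraform-state-bucket"
    key                  = "path/to/your/state/file.tfstate"
    region               = "your-aws-region"
    dynamodb_table       = "your-dynamodb-lock-table"
    workspace_key_prefix = "workspaces" # default is "env:"
  }
}

# The default workspace's state lives at path/to/your/state/file.tfstate.
# A workspace named "staging" is stored at
# workspaces/staging/path/to/your/state/file.tfstate.

# terraform.workspace returns the current workspace name, which can feed
# environment-specific naming.
locals {
  environment = terraform.workspace
}
```

Create and switch workspaces with terraform workspace new staging and terraform workspace select staging.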

Frequently Asked Questions

Q1: What happens if DynamoDB is unavailable?

If DynamoDB is unavailable, Terraform cannot acquire a lock on the state file. Commands that lock state (by default, including plan and apply) stop with an error instead of modifying anything. This protects data consistency, though it temporarily halts Terraform operations until the table is reachable again.

Q2: Can I use other backends besides S3 and DynamoDB?

Yes, Terraform supports various remote backends, including Azure Blob Storage, Google Cloud Storage, and more. The choice depends on your cloud provider and infrastructure setup. The S3 and DynamoDB combination is popular due to AWS’s prevalence and mature services.

Q3: How do I recover my Terraform state if it’s corrupted?

Regular backups are crucial. If corruption occurs despite the locking mechanisms, you may need to restore from a previous backup. S3 versioning can help recover earlier versions of the state, but relying solely on versioning is risky; a dedicated backup strategy is always advised.

Q4: Is using S3 and DynamoDB for Terraform Remote State expensive?

The cost depends on your usage. S3 charges for the data stored (priced by storage class) and for requests, and state files are usually small. DynamoDB charges for the reads and writes that lock operations use. You pay either per request (on-demand) or through provisioned read and write capacity units. For most projects, the costs are low, especially compared to the potential costs of downtime or data loss from inadequate state management.

Conclusion

Effectively managing your Terraform Remote State is crucial for building and maintaining robust and scalable infrastructure. Using AWS S3 and DynamoDB provides a secure, scalable, and collaborative solution for your Terraform Remote State. By following the best practices outlined in this article, including proper IAM configuration, encryption, and regular backups, you can confidently manage even the most complex infrastructure deployments. Remember to always prioritize security and consider the potential costs and strategies for maintaining your Terraform Remote State.

For further reading, refer to the official Terraform documentation on remote backends: Terraform S3 Backend Documentation and the AWS documentation on S3 and DynamoDB: AWS S3 Documentation, AWS DynamoDB Documentation. Thank you for reading the DevopsRoles page!