Establishing secure access to your AWS resources is paramount. Traditional methods such as VPNs tend to grant broad network-level access and are hard to manage as code, which makes them a poor fit for modern cloud environments. This article delves into leveraging Terraform AWS Verified Access with Google OIDC (OpenID Connect) to create a robust, automated, and highly secure access control solution. We’ll guide you through the process, from initial setup to advanced configurations, so that you understand how to implement Terraform AWS Verified Access effectively.

Table of Contents

- 1 Understanding AWS Verified Access and OIDC

- 2 Setting up Google OIDC

- 3 Implementing Terraform AWS Verified Access

- 4 Terraform AWS Verified Access: Best Practices

- 5 Frequently Asked Questions

- 5.1 Q1: Can I use AWS Verified Access with other identity providers besides Google OIDC?

- 5.2 Q2: How do I manage access to specific AWS resources using AWS Verified Access?

- 5.3 Q3: What happens if a user’s device doesn’t meet the specified device policy requirements?

- 5.4 Q4: How can I monitor the activity and logs of AWS Verified Access?

- 6 Conclusion

Understanding AWS Verified Access and OIDC

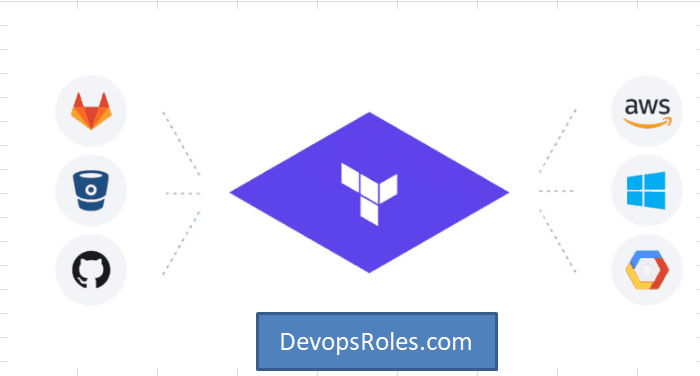

AWS Verified Access is a fully managed service that provides secure, zero-trust access to your corporate applications in AWS without requiring a VPN. It evaluates every access request against policies you define, using identity data and, optionally, device-posture data, which minimizes the attack surface. Using Google as the OIDC identity provider lets users sign in with the Google Workspace or Cloud Identity accounts they already have, so you don’t need to issue and rotate a separate set of credentials, such as VPN accounts, just for application access.

Key Benefits of Using AWS Verified Access with Google OIDC

- Enhanced Security: Leverages Google’s secure authentication mechanisms.

- Simplified Management: Centralized identity management through Google Workspace or Cloud Identity.

- Automation: Terraform enables Infrastructure as Code (IaC), automating the entire deployment process.

- Zero Trust Model: Access is granted based on identity and posture, not network location.

- Improved Auditability: Access logs can record every request, optionally including the identity and device trust data used in the access decision.

Setting up Google OIDC

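Before configuring Terraform AWS Verified Access, you need to register Verified Access as an OAuth 2.0 client with Google. Verified Access signs users in through Google’s OpenID Connect flow, so it needs an OAuth client ID and client secret. A service account key is not suitable here: service accounts authenticate workloads, not people.

Creating a Google OAuth Client

- Navigate to the Google Cloud Console and select your project.

- Configure the OAuth consent screen. Choosing the “Internal” user type restricts sign-in to users in your Google Workspace or Cloud Identity organization.

- Go to APIs & Services > Credentials.

- Click “CREATE CREDENTIALS” and select “OAuth client ID”.

- Choose “Web application” as the application type and provide a name (e.g., “aws-verified-access”).

- Under “Authorized redirect URIs”, add https://<your-application-domain>/oauth2/idpresponse, where <your-application-domain> is the domain users browse to for your application.

- Click “Create”.

- Copy the client ID and client secret. Keep the client secret secure; it is sensitive information.

Configuring the Google OIDC Provider

Verified Access needs the client ID and client secret from the previous step, plus Google’s OIDC endpoints, which Google publishes in its discovery document at https://accounts.google.com/.well-known/openid-configuration. These values are used in your Terraform configuration.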

Implementing Terraform AWS Verified Access

Now, let’s build the Terraform AWS Verified Access infrastructure using the Google OIDC provider. This example assumes you have already configured your AWS credentials for Terraform.

Terraform Code for AWS Verified Access

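```hcl
# Google OAuth client credentials, supplied at plan/apply time
# (for example via TF_VAR_google_client_id and TF_VAR_google_client_secret).
variable "google_client_id" {
  type = string
}

variable "google_client_secret" {
  type      = string
  sensitive = true
}

# User trust provider that authenticates people through Google's OIDC endpoints.
resource "aws_verifiedaccess_trust_provider" "google_oidc" {
  policy_reference_name    = "google" # prefix for this provider's claims in Cedar policies
  trust_provider_type      = "user"
  user_trust_provider_type = "oidc"
  description              = "Google OIDC provider"

  oidc_options {
    issuer                 = "https://accounts.google.com"
    authorization_endpoint = "https://accounts.google.com/o/oauth2/v2/auth"
    token_endpoint         = "https://oauth2.googleapis.com/token"
    user_info_endpoint     = "https://openidconnect.googleapis.com/v1/userinfo"
    client_id              = var.google_client_id
    client_secret          = var.google_client_secret
    scope                  = "openid email profile"
  }
}

# The Verified Access instance; trust providers are attached to it separately.
resource "aws_verifiedaccess_instance" "example" {
  description = "example-instance"
}

resource "aws_verifiedaccess_instance_trust_provider_attachment" "google" {
  verifiedaccess_instance_id       = aws_verifiedaccess_instance.example.id
  verifiedaccess_trust_provider_id = aws_verifiedaccess_trust_provider.google_oidc.id
}
```

Supply your Google client ID and client secret through the google_client_id and google_client_secret variables rather than hard-coding them. This configuration creates an OIDC user trust provider, a Verified Access instance, and the attachment that links the two. It does not configure device posture: Verified Access obtains device data from a separate device trust provider (Jamf, CrowdStrike, or JumpCloud), not from a setting on the instance. To expose an application, you also need a Verified Access group and an endpoint in front of your load balancer or network interface.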
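Instead of copying Google’s OIDC endpoints into the trust provider by hand, you could read them from Google’s discovery document at plan time. The sketch below assumes version 3 or later of the hashicorp/http provider (which exposes response_body); the data source, local, and output names are hypothetical.

```hcl
# Fetch Google's OIDC discovery document (hashicorp/http provider, v3+).
data "http" "google_oidc_discovery" {
  url = "https://accounts.google.com/.well-known/openid-configuration"

  request_headers = {
    Accept = "application/json"
  }
}

locals {
  # Parse the JSON response body into an object.
  google_oidc = jsondecode(data.http.google_oidc_discovery.response_body)
}

# The discovery document names the user info endpoint "userinfo_endpoint",
# while the Verified Access oidc_options argument is "user_info_endpoint".
output "google_oidc_endpoints" {
  value = {
    issuer                 = local.google_oidc.issuer
    authorization_endpoint = local.google_oidc.authorization_endpoint
    token_endpoint         = local.google_oidc.token_endpoint
    user_info_endpoint     = local.google_oidc.userinfo_endpoint
  }
}
```

You could then reference local.google_oidc from oidc_options instead of literal URLs. The trade-off is an extra network call on every plan, and a failed fetch blocks the run; hard-coded values avoid that dependency.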

Advanced Configurations

This basic configuration can be expanded to include:

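- Access Policies: Attach Cedar policies to Verified Access groups and endpoints to control which users can reach each application.

- Device Trust: Add a device trust provider (Jamf, CrowdStrike, or JumpCloud) and require specific device posture in your policies.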

- Conditional Access: Combine Verified Access with other security measures like MFA.

- Integration with other IAM systems: Extend your identity and access management to other providers.
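As a sketch of the device-trust option, the standalone snippet below registers Jamf as a device trust provider and attaches it to an existing instance. The instance ID and Jamf tenant ID are hypothetical input variables, and the policy reference name "jamf" is an arbitrary choice that your Cedar policies would use to read the device data.

```hcl
# Hypothetical inputs: an existing Verified Access instance and your Jamf tenant.
variable "verifiedaccess_instance_id" {
  type = string
}

variable "jamf_tenant_id" {
  type = string
}

# Device trust provider: supplies device-posture data to Verified Access policies.
resource "aws_verifiedaccess_trust_provider" "jamf" {
  policy_reference_name      = "jamf"
  trust_provider_type        = "device"
  device_trust_provider_type = "jamf"

  device_options {
    tenant_id = var.jamf_tenant_id
  }
}

# Attach it alongside the existing user trust provider.
resource "aws_verifiedaccess_instance_trust_provider_attachment" "jamf" {
  verifiedaccess_instance_id       = var.verifiedaccess_instance_id
  verifiedaccess_trust_provider_id = aws_verifiedaccess_trust_provider.jamf.id
}
```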

Terraform AWS Verified Access: Best Practices

Implementing secure Terraform AWS Verified Access requires careful planning and execution. Following best practices ensures robust security and maintainability.

Security Best Practices

- Use the principle of least privilege: Grant only the necessary permissions.

- Regularly review and update your access policies.

- Monitor access logs and audit trails for suspicious activity.

- Store sensitive values, such as the Google client secret, in a secrets management tool rather than in your Terraform files.
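A minimal sketch of that last practice, assuming the Google client secret has already been stored in AWS Secrets Manager under the hypothetical name verified-access/google-client-secret. Terraform reads it at plan time, so the secret never appears in your .tf files or version control.

```hcl
# Hypothetical secret name; create the secret (plain-text value) before running Terraform.
data "aws_secretsmanager_secret_version" "google_client_secret" {
  secret_id = "verified-access/google-client-secret"
}

locals {
  # Use this wherever the OIDC client secret is needed,
  # e.g. oidc_options.client_secret on the trust provider.
  google_client_secret = data.aws_secretsmanager_secret_version.google_client_secret.secret_string
}
```

The value is still written to Terraform state, so the state backend needs encryption and restricted access as well.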

IaC Best Practices

- Version control your Terraform code.

- Use a modular approach to manage your infrastructure.

- Employ automated testing to verify your configurations.

- Follow a structured deployment process.
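Pinning Terraform and provider versions in version-controlled code keeps plans reproducible across machines and CI runs. The constraints below are illustrative assumptions; check the AWS provider changelog for the version that introduced the aws_verifiedaccess_* resources you use.

```hcl
terraform {
  # Illustrative constraints; adjust to the versions your team has tested.
  required_version = ">= 1.3"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}
```

Commit the generated .terraform.lock.hcl file alongside this block so every run resolves the same provider build.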

Frequently Asked Questions

Q1: Can I use AWS Verified Access with other identity providers besides Google OIDC?

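Yes. For user identity, AWS Verified Access supports AWS IAM Identity Center (which can in turn federate with a SAML identity provider) and other OpenID Connect-compatible providers, such as Okta. For device posture, it supports device trust providers such as Jamf, CrowdStrike, and JumpCloud. You will need to adjust the Terraform trust provider configuration accordingly, using the relevant provider details.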
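For example, switching to IAM Identity Center changes only the trust provider type; there are no OIDC endpoints or client credentials to supply. A minimal sketch, where the policy reference name "idc" is an arbitrary choice:

```hcl
# User trust provider backed by IAM Identity Center instead of Google.
resource "aws_verifiedaccess_trust_provider" "identity_center" {
  policy_reference_name    = "idc"
  trust_provider_type      = "user"
  user_trust_provider_type = "iam-identity-center"
  description              = "IAM Identity Center user trust provider"
}
```

For another OIDC provider, you would keep user_trust_provider_type = "oidc" and replace the values in oidc_options with that provider’s endpoints and client credentials.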

Q2: How do I manage access to specific AWS resources using AWS Verified Access?

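You control access by attaching policies to Verified Access groups and endpoints. Each endpoint represents one application, and each group collects endpoints with similar security requirements. These policies are written in Cedar, not in the IAM policy language, and can test claims from your user trust provider as well as data from device trust providers. In Terraform, you set them with the policy_document argument of aws_verifiedaccess_group or aws_verifiedaccess_endpoint.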
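A minimal sketch of a group-level policy, assuming an existing instance ID passed in as a hypothetical variable and a Google trust provider, already attached to that instance, whose policy_reference_name is "google". The Cedar policy admits only users whose Google email address is verified and ends in example.com, a placeholder domain.

```hcl
# Hypothetical input: ID of an existing Verified Access instance.
variable "verifiedaccess_instance_id" {
  type = string
}

resource "aws_verifiedaccess_group" "corp_apps" {
  verifiedaccess_instance_id = var.verifiedaccess_instance_id
  description                = "Internal applications for example.com staff"

  # Cedar policy evaluated for requests to endpoints in this group.
  # "google" must match the trust provider's policy_reference_name.
  policy_document = <<-EOT
    permit(principal, action, resource)
    when {
      context.google.email_verified == true &&
      context.google.email like "*@example.com"
    };
  EOT
}
```

Endpoints placed in this group (through the verified_access_group_id argument of aws_verifiedaccess_endpoint) are subject to the group policy; an endpoint can additionally carry its own policy_document for application-specific rules.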

Q3: What happens if a user’s device doesn’t meet the specified device policy requirements?

Verified Access evaluates your policies on every request. If a policy requires device trust data (for example, an acceptable risk rating from a device trust provider) and the device does not satisfy it, the request is denied and the user does not reach the application.
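To illustrate, the policy below (stored in a Terraform local so it can be passed to a group’s or endpoint’s policy_document) admits a request only when the user’s Google identity checks out and the device’s risk rating is acceptable. It assumes policy reference names "google" and "jamf", and that Jamf’s trust data exposes a risk attribute with values such as LOW and SECURE; check the Verified Access trust-data documentation for your device provider’s actual attributes.

```hcl
locals {
  # Hypothetical combined identity + device policy in Cedar.
  # Both conditions must hold; otherwise the request is denied.
  identity_and_device_policy = <<-EOT
    permit(principal, action, resource)
    when {
      context.google.email_verified == true &&
      context.google.email like "*@example.com" &&
      ["LOW", "SECURE"].contains(context.jamf.risk)
    };
  EOT
}
```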

Q4: How can I monitor the activity and logs of AWS Verified Access?

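Verified Access can write access logs for every request it evaluates to Amazon CloudWatch Logs, Amazon S3, or Amazon Data Firehose; you enable them per instance in its logging configuration. The logs use the Open Cybersecurity Schema Framework (OCSF) format and can optionally include the trust data used in each decision. Separately, AWS CloudTrail records Verified Access API calls, such as creating or modifying instances, groups, and policies. Together, these provide an audit trail for compliance and security monitoring.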
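A minimal sketch that sends an instance’s access logs to CloudWatch Logs. The instance ID is a hypothetical input variable, and the log group name and 90-day retention are arbitrary choices; include_trust_context is paired with the newer OCSF log version, which is assumed here to be required for trust data.

```hcl
# Hypothetical input: ID of an existing Verified Access instance.
variable "verifiedaccess_instance_id" {
  type = string
}

resource "aws_cloudwatch_log_group" "verified_access" {
  name              = "/aws/verified-access/example" # hypothetical name
  retention_in_days = 90
}

resource "aws_verifiedaccess_instance_logging_configuration" "example" {
  verifiedaccess_instance_id = var.verifiedaccess_instance_id

  access_logs {
    # Record the identity/device data behind each allow or deny decision.
    include_trust_context = true
    log_version           = "ocsf-1.0.0-rc.2"

    cloudwatch_logs {
      enabled   = true
      log_group = aws_cloudwatch_log_group.verified_access.id # the log group's name
    }
  }
}
```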

Conclusion

Implementing Terraform AWS Verified Access with Google OIDC provides a powerful and secure way to manage access to your AWS resources. By leveraging the strengths of both services, you create a robust, automated, and highly secure infrastructure. Remember to carefully plan your implementation, follow best practices, and continuously monitor your environment to maintain optimal security. Effective use of Terraform AWS Verified Access significantly enhances your organization’s cloud security posture.

For further information, consult the official AWS Verified Access documentation: https://aws.amazon.com/verified-access/ and Google’s OpenID Connect documentation: https://developers.google.com/identity/openid-connect/openid-connect. Also consider exploring HashiCorp’s Terraform documentation for detailed examples and best practices: https://www.terraform.io/. Thank you for reading the DevopsRoles page!