Managing multiple AWS accounts can quickly become complex and time-consuming. Creating and configuring each account by hand is slow, error-prone, and scales poorly. This article looks at Account Factory for Terraform (AFT), the AWS Control Tower feature that provisions and customizes accounts through a Terraform-based pipeline, and at the broader practice of managing accounts as Terraform code. We'll explore its capabilities, walk through practical examples, and answer common questions to help you manage your AWS infrastructure effectively.

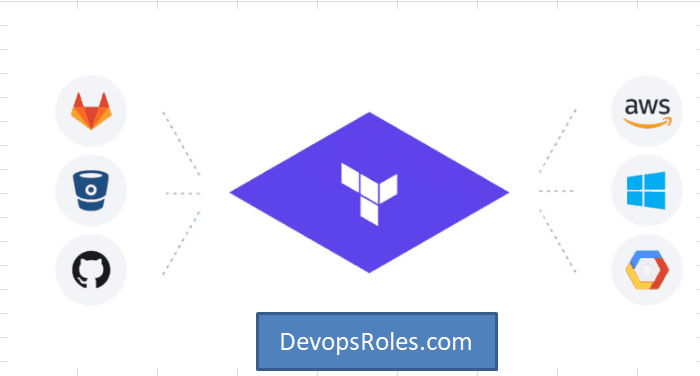

Understanding Account Factory for Terraform

Account Factory for Terraform (AFT) streamlines the creation and management of multiple AWS accounts. It is part of AWS Control Tower and builds on Terraform's infrastructure-as-code (IaC) capabilities, so you define accounts declaratively and keep those definitions under version control. This gives you consistency, repeatability, and an auditable history of changes to your AWS landscape. Instead of following manual procedures, you commit an account request to a Git repository; AFT's pipelines then create the account through Control Tower Account Factory and apply any customizations you have defined, such as baseline resources and initial configuration.
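To make "define the account specifications" concrete, here is a sketch of an AFT account request. It follows the layout of the public aft-account-request template repository; the module path, OU name, emails, and all other values are placeholders, and the exact input names should be checked against the AFT version you deploy.

```hcl
# Hypothetical account request modeled on the aft-account-request template.
# Every value below is a placeholder.
module "sandbox_account" {
  source = "./modules/aft-account-request"

  # Parameters handed to AWS Control Tower Account Factory
  control_tower_parameters = {
    AccountEmail              = "aws-sandbox-01@example.com"
    AccountName               = "sandbox-01"
    ManagedOrganizationalUnit = "Sandbox"
    SSOUserEmail              = "owner@example.com"
    SSOUserFirstName          = "Jane"
    SSOUserLastName           = "Doe"
  }

  # Tags applied to the new account
  account_tags = {
    "CostCenter" = "platform"
  }

  # Audit metadata recorded with the request
  change_management_parameters = {
    change_requested_by = "Platform Team"
    change_reason       = "New sandbox account"
  }

  # Free-form values made available to customizations
  custom_fields = {
    environment = "sandbox"
  }

  # Name of an account-customizations folder (assumed to exist in your repo)
  account_customizations_name = "sandbox"
}
```

Committing this file is what triggers provisioning in AFT; you do not run `terraform apply` against it yourself.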

Key Features and Benefits

- Automation: Eliminate manual steps, saving time and reducing human error.

- Consistency: Ensure all accounts are created with the same configurations and policies.

- Scalability: Create and manage hundreds or thousands of accounts, within your AWS Organizations account quota (which can be increased on request).

- Version Control: Track changes to your account creation process using Git.

- Idempotency: Repeated runs of the Terraform configuration will produce the same result without unintended side effects.

- Security: Implement robust security policies and controls from the outset.

Setting up Account Factory for Terraform

Before you begin, ensure you have the following prerequisites:

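- Access to your organization's management account, with permissions to create accounts. For AFT, you also need an AWS Control Tower landing zone and a dedicated AFT management account.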

- Terraform installed and configured.

- AWS credentials configured for Terraform.

- A basic understanding of Terraform concepts and syntax.
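For AFT specifically, the setup step is deploying AFT itself from the Control Tower management account. A minimal sketch is shown below; the named profile `ct-management` and all account IDs are placeholders, and the module source and required inputs should be confirmed against the AFT documentation for the version you use. Credentials can equally come from environment variables such as `AWS_PROFILE` or `AWS_ACCESS_KEY_ID`/`AWS_SECRET_ACCESS_KEY` instead of a `profile` argument.

```hcl
# Credentials for the AWS Control Tower management account.
# "ct-management" is a hypothetical named profile in ~/.aws/config.
provider "aws" {
  region  = "us-east-1"
  profile = "ct-management"
}

# Deploys the AFT pipelines into the pre-created AFT management account.
module "aft" {
  source = "github.com/aws-ia/terraform-aws-control_tower_account_factory"

  # Placeholder account IDs: replace with your own
  ct_management_account_id    = "111111111111"
  log_archive_account_id      = "222222222222"
  audit_account_id            = "333333333333"
  aft_management_account_id   = "444444444444"
  ct_home_region              = "us-east-1"
  tf_backend_secondary_region = "us-west-2"
}
```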

Step-by-Step Guide

- Install the necessary providers: You'll need the AWS provider and possibly others, depending on your requirements. Declare them in your `providers.tf` file, then run `terraform init` to download them:

  ```hcl
  terraform {
    required_providers {
      aws = {
        source  = "hashicorp/aws"
        version = "~> 4.0"
      }
    }
  }
  ```

- Define account specifications: Create a Terraform configuration file (for example, `main.tf`) that defines the parameters for your new AWS accounts, such as the account name, email address, and any required tags. This part varies heavily depending on your needs and on the Account Factory implementation you use.

- Apply the configuration: Run `terraform apply` to create the AWS accounts. Terraform shows the planned changes, asks for confirmation, and then creates the accounts defined in your configuration.

- Monitor the process: Watch the output of `terraform apply` to track progress. Account creation in AWS Organizations is asynchronous and can take several minutes per account; the AWS provider waits for each creation request to finish. With AFT, account requests are processed by the AFT pipelines, so you monitor those pipelines instead.

- Manage and update: Use Terraform's state to track and update your AWS accounts. Run `terraform plan` to review changes before applying them. Be careful with `terraform destroy`: depending on the implementation, destroying an account resource may only remove the account from the organization rather than closing it.
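Without AFT, the "Define account specifications" step can be expressed directly with the AWS provider's `aws_organizations_account` resource. The sketch below drives several accounts from one map; the variable name `accounts`, the account names, and the emails are hypothetical, and it must run with credentials for the organization's management account.

```hcl
# Hypothetical input: one entry per account, keyed by account name.
variable "accounts" {
  description = "Accounts to create, keyed by account name"
  type = map(object({
    email = string
    tags  = map(string)
  }))
  default = {
    "dev-workloads" = {
      email = "aws-dev@example.com"
      tags  = { Environment = "dev" }
    }
    "prod-workloads" = {
      email = "aws-prod@example.com"
      tags  = { Environment = "prod" }
    }
  }
}

# One member account per map entry
resource "aws_organizations_account" "this" {
  for_each = var.accounts

  name  = each.key
  email = each.value.email
  tags  = each.value.tags

  # Role created in the new account that the management account can assume
  role_name = "OrganizationAccountAccessRole"
}

# Map of account name to the new account ID
output "account_ids" {
  value = { for name, acct in aws_organizations_account.this : name => acct.id }
}
```

Adding an entry to the map and re-running `terraform apply` creates one more account and leaves the existing ones untouched. Each email address must not already be associated with another AWS account.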

Advanced Usage of Account Factory for Terraform

Beyond basic account creation, Account Factory for Terraform offers advanced capabilities to further enhance your infrastructure management:

Organizational Unit (OU) Management

Organize your AWS accounts into hierarchical OUs within AWS Organizations for better governance and access control. Account Factory can place each newly created account into a specific OU based on predefined rules or tags; in AFT, the account request names the target OU in its `ManagedOrganizationalUnit` parameter.
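With the plain AWS provider, OU placement is controlled by an account's `parent_id`. The minimal sketch below creates two hypothetical OUs (`dev` and `prod`) under the organization root and uses the account's `Environment` tag as a simple placement rule; the names, email, and tag key are placeholders.

```hcl
# Look up the organization to find its root ID
data "aws_organizations_organization" "current" {}

# One OU per environment, directly under the organization root
resource "aws_organizations_organizational_unit" "env" {
  for_each  = toset(["dev", "prod"])
  name      = each.key
  parent_id = data.aws_organizations_organization.current.roots[0].id
}

# Placement rule: the Environment tag selects the OU
locals {
  app_tags = { Environment = "prod" }
}

resource "aws_organizations_account" "app" {
  name      = "app-prod"
  email     = "aws-app-prod@example.com"
  tags      = local.app_tags
  parent_id = aws_organizations_organizational_unit.env[local.app_tags.Environment].id
}
```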

Service Control Policies (SCPs)

Implement centralized security controls with SCPs to enforce consistent policies across all accounts. Because an SCP attached to an OU applies to every account in that OU, placing newly created accounts in the right OU is enough for them to inherit the required guardrails.
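As a sketch of that inheritance, the configuration below defines a simple guardrail SCP that stops member accounts from leaving the organization and attaches it to an OU. It assumes the `SERVICE_CONTROL_POLICY` policy type is already enabled for the organization; the policy name and the `workloads_ou_id` variable are hypothetical.

```hcl
# Guardrail: deny the action that lets a member account leave the organization
data "aws_iam_policy_document" "deny_leave_org" {
  statement {
    sid       = "DenyLeaveOrganization"
    effect    = "Deny"
    actions   = ["organizations:LeaveOrganization"]
    resources = ["*"]
  }
}

resource "aws_organizations_policy" "deny_leave_org" {
  name        = "deny-leave-organization"
  description = "Prevents member accounts from leaving the organization"
  type        = "SERVICE_CONTROL_POLICY"
  content     = data.aws_iam_policy_document.deny_leave_org.json
}

# Hypothetical input: ID of the OU whose accounts should inherit the SCP
variable "workloads_ou_id" {
  type        = string
  description = "Target OU ID, for example ou-xxxx-xxxxxxxx"
}

# Every account placed in this OU, now or later, is subject to the policy
resource "aws_organizations_policy_attachment" "workloads" {
  policy_id = aws_organizations_policy.deny_leave_org.id
  target_id = var.workloads_ou_id
}
```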

Custom Configuration Modules

Develop custom Terraform modules to provision essential services in newly created accounts, such as VPCs, IAM roles, or other foundational infrastructure. This extends automation beyond basic account creation to the account's initial configuration. In AFT, this role is played by global customizations, which apply to every account, and account customizations, which an account request selects by name.
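Outside AFT, one common way to run such configuration in a freshly created account is a provider alias that assumes the role AWS Organizations creates there. A minimal sketch, assuming the account was created through Organizations with the default `OrganizationAccountAccessRole`, that its ID is supplied through a hypothetical `new_account_id` variable, and that the region and CIDR block are placeholders:

```hcl
# Hypothetical input: ID of an account created in an earlier apply
variable "new_account_id" {
  type = string
}

# Provider alias that works inside the new account via cross-account role
provider "aws" {
  alias  = "new_account"
  region = "us-east-1"

  assume_role {
    role_arn = "arn:aws:iam::${var.new_account_id}:role/OrganizationAccountAccessRole"
  }
}

# Baseline VPC created in the new account, not the management account
resource "aws_vpc" "baseline" {
  provider   = aws.new_account
  cidr_block = "10.20.0.0/16"

  tags = {
    Name = "baseline"
  }
}
```

In practice you would wrap resources like this VPC in a module and pass `providers = { aws = aws.new_account }` to it. Supplying the account ID as a variable, rather than reading it from an `aws_organizations_account` resource in the same configuration, avoids a provider configuration that depends on values unknown at plan time.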

Example Code Snippet (Illustrative):

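This is a deliberately minimal example using the AWS provider's `aws_organizations_account` resource. It must be applied with credentials for the organization's management account, and the placeholders need real values:

```hcl
resource "aws_organizations_account" "example" {
  name  = "my-account"
  email = "example@example.com" # must not already belong to another AWS account

  # Optional: placeholder ID of the root or OU that should contain the account
  parent_id = "some-parent-id"
}
```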

Frequently Asked Questions

Q1: How does Account Factory handle account deletion?

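How deletion works depends on the implementation. With the AWS provider's `aws_organizations_account` resource, `terraform destroy` either closes the account or only removes it from the organization, depending on the `close_on_deletion` argument. With AFT, removing an account request should not be assumed to close the account; follow the AFT documentation for the supported offboarding steps. In either case, review the resources and services deployed in the account before removing it.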
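The sketch below contrasts two ways to handle deletion with the plain AWS provider: a sandbox account that Terraform closes on destroy, and a production account protected by Terraform's `prevent_destroy` lifecycle setting. Account names and emails are placeholders, and `close_on_deletion` requires a sufficiently recent 4.x or later AWS provider. AWS also limits how many accounts can be closed within a rolling period, so bulk teardown may not complete in one run.

```hcl
# Sandbox account: terraform destroy closes the AWS account
resource "aws_organizations_account" "sandbox" {
  name              = "sandbox-01"
  email             = "aws-sandbox-01@example.com"
  close_on_deletion = true
}

# Production account: Terraform rejects any plan that would destroy it
resource "aws_organizations_account" "prod" {
  name  = "prod-01"
  email = "aws-prod-01@example.com"
  # close_on_deletion defaults to false: a destroy would only remove
  # the account from the organization, not close it

  lifecycle {
    prevent_destroy = true
  }
}
```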

Q2: What are the security implications of using Account Factory?

Security is paramount. Ensure you use appropriate IAM roles and policies to restrict access to your AWS environment and the Terraform configuration files. Employ the principle of least privilege, granting only the necessary permissions. Regularly review and update your security configurations to mitigate potential risks.
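One way to apply least privilege is to scope the identity Terraform runs as to the Organizations actions it actually needs. The policy below is illustrative: the action list is an assumption covering creating, describing, tagging, and moving accounts, and your provider version and configuration may need more (closing accounts, for example, also needs `organizations:CloseAccount`). The policy name is hypothetical.

```hcl
# Illustrative least-privilege policy for an account-vending Terraform role
data "aws_iam_policy_document" "account_vending" {
  statement {
    sid    = "AccountVending"
    effect = "Allow"
    actions = [
      "organizations:CreateAccount",
      "organizations:DescribeCreateAccountStatus",
      "organizations:DescribeAccount",
      "organizations:DescribeOrganization",
      "organizations:ListAccounts",
      "organizations:ListParents",
      "organizations:MoveAccount",
      "organizations:TagResource",
      "organizations:UntagResource",
      "organizations:ListTagsForResource",
    ]
    resources = ["*"]
  }
}

resource "aws_iam_policy" "account_vending" {
  name   = "terraform-account-vending"
  policy = data.aws_iam_policy_document.account_vending.json
}
```

Attach the policy to the role Terraform assumes rather than to individual users, and widen it only when an access-denied error shows a genuinely missing action.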

Q3: Can I use Account Factory for non-AWS cloud providers?

Account Factory is specifically designed for managing AWS accounts. While the underlying concept of automated account creation is applicable to other cloud providers, the implementation would require different tools and configurations adapted to the specific provider’s APIs and infrastructure.

Q4: How can I troubleshoot issues with Account Factory?

Review the output of `terraform plan` and `terraform apply` carefully; error messages often pinpoint the cause of a problem. For more detail, set the `TF_LOG` environment variable (for example, `TF_LOG=DEBUG`) before running a command. With AFT, also check the pipeline runs (AWS CodePipeline and AWS CodeBuild) in the AFT management account. Refer to the official AWS and Terraform documentation for further troubleshooting guidance, and use logging and monitoring tools to track progress and identify unexpected behaviour.

Conclusion

Implementing Account Factory for Terraform dramatically improves the efficiency and scalability of managing multiple AWS accounts. By automating the creation and configuration process, you can focus on higher-level tasks and reduce the risk of human error. Remember to prioritize security best practices throughout the process and leverage the advanced features of Account Factory to further optimize your AWS infrastructure management. Mastering Account Factory for Terraform is a key step towards robust and efficient cloud operations.

For further information, refer to the official Terraform documentation and the AWS documentation. You can also find helpful resources and community support on various online forums and developer communities. Thank you for reading the DevopsRoles page!