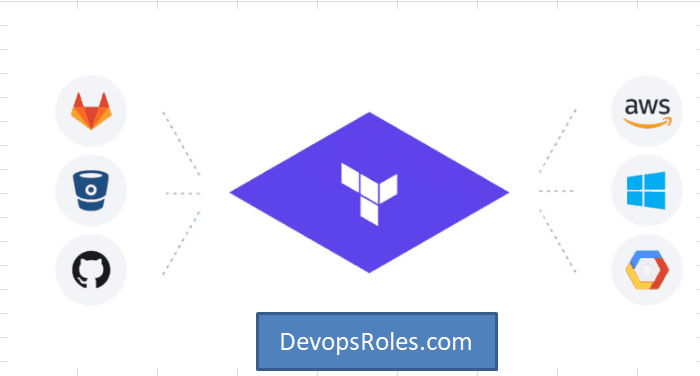

In today’s dynamic cloud computing landscape, efficient infrastructure management is paramount. Manually provisioning and managing cloud resources is time-consuming, error-prone, and inefficient. This is where Infrastructure as Code (IaC) tools like Terraform shine. This guide shows how to combine Vultr Cloud and Terraform to automate your Vultr deployments and streamline your workflow. We’ll cover everything from a basic setup to more advanced configurations.

Understanding the Power of Vultr Cloud Terraform

With Terraform and Vultr’s Terraform provider, you define and manage your Vultr cloud infrastructure in declarative configuration files written in HashiCorp Configuration Language (HCL). Instead of clicking through web interfaces, you write code that describes your desired infrastructure state. Terraform then compares this desired state with the actual state of your Vultr environment and makes the changes needed to bring them into alignment. This approach offers several key advantages:

- Automation: Automate the entire provisioning process, from creating instances to configuring networks and databases.

- Consistency: Ensure consistent infrastructure deployments across different environments (development, staging, production).

- Version Control: Track changes to your infrastructure as code using Git or other version control systems.

- Collaboration: Facilitate collaboration among team members through a shared codebase.

- Repeatability: Easily recreate your infrastructure from scratch whenever needed.

Setting up Your Vultr Cloud Terraform Environment

Before diving into code, we need to prepare our environment. This involves:

1. Installing Terraform

Download the appropriate Terraform binary for your operating system from the official HashiCorp website: https://www.terraform.io/downloads.html. Follow the installation instructions provided for your system.

2. Obtaining a Vultr API Key

You’ll need a Vultr API key to authenticate Terraform with your Vultr account. Generate a new API key within your Vultr account settings. Keep this key secure; it grants full access to your Vultr account.

3. Creating a Provider Configuration File

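Terraform uses provider configurations to connect to different cloud platforms. Create a file named providers.tf (or include it within your main Terraform configuration file) and add the following, replacing YOUR_API_KEY with your Vultr API key. Better still, delete the api_key line and export the key as the VULTR_API_KEY environment variable, which the provider reads automatically; the key then never appears in your files.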

```hcl
terraform {
  required_providers {
    vultr = {
      source  = "vultr/vultr"
      version = "~> 2.0"
    }
  }
}

provider "vultr" {
  # Placeholder only: never commit a real key. Omit this line to use VULTR_API_KEY instead.
  api_key = "YOUR_API_KEY"
}
```

Creating Your First Vultr Cloud Terraform Resource: Deploying a Simple Instance

Let’s create a simple Terraform configuration to deploy a single Vultr instance. Create a file named main.tf:

```hcl
resource "vultr_instance" "my_instance" {
  region      = "ewr"               # New Jersey
  plan        = "vc2-1c-2gb"        # 1 vCPU, 2 GB RAM
  os_id       = 289                 # Ubuntu 20.04 (verify against the Vultr OS list)
  label       = "terraform-instance"
  ssh_key_ids = ["YOUR_SSH_KEY_ID"] # Replace with your Vultr SSH key ID
}
```

This configuration defines a single Vultr instance in the New Jersey (ewr) region on the vc2-1c-2gb plan (1 vCPU, 2 GB RAM). Replace YOUR_SSH_KEY_ID with the ID of an SSH key stored in your Vultr account; note that ssh_key_ids takes a list. The os_id selects the operating system image; you can find the available OS IDs in the Vultr API documentation: https://www.vultr.com/api/#operation/list-os
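Rather than hardcoding the numeric os_id, you can look it up by name with the provider’s vultr_os data source. The sketch below is a variant of main.tf; it assumes the display name "Ubuntu 22.04 LTS x64" appears in the Vultr OS list, so copy the exact name from that list for the image you actually want.

```hcl
# Look up an OS image by its display name instead of hardcoding its numeric ID.
# Assumption: this exact name exists in the Vultr OS list.
data "vultr_os" "ubuntu" {
  filter {
    name   = "name"
    values = ["Ubuntu 22.04 LTS x64"]
  }
}

resource "vultr_instance" "my_instance" {
  region      = "ewr"
  plan        = "vc2-1c-2gb"
  os_id       = data.vultr_os.ubuntu.id # read from the data source during plan
  label       = "terraform-instance"
  ssh_key_ids = ["YOUR_SSH_KEY_ID"]     # Replace with your Vultr SSH key ID
}
```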

To deploy this instance, run the following commands:

```bash
terraform init
terraform plan
terraform apply
```

terraform init initializes the Terraform working directory and downloads the Vultr provider. terraform plan shows you what Terraform will do. terraform apply executes the plan, creating your Vultr instance.
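To see the new instance’s IP address without opening the Vultr console, you can declare an output. This small sketch assumes the resource name my_instance from main.tf; main_ip is the instance’s main IPv4 address as exported by the Vultr provider, and the output name instance_ip is arbitrary.

```hcl
# outputs.tf: print the instance's public IPv4 address after apply.
output "instance_ip" {
  description = "Main IPv4 address of the Vultr instance"
  value       = vultr_instance.my_instance.main_ip
}
```

After terraform apply finishes, terraform output instance_ip prints the address again at any time.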

Advanced Vultr Cloud Terraform Configurations

Beyond basic instance creation, Terraform’s power shines in managing complex infrastructure deployments. Here are some advanced scenarios:

Deploying Multiple Instances

You can deploy multiple instances with the count or for_each meta-argument.
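for_each is often the better fit when instances differ from one another. Each instance is keyed by a map key instead of a numeric index, so removing one entry does not shift the addresses of the others; when count is driven by a list, removing an item from the middle renumbers everything after it, which Terraform treats as changes to those instances. A minimal sketch, assuming a hypothetical servers variable; ord is Vultr’s Chicago region.

```hcl
# One instance per map entry; each key becomes part of a stable address,
# e.g. vultr_instance.servers["api"].
variable "servers" {
  type = map(object({
    region = string
    plan   = string
  }))
  default = {
    api    = { region = "ewr", plan = "vc2-1c-2gb" }
    worker = { region = "ord", plan = "vc2-2c-4gb" }
  }
}

resource "vultr_instance" "servers" {
  for_each    = var.servers
  region      = each.value.region
  plan        = each.value.plan
  os_id       = 289                 # verify against the Vultr OS list
  label       = "terraform-${each.key}"
  ssh_key_ids = ["YOUR_SSH_KEY_ID"] # Replace with your Vultr SSH key ID
}
```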

```hcl
# count: three identical instances, labeled terraform-instance-0 to terraform-instance-2
resource "vultr_instance" "my_instances" {
  count       = 3
  region      = "ewr"
  plan        = "vc2-1c-2gb"
  os_id       = 289                 # Ubuntu 20.04
  label       = "terraform-instance-${count.index}"
  ssh_key_ids = ["YOUR_SSH_KEY_ID"] # Replace with your Vultr SSH key ID
}
```

Managing Networks and Subnets

Terraform can also create Vultr private networks, including their IPv4 subnet, and attach instances to them:

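```hcl
resource "vultr_private_network" "my_network" {
  description    = "my-private-network"
  region         = "ewr"
  v4_subnet      = "10.0.0.0" # optional; Vultr assigns a subnet if omitted
  v4_subnet_mask = 24
}

resource "vultr_instance" "my_instance" {
  # ... region, plan, os_id, and other instance arguments ...
  # The network must be in the same region as the instance.
  private_network_ids = [vultr_private_network.my_network.id]
}
```

Recent 2.x releases of the Vultr provider deprecate vultr_private_network and private_network_ids in favor of vultr_vpc and vpc_ids, so check the provider documentation for the version you pin.

Using Variables and Modules for Reusability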

Use Terraform’s variables and modules to make your configurations reusable and easier to maintain. Variables parameterize a configuration, while modules package a set of resources into a reusable component.
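A module is a directory of .tf files that you call with a module block. The following is a minimal sketch, assuming a hypothetical local module at ./modules/vultr-instance; the file boundaries are marked with comments, and the variable names are illustrative rather than anything Vultr defines.

```hcl
# --- modules/vultr-instance/versions.tf (hypothetical module path) ---
# Child modules must declare non-HashiCorp providers themselves.
terraform {
  required_providers {
    vultr = {
      source = "vultr/vultr"
    }
  }
}

# --- modules/vultr-instance/variables.tf ---
variable "label" {
  type = string
}

variable "region" {
  type    = string
  default = "ewr"
}

variable "plan" {
  type    = string
  default = "vc2-1c-2gb"
}

variable "ssh_key_ids" {
  type    = list(string)
  default = []
}

# --- modules/vultr-instance/main.tf ---
resource "vultr_instance" "this" {
  region      = var.region
  plan        = var.plan
  os_id       = 289 # verify against the Vultr OS list
  label       = var.label
  ssh_key_ids = var.ssh_key_ids
}

# --- modules/vultr-instance/outputs.tf ---
output "main_ip" {
  value = vultr_instance.this.main_ip
}

# --- main.tf in the root configuration ---
module "staging_web" {
  source      = "./modules/vultr-instance"
  label       = "staging-web"
  ssh_key_ids = ["YOUR_SSH_KEY_ID"] # Replace with your Vultr SSH key ID
}
```

The root configuration can then read the address as module.staging_web.main_ip, and a second module block with different inputs gives you another environment from the same code.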

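```hcl
# variables.tf
variable "instance_type" {
  type    = string
  default = "vc2-1c-2gb"
}

# main.tf
resource "vultr_instance" "my_instance" {
  plan = var.instance_type
  # ... region, os_id, and other arguments ...
}
```

You can override the default at run time, for example with terraform apply -var="instance_type=vc2-2c-4gb", or in a terraform.tfvars file.

Implementing Security Best Practices with Vultr Cloud Terraform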

Security is paramount when managing cloud resources. Implement the following best practices:

- Use Dedicated SSH Keys: Never put private keys in your Terraform configuration. Register only the public key with Vultr, either in the Vultr console or with the vultr_ssh_key resource, and reference it by ID.

- Enable Firewall Groups: Vultr’s counterpart to security groups is the firewall group, managed with the vultr_firewall_group and vultr_firewall_rule resources. Attach one to each instance to allow inbound traffic only on the ports and from the sources you need.

- Regularly Update Your Code: Maintain your Terraform configurations and update your Vultr instances to benefit from security patches.

- Store API Keys Securely: Never commit your Vultr API key to your Git repository. Supply it through the VULTR_API_KEY environment variable, or retrieve it from a secrets manager such as HashiCorp Vault or AWS Secrets Manager.
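The SSH key and firewall practices above can be sketched in HCL as follows. This is a minimal example under stated assumptions: the public-key path, the trusted range 203.0.113.0/24 (a documentation-only range), and all resource names are hypothetical placeholders. The API key does not appear at all, because the provider reads it from VULTR_API_KEY.

```hcl
# Register only the PUBLIC key; the private key never leaves your machine.
resource "vultr_ssh_key" "deploy" {
  name    = "terraform-deploy"
  ssh_key = trimspace(file(pathexpand("~/.ssh/id_ed25519.pub"))) # hypothetical path
}

# Firewall group: Vultr's equivalent of a security group.
resource "vultr_firewall_group" "web" {
  description = "web servers"
}

# Inbound SSH only from a trusted IPv4 range (placeholder CIDR).
resource "vultr_firewall_rule" "ssh" {
  firewall_group_id = vultr_firewall_group.web.id
  protocol          = "tcp"
  ip_type           = "v4"
  subnet            = "203.0.113.0"
  subnet_size       = 24
  port              = "22"
  notes             = "SSH from trusted range"
}

# Inbound HTTPS from anywhere (0.0.0.0/0).
resource "vultr_firewall_rule" "https" {
  firewall_group_id = vultr_firewall_group.web.id
  protocol          = "tcp"
  ip_type           = "v4"
  subnet            = "0.0.0.0"
  subnet_size       = 0
  port              = "443"
}

# The instance references the key and the firewall group by ID.
resource "vultr_instance" "secure_web" {
  region            = "ewr"
  plan              = "vc2-1c-2gb"
  os_id             = 289 # verify against the Vultr OS list
  label             = "secure-web"
  ssh_key_ids       = [vultr_ssh_key.deploy.id]
  firewall_group_id = vultr_firewall_group.web.id
}
```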

Frequently Asked Questions

Q1: Can I use Terraform to manage existing Vultr resources?

Yes. Terraform’s import command (and, in Terraform 1.5 and later, the import block) adds existing resources to your Terraform state so that Terraform manages them from then on. You also need a resource block in your configuration that describes each imported resource.
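A minimal sketch of the import block, assuming Terraform 1.5 or later; the resource name legacy, its arguments, and INSTANCE_ID are placeholders to replace with the details of your existing instance. On older Terraform versions, the CLI equivalent is terraform import vultr_instance.legacy INSTANCE_ID.

```hcl
# import.tf: requires Terraform 1.5 or later.
# INSTANCE_ID is a placeholder; copy the real ID from the Vultr console or API.
import {
  to = vultr_instance.legacy
  id = "INSTANCE_ID"
}

# The matching resource block. Its arguments should describe the existing
# instance so that the next plan shows no unexpected changes.
resource "vultr_instance" "legacy" {
  region = "ewr"
  plan   = "vc2-1c-2gb"
  os_id  = 289
  label  = "legacy-server"
}
```

Alternatively, leave out the resource block and run terraform plan -generate-config-out=generated.tf to have Terraform draft one for you, then review it before applying.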

Q2: How do I handle errors during Terraform deployments?

Terraform prints detailed error messages that usually point to the root cause, such as an invalid argument or an error returned by the Vultr API. Read them carefully to troubleshoot. For more detail, set the TF_LOG environment variable (for example, TF_LOG=DEBUG), and optionally TF_LOG_PATH to write the log to a file.

Q3: What are the best practices for managing state in Vultr Cloud Terraform deployments?

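Store your Terraform state remotely using a backend like Terraform Cloud, AWS S3, or Azure Blob Storage. This gives your team a single shared copy of the state and protects against data loss. Because state can contain sensitive values, restrict access to it and enable encryption, and prefer a backend that supports state locking so that two people cannot run apply against the same state at once.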
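A minimal sketch using the S3 backend; the bucket name, key, and region are hypothetical placeholders. Backend blocks cannot reference variables, so the values must be literals or be passed with terraform init -backend-config.

```hcl
# backend.tf: keep Terraform state in S3 instead of a local terraform.tfstate.
terraform {
  backend "s3" {
    bucket  = "my-terraform-state"           # existing bucket (hypothetical name)
    key     = "vultr/prod/terraform.tfstate" # object path for this configuration's state
    region  = "us-east-1"
    encrypt = true                           # server-side encryption for the state object
  }
}
```

After adding the block, run terraform init -migrate-state to copy any existing local state into the bucket. For locking, check the S3 backend documentation for the options your Terraform version supports.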

Q4: Are there any limitations to using Vultr Cloud Terraform?

While Vultr Cloud Terraform offers extensive capabilities, some advanced features or specific Vultr services might have limited Terraform provider support. Always refer to the official provider documentation for the most up-to-date information.

Conclusion

Automating your Vultr cloud infrastructure with Vultr Cloud Terraform is a game-changer for DevOps engineers, developers, and system administrators. By implementing IaC, you achieve significant improvements in efficiency, consistency, and security. This guide has covered the fundamentals and advanced techniques for deploying and managing Vultr resources using Terraform. Remember to prioritize security best practices and explore the full potential of Terraform’s features for optimal results. Mastering Vultr Cloud Terraform will empower you to manage your cloud infrastructure with unparalleled speed and accuracy. Thank you for reading the DevopsRoles page!