Managing cloud infrastructure efficiently is paramount for any organization. The sheer scale and complexity of modern cloud deployments necessitate automation, and Terraform has emerged as a leading Infrastructure as Code (IaC) tool. This article delves into the intricacies of the Cloudflare Terraform provider, demonstrating how to automate the creation and management of your Cloudflare resources. We’ll explore various aspects of using this provider, from basic configurations to more advanced scenarios, addressing common challenges and providing best practices along the way. Mastering the Cloudflare Terraform provider significantly streamlines your workflow and ensures consistency across your Cloudflare deployments.

Understanding the Cloudflare Terraform Provider

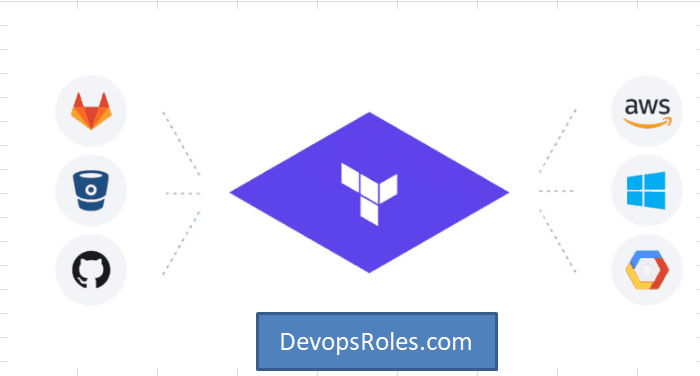

The Cloudflare Terraform provider acts as a bridge between Terraform and the Cloudflare API. It allows you to define your Cloudflare infrastructure as code, using Terraform’s declarative configuration language. This means you describe the desired state of your Cloudflare resources (e.g., zones, DNS records, firewall rules), and Terraform handles the creation, modification, and deletion of those resources automatically. This approach drastically reduces manual effort, minimizes errors, and promotes reproducibility. The provider offers a rich set of resources covering most aspects of the Cloudflare platform, enabling comprehensive infrastructure management.

Key Features of the Cloudflare Terraform Provider

- Declarative Configuration: Define your infrastructure using human-readable code.

- Version Control Integration: Track changes to your infrastructure configuration using Git or similar systems.

- Automation: Automate the entire lifecycle of your Cloudflare resources.

- Idempotency: Apply the same configuration multiple times without unintended side effects.

- Extensive Resource Coverage: Supports a wide range of Cloudflare resources, including DNS records, zones, firewall rules, and more.

Installing and Configuring the Cloudflare Terraform Provider

Terraform providers are not installed separately. You declare the provider in your configuration, and Terraform downloads it when you run `terraform init`. Setup therefore comes down to two steps: specifying the provider’s source and version, and supplying a Cloudflare API token.

Installation

Declare the provider in a required_providers block in your Terraform configuration file (typically main.tf), then add a provider block with your credentials:

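    terraform {
      required_providers {
        cloudflare = {
          source = "cloudflare/cloudflare"
          # Resource names used in this article (e.g. cloudflare_dns_record)
          # follow the 5.x provider series.
          version = "~> 5.0"
        }
      }
    }

    provider "cloudflare" {
      # Placeholder only; the next section covers safer ways to supply the token.
      api_token = "YOUR_CLOUDFLARE_API_TOKEN"
    }

Replace "YOUR_CLOUDFLARE_API_TOKEN" with your actual Cloudflare API token. You can create one on the API Tokens page of your Cloudflare profile.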

Authentication and API Token

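The api_token attribute grants access to your Cloudflare account, so keep it secret and never commit it to version control. Rather than hardcoding it, supply it through an environment variable (the provider reads CLOUDFLARE_API_TOKEN when api_token is not set in the configuration) or a secrets management system. Scope the token to only the permissions and zones your configuration needs, so that a leaked token exposes as little as possible.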
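One way to keep the token out of the configuration is a sensitive input variable, sketched below. The variable name cloudflare_api_token is an assumption chosen for illustration, and this provider block would take the place of the hardcoded one shown earlier rather than sit alongside it.

```hcl
# Hypothetical variable name; any valid identifier works.
variable "cloudflare_api_token" {
  description = "API token used by the Cloudflare provider"
  type        = string
  sensitive   = true # redacts the value in plan and apply output
}

provider "cloudflare" {
  # The value is supplied at run time instead of being written into the file.
  api_token = var.cloudflare_api_token
}
```

Terraform fills the variable from an environment variable named TF_VAR_cloudflare_api_token, so the token can be injected by a CI system or a secrets manager without ever appearing in the repository.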

Creating Cloudflare Resources with Terraform

Once the provider is configured, you can begin defining and managing Cloudflare resources. This section provides examples for some common resources.

Managing DNS Records

DNS records are among the most frequently managed Cloudflare resources. The following example adds an A record that points www at 192.0.2.1 with a TTL of 300 seconds.

    resource "cloudflare_dns_record" "example" {
      zone_id = "YOUR_ZONE_ID"
      name    = "www"
      type    = "A"
      content = "192.0.2.1"
      ttl     = 300
    }

Remember to replace YOUR_ZONE_ID with your actual Cloudflare zone ID.
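When a zone needs several similar records, repeating the resource block becomes tedious. The sketch below uses Terraform's for_each to create one A record per map entry. The local name a_records, the hostnames, and the addresses are illustrative assumptions, and the attributes mirror the single-record example above.

```hcl
locals {
  # Hypothetical hostnames mapped to documentation-only IP addresses.
  a_records = {
    www = "192.0.2.1"
    api = "192.0.2.2"
  }
}

resource "cloudflare_dns_record" "a" {
  # One resource instance per map entry, addressed as
  # cloudflare_dns_record.a["www"], cloudflare_dns_record.a["api"], ...
  for_each = local.a_records

  zone_id = "YOUR_ZONE_ID"
  name    = each.key
  type    = "A"
  content = each.value
  ttl     = 300
}
```

Adding or removing an entry in the map adds or removes exactly one record on the next apply, which keeps the change easy to review in the plan.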

Working with Cloudflare Zones

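Managing zones is equally important. The cloudflare_zone resource represents a zone in your account. Terraform can create the zone entry, but domain ownership is still verified outside Terraform, by pointing the domain’s nameservers at Cloudflare at your registrar. A zone that already exists should be imported into Terraform state rather than re-created, after which its settings can be managed as code.

    resource "cloudflare_zone" "example" {
      # Provider 5.x syntax; 4.x used account_id and zone instead.
      account = {
        id = "YOUR_ACCOUNT_ID"
      }
      name   = "example.com"
      paused = false # Example: true leaves the zone on Cloudflare DNS only.
    }

Replace YOUR_ACCOUNT_ID and example.com with your own account ID and domain.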
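For a zone that already exists in your Cloudflare account, one option is an import block (available in Terraform 1.5 and later), which adopts the zone into state on the next apply. The sketch below assumes the resource address cloudflare_zone.example from the example above and uses YOUR_ZONE_ID as a placeholder.

```hcl
# Adopt an existing zone into Terraform state instead of creating a new one.
import {
  to = cloudflare_zone.example # resource address defined in the configuration
  id = "YOUR_ZONE_ID"          # placeholder for the existing zone's ID
}
```

Running `terraform plan` afterwards shows the import together with any differences between the live zone and the configuration, so they can be reconciled before anything is changed.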

Advanced Usage: Firewall Rules

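Firewall rules are another powerful use case. In the legacy cloudflare_firewall_rule resource, the match expression does not sit next to the action: in provider 4.x and earlier it lives in a separate cloudflare_filter resource referenced through filter_id. Cloudflare has also deprecated legacy Firewall Rules in favor of WAF custom rules, so the example below expresses a basic block rule as a custom rule in a cloudflare_ruleset resource instead. It uses provider 5.x syntax; 4.x declares each rule as a nested rules block.

    resource "cloudflare_ruleset" "example" {
      zone_id = "YOUR_ZONE_ID"
      name    = "Custom firewall rules"
      kind    = "zone"
      phase   = "http_request_firewall_custom"
      rules = [{
        action      = "block"
        expression  = "ip.src eq 192.0.2.1"
        description = "Block traffic from 192.0.2.1"
      }]
    }

A zone has a single entry-point ruleset per phase, so additional rules are added as further elements of rules rather than as separate resources. Expressions can combine fields with operators such as and, or, and not to build more precise matches.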
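As a rough illustration of a more complex expression, the sketch below builds one in a local value. The local name block_expression is hypothetical, and the address ranges are documentation-only examples; ip.src and http.request.uri.path are fields of Cloudflare's Rules language.

```hcl
locals {
  # Hypothetical name. Matches requests from two example IP ranges
  # unless they target the /health path.
  block_expression = "(ip.src in {192.0.2.0/24 198.51.100.0/24}) and not (http.request.uri.path eq \"/health\")"
}
```

The value can then be referenced as local.block_expression wherever a rule's expression is set, which keeps long expressions readable and reusable across rules.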

Utilizing the Cloudflare Terraform Provider: Best Practices

For effective and secure management of your infrastructure, adopt these best practices:

- Modularize your Terraform code: Break down large configurations into smaller, manageable modules.

- Version control your Terraform code: Use Git or a similar version control system to track changes and facilitate collaboration.

- Securely store your API token: Avoid hardcoding your API token directly into your Terraform files. Use environment variables or a secrets management solution instead.

- Use a state management system: Store your Terraform state in a remote backend (e.g., AWS S3, Azure Blob Storage) for collaboration and redundancy.

- Test your Terraform configurations before deploying: Run `terraform validate` to catch syntax and type errors and `terraform plan` to preview changes, and review the plan before running `terraform apply` against your production environment.
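Two of the practices above, remote state and modules, can be sketched as follows. The bucket name, state key, region, and module path are hypothetical placeholders, and the module is assumed to declare a zone_id input variable.

```hcl
terraform {
  # Remote state in S3; the bucket must already exist.
  backend "s3" {
    bucket  = "example-terraform-state" # hypothetical bucket name
    key     = "cloudflare/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true
  }
}

# Hypothetical local module that wraps the DNS records for one zone.
module "dns" {
  source  = "./modules/dns"
  zone_id = "YOUR_ZONE_ID"
}
```

Keeping each zone's resources behind a module call like this makes it straightforward to reuse the same definitions for staging and production zones.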

Frequently Asked Questions

What are the prerequisites for using the Cloudflare Terraform provider?

You need a Cloudflare account, a Cloudflare API token, and Terraform installed on your system. Familiarity with Terraform’s configuration language is highly beneficial.

How can I troubleshoot issues with the Cloudflare Terraform provider?

Start with the error message itself, since it usually names the failing resource and the error returned by the Cloudflare API. For more detail, set the TF_LOG environment variable (for example to DEBUG) to enable verbose logging. The official Cloudflare Terraform provider documentation also covers common errors and their solutions.

What is the best way to manage my Cloudflare API token for security?

Avoid hardcoding the API token directly into your Terraform files. Instead, use environment variables or a dedicated secrets management solution such as HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault. These solutions provide enhanced security and centralized management of sensitive information.

Can I use the Cloudflare Terraform Provider for other Cloudflare products?

The Cloudflare Terraform provider supports a wide range of Cloudflare services. Check the official documentation for the latest list of supported resources. New integrations are continually added.

How do I update the Cloudflare Terraform Provider to the latest version?

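To update the provider, adjust the version constraint in the required_providers block of your Terraform configuration file, then run `terraform init -upgrade` so that Terraform selects the newest version the constraint allows and updates the dependency lock file. A plain `terraform init` keeps the version already recorded in the lock file. Review the provider’s changelog first, because major versions can rename resources and attributes.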
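How far an upgrade can go depends on the constraint operator. The sketch below shows the pessimistic operator (~>) with comments describing what each form permits; the 5.x version numbers are used purely as an example.

```hcl
terraform {
  required_providers {
    cloudflare = {
      source = "cloudflare/cloudflare"

      # "~> 5.0"   allows any 5.x release, but not 6.0
      # "~> 5.1.0" allows only 5.1.x patch releases
      version = "~> 5.0"
    }
  }
}
```

A tighter constraint trades automatic fixes for predictability, so teams often pin more narrowly in production than in development.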

Conclusion

The Cloudflare Terraform provider empowers you to automate the management of your Cloudflare infrastructure efficiently and reliably. By leveraging IaC principles, you can streamline your workflows, reduce errors, and ensure consistency in your deployments. Remember to prioritize security and follow the best practices outlined in this article to optimize your use of the Cloudflare Terraform provider. Mastering this tool is a significant step toward achieving a robust and scalable Cloudflare infrastructure.

For further details and the latest updates, refer to the official Cloudflare Terraform Provider documentation and the official Cloudflare documentation. Understanding and implementing these resources will further enhance your ability to manage your cloud infrastructure effectively.