Table of Contents

- 1 Introduction

- 2 Understanding the Terraform State Lock Mechanism

- 3 Common Causes of the Terraform State Lock Error

- 4 Advanced Troubleshooting Techniques

- 4.1 Step 1: Identifying the Lock Holder

- 4.2 Step 2: Forcing Unlock of a Stale Lock

- 4.3 Step 3: Addressing Backend Configuration Issues

- 4.4 Step 4: Dealing with Network Connectivity Issues

- 4.5 Step 5: Preventing Future State Lock Errors

- 4.6 Step 6: Advanced Scenarios and Solutions

- 4.7 Frequently Asked Questions

- 5 Conclusion

Introduction

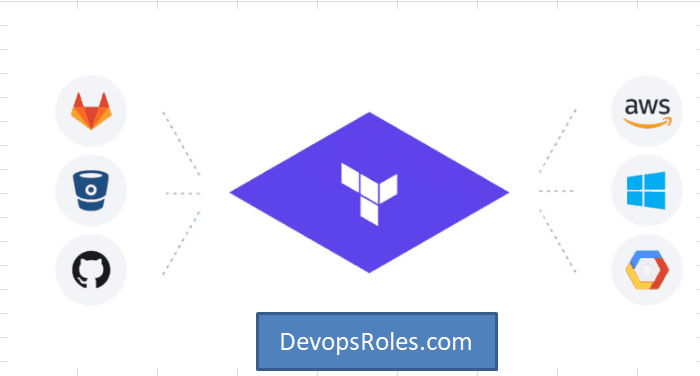

Terraform, a popular Infrastructure as Code (IaC) tool, automates the creation, management, and provisioning of infrastructure. However, one common issue that can disrupt your Terraform workflow is the “Error acquiring the state lock” error. It can cause significant delays, especially when many people or pipelines work on large-scale infrastructure. In this guide, we’ll examine how Terraform state locks work, walk through advanced troubleshooting techniques, and discuss best practices to prevent this error in the future.

Understanding the Terraform State Lock Mechanism

What is Terraform State?

Before diving into state locks, it’s essential to understand what Terraform state is. Terraform state is a critical component that keeps track of the infrastructure managed by Terraform. It maps real-world resources to your configuration, ensuring that Terraform knows the current state of your infrastructure.

The Role of State Locking in Terraform

State locking is a mechanism Terraform uses to prevent concurrent operations on the same state. If the backend supports locking, Terraform acquires a lock before any operation that could write state (such as plan, apply, or destroy) and releases it when the operation finishes, so no other process can modify the state at the same time. This prevents conflicting writes and corruption of the state file.

How State Locking Works

When Terraform acquires a lock, it writes a lock entry to the backend: for example, an item in a DynamoDB table when the S3 backend uses DynamoDB locking, a lock object (.tflock) in the bucket for GCS, or a lock key in Consul. If another process tries to run an operation while the state is locked, it receives the “Error acquiring the state lock” message, indicating that the lock is currently held by another process.
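To make the mechanism concrete, here is a toy model in Python, not Terraform’s implementation: an in-memory table whose acquire step is an atomic “put if absent”, which is the property real lock stores (such as a conditional write in DynamoDB) provide. The class names, state path, and user names are hypothetical; the metadata fields mirror the ones Terraform shows in its lock info. The race() helper starts several workers at the same moment to show that exactly one of them wins.

```python
import threading
import uuid
from datetime import datetime, timezone


class LockError(Exception):
    """Raised when the state is already locked; carries the holder's metadata."""

    def __init__(self, holder: dict) -> None:
        super().__init__(
            f"Error acquiring the state lock: held by {holder['Who']} (ID {holder['ID']})"
        )
        self.holder = holder


class ToyLockTable:
    """Hypothetical in-memory stand-in for a backend lock store."""

    def __init__(self) -> None:
        self._entries: dict = {}
        self._mutex = threading.Lock()  # makes check-then-put a single atomic step

    def acquire(self, path: str, who: str, operation: str) -> str:
        info = {
            "ID": str(uuid.uuid4()),
            "Operation": operation,
            "Who": who,
            "Created": datetime.now(timezone.utc).isoformat(),
            "Path": path,
        }
        with self._mutex:
            if path in self._entries:  # someone else already holds the lock
                raise LockError(self._entries[path])
            self._entries[path] = info
        return info["ID"]

    def release(self, path: str, lock_id: str) -> None:
        with self._mutex:
            current = self._entries.get(path)
            # Removing a lock requires its ID, just as force-unlock does.
            if current is None or current["ID"] != lock_id:
                raise ValueError("lock ID does not match the current lock")
            del self._entries[path]


def race(table: ToyLockTable, path: str, workers: int) -> int:
    """Start several workers at once; return how many acquired the lock."""
    winners = []
    start = threading.Barrier(workers)

    def worker(n: int) -> None:
        start.wait()  # line everyone up so they really try simultaneously
        try:
            table.acquire(path, f"user{n}@host", "OperationTypeApply")
            winners.append(n)
        except LockError:
            pass  # this worker would see "Error acquiring the state lock"

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(winners)
```

The same property explains the simultaneous-operations case below: however many runs start together, only one acquire succeeds.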

Common Causes of the Terraform State Lock Error

Simultaneous Terraform Operations

One of the most straightforward causes of the state lock error is running multiple Terraform operations concurrently. When two or more processes try to acquire the lock simultaneously, only the first one will succeed, while the others will encounter the error.

Stale Locks

Stale locks occur when a previous Terraform operation fails or is interrupted before it can release the lock. This can happen due to network issues, abrupt termination of the Terraform process (for example, a cancelled CI job or a closed terminal session), or a crash in Terraform or one of its providers.

Misconfigured Backend

Sometimes, the error might be caused by misconfigurations in the backend that stores the state file. This could include incorrect permissions, connectivity issues, or even exceeding resource quotas in cloud environments.

Backend Service Issues

Issues with the backend service itself, such as AWS S3, Google Cloud Storage, or HashiCorp Consul, can also lead to the state lock error. These issues might include service outages, throttling, or API rate limits.

Advanced Troubleshooting Techniques

Step 1: Identifying the Lock Holder

Check Lock Metadata

To troubleshoot the error effectively, you first need to know who or what is holding the lock. The quickest source is the error message itself: Terraform prints a “Lock Info” block with the lock’s ID, Path, Operation, Who, Version, and Created fields. You can also inspect the lock entry in the backend. This metadata typically includes details like:

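- Lock ID (ID): A unique identifier for the lock; this is the value terraform force-unlock expects.

- Creation Time (Created): When the lock was acquired.

- Lock Holder (Who and Operation): The user and host that acquired the lock, and the operation it was running (for example, OperationTypeApply).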

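When the S3 backend uses DynamoDB for locking, this metadata is stored as JSON in the Info attribute of the lock item in the DynamoDB table. For GCS, the lock is a .tflock object stored next to the state file, and you can inspect it in the GCS console.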
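If you fetch the raw metadata yourself (for example, the Info attribute of the DynamoDB item or the contents of the GCS .tflock object), a small parser makes it easier to read. This sketch assumes the JSON uses the same field names Terraform prints in its Lock Info block (ID, Operation, Info, Who, Version, Created, Path); the sample values are made up for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class LockInfo:
    id: str
    operation: str
    who: str
    version: str
    created: str
    path: str
    info: str


def parse_lock_info(raw: str) -> LockInfo:
    """Parse Terraform lock metadata JSON; absent fields become empty strings."""
    data = json.loads(raw)
    return LockInfo(
        id=data.get("ID", ""),
        operation=data.get("Operation", ""),
        who=data.get("Who", ""),
        version=data.get("Version", ""),
        created=data.get("Created", ""),
        path=data.get("Path", ""),
        info=data.get("Info", ""),
    )


# Illustrative values only, not taken from a real lock.
SAMPLE = json.dumps({
    "ID": "6c1b2f0e-0000-4000-8000-000000000000",
    "Operation": "OperationTypeApply",
    "Info": "",
    "Who": "ci@runner-7",
    "Version": "1.9.5",
    "Created": "2024-05-01T10:15:30.123456789Z",
    "Path": "my-bucket/prod/terraform.tfstate",
})

if __name__ == "__main__":
    lock = parse_lock_info(SAMPLE)
    print(f"{lock.who} has held {lock.path} for {lock.operation} since {lock.created}")
```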

Analyzing Lock Metadata

Once you have access to the lock metadata, analyze it to determine if the lock is stale or if another user or process is actively using it. If the lock is stale, you can proceed to force unlock it. If another process is holding the lock, you might need to wait until that process completes or coordinate with the user.
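Deciding whether a lock is stale is a judgment call, but the Created timestamp gives a first signal. The sketch below flags locks older than a threshold you choose. The one-hour threshold in the tests is an arbitrary assumption, and a long-running apply can legitimately hold a lock for longer, so always confirm that the process named in Who has actually stopped before unlocking.

```python
import re
from datetime import datetime, timedelta, timezone

# RFC 3339 timestamp with optional fractional seconds and a Z or +hh:mm offset.
_RFC3339 = re.compile(
    r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?(Z|[+-]\d{2}:\d{2})$"
)


def parse_created(value: str) -> datetime:
    """Parse a timestamp such as the lock's Created field.

    Python's datetime stores only microseconds, so extra fractional digits
    (Go can emit nanoseconds) are truncated to six.
    """
    match = _RFC3339.match(value)
    if match is None:
        raise ValueError(f"unrecognized timestamp: {value!r}")
    base, fraction, offset = match.groups()
    fraction = (fraction or "0")[:6].ljust(6, "0")
    offset = "+00:00" if offset == "Z" else offset
    return datetime.fromisoformat(f"{base}.{fraction}{offset}")


def looks_stale(created: str, now: datetime, max_age: timedelta) -> bool:
    """Heuristic only: True if the lock is older than max_age."""
    return now - parse_created(created) > max_age
```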

Step 2: Forcing Unlock of a Stale Lock

Force Unlock Command

Terraform provides a built-in command to forcefully unlock a state file:

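terraform force-unlock <lock-id>

Replace <lock-id> with the actual lock ID from the metadata, and run the command from the same configuration directory and workspace whose state is locked. The command removes the lock, allowing other processes to acquire it. Be cautious with this command, especially if you’re unsure whether the lock is still in use: if the original process is still running, two processes could write the state at the same time and corrupt it.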
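If you script this step, a guard that refuses to unlock until someone has confirmed the lock holder has stopped helps avoid the corruption risk described above. The helper names below are hypothetical; the command line they build is terraform force-unlock with its -force option, which skips the interactive confirmation prompt.

```python
import subprocess
from typing import List


def force_unlock_command(
    lock_id: str, holder_confirmed_gone: bool, skip_prompt: bool = False
) -> List[str]:
    """Build argv for `terraform force-unlock`, refusing unless the caller has
    confirmed that the process holding the lock is no longer running."""
    if not holder_confirmed_gone:
        raise RuntimeError("refusing to force-unlock: confirm the lock holder has stopped")
    if not lock_id.strip():
        raise ValueError("lock ID must not be empty")
    argv = ["terraform", "force-unlock"]
    if skip_prompt:
        argv.append("-force")  # options go before the lock ID
    argv.append(lock_id)
    return argv


def force_unlock(lock_id: str, holder_confirmed_gone: bool, workdir: str = ".") -> None:
    """Run the command from the configuration directory that owns the state."""
    argv = force_unlock_command(lock_id, holder_confirmed_gone, skip_prompt=True)
    subprocess.run(argv, cwd=workdir, check=True)
```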

Manual Unlocking

In rare cases, you might need to manually delete the lock entry from the backend. For AWS S3 with DynamoDB locking, delete the lock record from the DynamoDB table. For GCS, remove the lock file (the .tflock object next to the state file) from the bucket.
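Both manual cases can be sketched with the AWS CLI and gcloud, wrapped here in hypothetical Python helpers that only build the argument lists. They assume the S3 backend’s lock item has a LockID of the form <bucket>/<state key> (where the state key is the full object key, including any workspace prefix) and that the GCS lock object is named <prefix>/<workspace>.tflock. The DynamoDB table also holds a companion item whose LockID ends in -md5 and stores the state checksum; that item is not the lock and should be left in place. Verify the exact names against your own table or bucket before deleting anything.

```python
import json
import posixpath
from typing import List


def dynamodb_delete_lock_command(table: str, bucket: str, state_key: str) -> List[str]:
    """AWS CLI argv that deletes the S3 backend's lock item (not the -md5 item)."""
    key = {"LockID": {"S": f"{bucket}/{state_key}"}}
    return [
        "aws", "dynamodb", "delete-item",
        "--table-name", table,
        "--key", json.dumps(key),
    ]


def gcs_delete_lock_command(bucket: str, prefix: str, workspace: str = "default") -> List[str]:
    """gcloud argv that removes the GCS backend's lock object, assumed to sit
    next to the state as <prefix>/<workspace>.tflock."""
    obj = posixpath.join(prefix, f"{workspace}.tflock")
    return ["gcloud", "storage", "rm", f"gs://{bucket}/{obj}"]
```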

Step 3: Addressing Backend Configuration Issues

Verify Backend Configuration

Backend configuration issues are another common cause of the state lock error. Double-check the backend block inside your terraform block (often kept in a file such as backend.tf) to ensure that everything is configured correctly.

For example, in AWS, ensure that:

- The S3 bucket exists and is accessible.

- The DynamoDB table for state locking exists, has a partition key named LockID of type String, and your credentials are allowed to read, write, and delete items in it.

- Your AWS credentials are properly set up and have the required IAM policies.

In GCP, ensure that:

- The GCS bucket is correctly configured and accessible.

- The service account used by Terraform has the necessary permissions to read and write to the bucket.
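Part of the AWS checklist can be automated before running Terraform. This hypothetical helper checks a mapping of S3 backend settings for missing values; the keys bucket, key, region, dynamodb_table, and use_lockfile follow the S3 backend’s argument names. It only inspects the configuration: it cannot tell whether the bucket or table exists or whether your credentials have the right IAM permissions, and treating region as required is an assumption that matches common setups.

```python
from typing import Dict, List


def check_s3_backend(settings: Dict[str, object]) -> List[str]:
    """Return a list of problems found in an S3 backend settings mapping.

    Presence checks only; this does not contact AWS.
    """
    problems = []
    for required in ("bucket", "key", "region"):
        if not settings.get(required):
            problems.append(f"missing required setting: {required}")
    # Without one of these, the backend stores state but never locks it.
    if not settings.get("dynamodb_table") and not settings.get("use_lockfile"):
        problems.append("no locking configured: set dynamodb_table or use_lockfile")
    return problems
```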

Check Backend Service Status

Occasionally, the issue might not be with your configuration but with the backend service itself. Check the status of the service you’re using (e.g., AWS S3, Google Cloud Storage, or Consul) to ensure there are no ongoing outages or disruptions.

Step 4: Dealing with Network Connectivity Issues

Network Troubleshooting

Network connectivity issues between Terraform and the backend can also cause the state lock error. If you’re working in a cloud environment, ensure that your network configuration allows for communication between Terraform and the backend services.

Common network issues to check:

- Firewall Rules: Ensure that Terraform can reach the backend service, typically over HTTPS (port 443) for S3, DynamoDB, and GCS, or on the Consul HTTP API port (8500 by default).

- VPN Connections: If you’re using a VPN, verify that it’s not interfering with Terraform’s ability to connect to the backend.

- Proxy Settings: If you’re behind a proxy, ensure that Terraform is configured to use it, typically through the HTTPS_PROXY and NO_PROXY environment variables.
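A basic reachability test can separate network problems from backend or lock problems. This sketch opens a plain TCP connection with a timeout. A direct connection can fail behind a proxy even when Terraform itself works through the proxy, so interpret a failure with that in mind.

```python
import socket


def can_reach(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Checks only DNS, routing, and firewall reachability; it does not verify
    TLS, credentials, or proxy configuration.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refusals, and timeouts
        return False
```

For example, can_reach("s3.us-east-1.amazonaws.com") checks the S3 endpoint in us-east-1; substitute the region and endpoints your backend actually uses.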

Retry Logic

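By default, Terraform does not wait for a lock: the -lock-timeout option defaults to 0s, so the command fails as soon as it finds the state locked. Passing a duration (for example, terraform apply -lock-timeout=5m) makes Terraform keep retrying the lock for that long. If you suspect that the error is due to transient network issues, simply retrying the operation after a few minutes might also resolve it.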
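When Terraform runs inside your own scripts, an outer retry with exponential backoff can ride out brief network blips. This is a generic sketch, not a Terraform feature. It retries every exception of the type you pass, so a real wrapper would check the error output for a lock or network failure before retrying, and -lock-timeout remains the simpler option for waiting on a held lock.

```python
import random
import time
from typing import Callable, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    attempt: Callable[[], T],
    retry_on: Type[BaseException] = Exception,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call attempt() until it succeeds, sleeping with exponential backoff and
    jitter between failures; re-raise the last error after max_attempts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for n in range(1, max_attempts + 1):
        try:
            return attempt()
        except retry_on:
            if n == max_attempts:
                raise
            delay = min(max_delay, base_delay * 2 ** (n - 1))
            sleep(delay * random.uniform(0.5, 1.0))  # jitter avoids retry stampedes
    raise RuntimeError("unreachable")
```

For example, retry_with_backoff(lambda: subprocess.run(["terraform", "plan", "-lock-timeout=2m"], check=True), retry_on=subprocess.CalledProcessError) would retry a failing plan up to five times.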

Step 5: Preventing Future State Lock Errors

Implementing State Locking Best Practices

Use Remote Backends

One of the best ways to avoid state conflicts is to use a shared remote backend that supports locking, such as AWS S3, Google Cloud Storage, or HashiCorp Consul. Every run then works against one central state file and one lock, so concurrent runs are blocked instead of silently overwriting each other’s changes.

Use DynamoDB for Locking

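If you’re using AWS S3 as your backend, consider enabling DynamoDB for state locking through the backend’s dynamodb_table argument. DynamoDB’s conditional writes ensure that only one process can acquire the lock at a time. Newer Terraform releases (1.10 and later) can also store the lock as a file in the S3 bucket itself through the use_lockfile argument; check the S3 backend documentation for the version you run.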
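The lock table itself is simple. Assuming you create it with boto3, the parameters look like the sketch below: the partition key must be named LockID and typed String, while on-demand billing is just one reasonable choice. The table name is hypothetical, and in practice you would more likely manage this table with Terraform itself.

```python
def lock_table_spec(table_name: str) -> dict:
    """Keyword arguments in the shape of boto3's DynamoDB create_table call
    for a Terraform lock table."""
    return {
        "TableName": table_name,
        # The S3 backend looks up lock items by a String key named LockID.
        "AttributeDefinitions": [{"AttributeName": "LockID", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "LockID", "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand; provisioned also works
    }
```

With boto3 installed, boto3.client("dynamodb").create_table(**lock_table_spec("terraform-locks")) would create the table.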

Coordinate Terraform Runs

To prevent simultaneous access, implement a system where Terraform runs are coordinated. This could be through manual coordination, CI/CD pipelines, or using tools like Terraform Cloud or Terraform Enterprise, which provide features for managing and serializing Terraform operations.

Automation Tools and Lock Management

Terraform Enterprise and Terraform Cloud

Terraform Enterprise and Terraform Cloud offer advanced features for state management, including automated lock management: runs in a workspace are queued and executed one at a time, and workspaces can also be locked manually. These features help prevent issues caused by concurrent operations.

CI/CD Pipeline Integration

Integrating Terraform with your CI/CD pipeline can also help manage state locks. By automating Terraform runs and ensuring they are serialized, you can reduce the risk of encountering state lock errors.
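The serialization idea can be sketched as a per-state-key guard inside a hypothetical pipeline runner: jobs that share a state key run one after another, while jobs for different state keys can still run in parallel. Most CI systems offer an equivalent built-in concurrency setting, which is usually preferable; this is only a model of the behavior.

```python
import threading
from collections import defaultdict
from typing import Callable, Dict


class RunSerializer:
    """At most one job per state key runs at a time; different keys run freely."""

    def __init__(self) -> None:
        self._guard = threading.Lock()
        self._locks: Dict[str, threading.Lock] = defaultdict(threading.Lock)

    def _lock_for(self, state_key: str) -> threading.Lock:
        with self._guard:  # make lock creation for a new key race-free
            return self._locks[state_key]

    def run(self, state_key: str, job: Callable[[], None]) -> None:
        with self._lock_for(state_key):  # waits for earlier jobs on this key
            job()
```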

Step 6: Advanced Scenarios and Solutions

Scenario 1: Lock Issues in a Multi-Region Setup

In a multi-region setup, state lock errors and conflicts can occur if teams or pipelines in different regions point at different copies of the state (for example, a replicated bucket) or at different lock tables. To avoid this, keep a single authoritative backend per state (one bucket and one lock table), and have every region use that same centralized locking mechanism, such as DynamoDB.

Scenario 2: Handling Large Scale Deployments

In large-scale deployments, state lock errors can become more frequent due to the higher volume of Terraform operations. To manage this, consider breaking down your infrastructure into smaller, modular components with separate state files. This reduces the likelihood of conflicts and makes it easier to manage state locks.

Frequently Asked Questions

What is the impact of a stale state lock on Terraform operations?

A stale state lock blocks every operation that needs to lock that state, such as plan and apply, effectively halting management of the infrastructure it tracks. It’s crucial to resolve stale locks quickly to restore normal operations.

Can I automate the resolution of state lock errors?

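Yes, to a degree. Integrating Terraform with CI/CD pipelines or using Terraform Enterprise/Cloud serializes runs and reduces how often locks are left behind, which cuts down on manual intervention. Automatically force-unlocking is riskier; if you script it, do so only after confirming that the lock holder is no longer running.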

How do I avoid Terraform state lock errors in a team environment?

To avoid state lock errors in a team environment, use remote backends, implement locking mechanisms like DynamoDB, and coordinate Terraform runs to prevent simultaneous access.

What should I do if terraform force-unlock doesn’t resolve the issue?

If terraform force-unlock fails, you may need to manually remove the lock from the backend (e.g., delete the lock record from DynamoDB or the lock file from GCS). Ensure that no other processes are running before doing this to avoid state corruption.

Conclusion

The “Error acquiring the state lock” error in Terraform is common yet manageable. By understanding its underlying causes and applying the troubleshooting techniques in this guide, you can resolve it effectively and maintain a smooth Terraform workflow, keeping your infrastructure management consistent and reliable. Thank you for reading the DevopsRoles page!
