
Introduction

How to Create a Simple Docker Data Science Image

- Docker offers an efficient way to establish a Python data science environment, ensuring a smooth workflow from development to deployment.

- Begin by creating a Dockerfile that precisely defines the environment’s specifications, dependencies, and configurations, serving as a blueprint for the Docker data science image.

- Use the `docker build` command to build the image from the Dockerfile, your data science code, and a requirements file listing the libraries you need.

- Once the image is built, start a container from it to run your analysis in the same environment on every system.

- Docker simplifies sharing your work by packaging the entire environment, which avoids many of the compatibility issues that arise from differing setups.

- For larger-scale collaboration or deployment needs, Docker Hub provides a platform to store and distribute your Docker images.

- Pushing your image to Docker Hub makes it readily available to colleagues and collaborators, who can pull it into their own workflows.

- Setting up, building, sharing, and deploying a Python data science environment this way improves reproducibility, makes collaboration easier, and streamlines deployment.

Why Docker for Data Science?

Here are some reasons why Docker is commonly used in the data science field:

- Reproducibility: Docker allows you to package your entire data science environment, including dependencies, libraries, and configurations, into a single container. This ensures that your work can be reproduced exactly as you intended, even across different machines or platforms.

- Isolation: Docker containers provide a level of isolation, ensuring that the dependencies and libraries used in one project do not interfere with those used in another. This is especially important in data science, where different projects might require different versions of the same library.

- Portability: With Docker, you can package your entire data science stack into a container, making it easy to move your work between different environments, such as from your local machine to a cloud server. This is crucial for collaboration and deployment.

- Dependency Management: Managing dependencies in traditional environments can be challenging and error-prone. Docker simplifies this process by allowing you to specify dependencies in a Dockerfile, ensuring consistent and reliable setups.

- Version Control: Docker images can be versioned with tags (for example, data-science-image:1.0), allowing you to track changes to your environment over time. This is especially helpful when sharing projects with collaborators or when you need to reproduce an older version of your work.

- Collaboration: Docker images can be easily shared with colleagues or the broader community. Instead of providing a list of instructions for setting up an environment, you can share a Docker image that anyone can run without worrying about setup complexities.

- Easy Setup: Docker simplifies the process of setting up complex environments. Once the Docker image is created, anyone can run it on their system with minimal effort, eliminating the need to manually install libraries and dependencies.

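- Security: Docker containers provide a degree of isolation, which can enhance security by limiting unwanted interactions between your data science environment and your host system. Containers share the host's kernel, however, so this isolation is weaker than that of a virtual machine.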

- Scalability: Docker containers can be orchestrated and managed using tools like Kubernetes, allowing you to scale your data science applications efficiently, whether you’re dealing with large datasets or resource-intensive computations.

- Consistency: Docker helps ensure that the environment you develop in is the same environment you’ll deploy to. This reduces the likelihood of “it works on my machine” issues.
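To make the versioning point concrete, here is a minimal sketch that assumes the project directory and Dockerfile built in the steps that follow. A single build can receive both an explicit version tag and `latest`. The helper name `build_versioned` and the version numbers are illustrative, not part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: tag each build of the environment with an explicit version plus "latest".
# build_versioned is a hypothetical helper name; the versions below are examples.

build_versioned() {
  local version="$1"
  # docker build accepts several -t flags, so a single build gets both tags.
  # Run this from the project directory that contains the Dockerfile.
  docker build -t "data-science-image:${version}" -t data-science-image:latest .
}

# Usage:
#   build_versioned 1.0                              # first environment
#   build_versioned 1.1                              # after editing requirements.txt
#   docker run -p 8888:8888 data-science-image:1.0   # reproduce the older environment
```

Old tags remain available locally until you delete them, so an earlier analysis can be rerun in exactly the environment it was written for.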

Create A Simple Docker Data Science Image

Step 1: Create a Project Directory

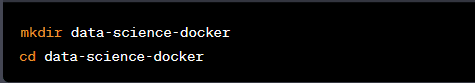

Create a new directory for your Docker project and navigate into it:

```shell
mkdir data-science-docker
cd data-science-docker
```

Step 2: Create a Dockerfile

Create a file named Dockerfile (with no file extension) in the project directory. This file contains the instructions for building the Docker image. You can use any text editor you prefer.

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.8-slim

# Set the working directory to /app
WORKDIR /app

# Copy only requirements.txt first, so the dependency layer below is
# reused from cache when just your code or notebooks change
COPY requirements.txt /app/

# Install any needed packages specified in requirements.txt
RUN pip install --no-cache-dir -r requirements.txt

# Copy the rest of the current directory into the container at /app
COPY . /app

# Document that Jupyter listens on port 8888 (publish it with -p at run time)
EXPOSE 8888

# Define an environment variable
ENV NAME=DataScienceContainer

# Run Jupyter Notebook when the container launches
CMD ["jupyter", "notebook", "--ip=0.0.0.0", "--port=8888", "--no-browser", "--allow-root"]
```
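Because `COPY . /app` copies everything in the build context, files you do not need (Git metadata, notebook checkpoints, saved image archives) also end up in the image. A `.dockerignore` file in the project directory excludes them from the context. The sketch below writes one with a shell heredoc. The patterns are suggestions to adapt to your own project, not a required list.

```shell
#!/usr/bin/env bash
# Sketch: create a .dockerignore in the project directory (data-science-docker).
# The patterns are example choices; adjust them to your project layout.
cat > .dockerignore <<'EOF'
# Version-control metadata is not needed inside the image
.git
# Notebook autosave folders and Python bytecode caches, at any depth
**/.ipynb_checkpoints
**/__pycache__
# Image archives in the project root, such as one created with docker save
*.tar
EOF
```

Patterns in `.dockerignore` are matched relative to the root of the build context, which is why the cache folders use `**/` to match at any depth.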

Step 3: Create requirements.txt

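Create a file named requirements.txt in the same directory and list the Python libraries you want to install. For this example, we'll include pandas and NumPy, plus the notebook package, which provides the jupyter notebook command that the Dockerfile's CMD runs. Without it, the container fails to start because the jupyter executable cannot be found.

```text
pandas==1.3.3
numpy==1.21.2
notebook
```

For fully reproducible builds, pin notebook to a specific version as well.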
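Exact `==` pins are what make rebuilding this image repeatable. As a small sketch, the hypothetical shell function below lists every requirement that is not pinned with `==`, skipping blank lines and comments. It does not understand other pip syntax such as `-r` includes.

```shell
#!/usr/bin/env bash
# Sketch: list requirements that are not pinned to an exact version.
# unpinned_requirements is a hypothetical helper name.

unpinned_requirements() {
  local file="${1:-requirements.txt}"
  # Drop blank lines and comment lines, then keep lines that lack "=="
  grep -Ev '^[[:space:]]*(#|$)' "$file" | grep -v '==' || true
}

# Usage: prints nothing when every package is pinned
#   unpinned_requirements requirements.txt
```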

Step 4: Build the Docker Image

Open a terminal and navigate to the project directory (data-science-docker). Run the following command to build the Docker image:

```shell
docker build -t data-science-image .
```

Step 5: Run the Docker Container

After the image is built, you can run a container based on it:

```shell
docker run -p 8888:8888 -v $(pwd):/app --name data-science-container data-science-image
```

Here:

- `-p 8888:8888` publishes the container's port 8888 on port 8888 of the host (the format is host:container).

- `-v $(pwd):/app` mounts the current host directory at /app in the container, so notebooks you save in Jupyter are written to your project directory on the host.

- `--name data-science-container` assigns a name to the running container.

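In your terminal, you'll see a URL with a token. Copy the one that starts with http://127.0.0.1:8888/ and paste it into your web browser to open the Jupyter Notebook interface (the other URL uses the container's hostname, which your host machine usually cannot resolve). From there you can start working with data science libraries like NumPy and pandas.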
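If you would rather keep your terminal free, you can run the container in the background with `-d` and read the URL from its log with `docker logs`. The sketch below wraps that in two hypothetical helper functions. The five-second default wait is an assumption about how long Jupyter takes to start.

```shell
#!/usr/bin/env bash
# Sketch: start the container in the background and print the Jupyter URL.
# jupyter_url and start_detached are hypothetical helper names.

# Print the first Jupyter URL on 127.0.0.1 found in log text read from stdin
jupyter_url() {
  grep -oE 'http://127\.0\.0\.1:8888/[^[:space:]]*' | head -n 1
}

# Run the container detached (-d), wait for Jupyter to start, then read its log.
# The wait time (default 5 seconds) is an assumption; raise it on slow machines.
start_detached() {
  local wait_seconds="${1:-5}"
  docker run -d -p 8888:8888 -v "$(pwd)":/app \
    --name data-science-container data-science-image >/dev/null
  sleep "$wait_seconds"
  # Jupyter writes its startup messages to stderr, so merge it into stdout
  docker logs data-science-container 2>&1 | jupyter_url
}
```

When you are finished, stop and remove the container with `docker stop data-science-container` followed by `docker rm data-science-container`. Otherwise, the next `docker run` that uses the same `--name` fails because the name is already in use.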

Remember, this is a simple example. Depending on your specific requirements, you might need to add more configurations, libraries, or dependencies to your Docker image.
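requirements.txt pins only pandas and NumPy, not their own dependencies such as python-dateutil and pytz. As you add libraries, one way to capture every installed version is to run `pip freeze` inside the built image. This is a sketch, and `freeze_image` and `requirements.lock.txt` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: record the exact version of every package installed in the image,
# including transitive dependencies that requirements.txt does not pin.
# freeze_image and requirements.lock.txt are hypothetical names.

freeze_image() {
  local image="${1:-data-science-image}"
  local out="${2:-requirements.lock.txt}"
  # --rm removes the throwaway container as soon as pip freeze exits
  docker run --rm "$image" pip freeze > "$out"
  echo "Wrote $(wc -l < "$out") pinned packages to $out"
}
```

If you point the Dockerfile's `pip install -r` step at the lock file instead of requirements.txt, later builds reinstall the same versions.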

Step 6: Sharing and Deploying the Image

To save the image to a tar archive:

```shell
docker save -o data-science-image.tar data-science-image
```

This archive can then be loaded on any other system with Docker installed:

```shell
docker load -i data-science-image.tar
```

Push the image to Docker Hub to share it with others, either publicly or privately within an organization.

To push the image to Docker Hub:

- Create a Docker Hub account if you don't already have one.

- Log in to Docker Hub from the command line with `docker login`.

- Tag the image with your Docker Hub username: `docker tag data-science-image yourusername/data-science-image`

- Push the image: `docker push yourusername/data-science-image`

The image is now hosted on Docker Hub as yourusername/data-science-image. Other users can pull it by running:

```shell
docker pull yourusername/data-science-image
```

Conclusion

Creating a simple Docker data science image addresses several common problems in the field. By encapsulating the entire data science environment in a Docker container, practitioners can make their work reproducible, keeping it consistent across different systems and environments. The isolation and dependency management that Docker provides also keep conflicting library versions in check, making projects more stable.

I hope you found this helpful. Thank you for reading the DevopsRoles page!