Prompt engineering, the art of crafting effective prompts for large language models (LLMs), is revolutionizing how we interact with AI. For tech professionals like DevOps engineers, cloud engineers, and database administrators, mastering prompt engineering unlocks significant potential for automation, enhanced efficiency, and problem-solving. This article compares nine leading prompt engineering tools, highlighting their strengths and weaknesses to help you choose the best fit for your needs.

Why Prompt Engineering Matters for Tech Professionals

In today’s fast-paced tech landscape, automation and efficiency are paramount. Prompt engineering allows you to leverage the power of LLMs for a wide range of tasks, including:

- Automating code generation: Quickly generate code snippets, scripts, and configurations.

- Improving code quality: Use LLMs to identify bugs, suggest improvements, and refactor code.

- Streamlining documentation: Generate documentation automatically from code or other sources.

- Automating system administration tasks: Automate routine tasks like log analysis, system monitoring, and incident response.

- Enhancing security: Detect potential vulnerabilities in code and configurations.

- Improving collaboration: Facilitate communication and knowledge sharing among team members.

Choosing the right prompt engineering tool can significantly impact your productivity and the success of your projects.

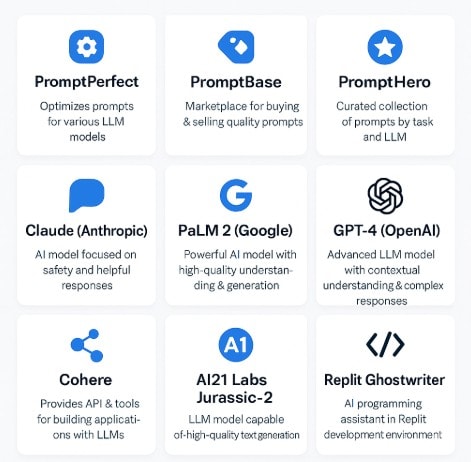

Comparing 9 Prompt Engineering Tools

The landscape of prompt engineering tools is constantly evolving. This comparison focuses on nine tools representing different approaches and capabilities. Note that the specific features and pricing may change over time. Always check the official websites for the latest information.

1. PromptPerfect

PromptPerfect focuses on optimizing prompts for various LLMs. It analyzes prompts, provides suggestions for improvement, and helps you iterate towards better results. It’s particularly useful for refining prompts for specific tasks, like code generation or data analysis.

2. PromptBase

PromptBase is a marketplace for buying and selling prompts. This is a great resource for finding pre-built, high-quality prompts that you can adapt to your specific needs. You can also sell your own prompts, creating a revenue stream.

3. PromptHero

Similar to PromptBase, PromptHero provides a curated collection of prompts categorized by task and LLM. It’s a user-friendly platform for discovering ready-made prompts and experimenting with different approaches.

4. Anthropic’s Claude

While not strictly a “prompt engineering tool,” Claude handles complex prompts well, which makes it a valuable asset. Its focus on safety and helpfulness tends to produce more reliable and predictable outputs than some other models.

5. Google’s PaLM 2

PaLM 2, which powers many Google services, offers strong prompt understanding and response generation. It is available through various Google Cloud services, so it can be integrated readily into existing workflows.

6. OpenAI’s GPT-4

GPT-4, a leading LLM, offers powerful capabilities for prompt engineering, but requires careful prompt crafting to achieve optimal results. Its advanced understanding of context and nuance allows for complex interactions.

7. Cohere

Cohere provides APIs and tools for building applications with LLMs. While not a dedicated prompt engineering tool, its comprehensive platform facilitates experimentation and iterative prompt refinement.

8. AI21 Labs Jurassic-2

Jurassic-2 is a powerful LLM with strong performance across various tasks. As with other LLMs, effective prompt engineering is crucial to unlock its full potential. Its APIs make it easy to integrate into custom applications.

9. Replit Ghostwriter

Replit Ghostwriter integrates directly into the Replit coding environment, offering on-the-fly code generation and assistance based on prompts. This tightly integrated approach streamlines the workflow for developers.

Use Cases and Examples

Automating Code Generation

Let’s say you need to generate a Python script to parse a CSV file. Instead of writing the script from scratch, you could use a prompt engineering tool like PromptPerfect to refine your prompt, ensuring the LLM generates the correct code. For example:

Poor Prompt: “Write a Python script.”

Improved Prompt (using PromptPerfect): “Write a Python script to parse a CSV file named ‘data.csv’, extract the ‘Name’ and ‘Age’ columns, and print the results to the console. Handle potential errors gracefully.”
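To make the difference concrete, here is a minimal sketch of the kind of script the improved prompt is asking for. It is not the output of any particular tool or model; the function names `extract_name_age` and `main` are illustrative, and the error-handling choices (report to stderr, return a non-zero status) are assumptions about what "gracefully" should mean.

```python
import csv
import sys


def extract_name_age(path="data.csv"):
    """Return (name, age) pairs from the CSV file at `path`.

    Raises ValueError if the 'Name' or 'Age' column is missing.
    """
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        fieldnames = reader.fieldnames or []
        missing = [col for col in ("Name", "Age") if col not in fieldnames]
        if missing:
            raise ValueError(f"missing column(s): {', '.join(missing)}")
        return [(row["Name"], row["Age"]) for row in reader]


def main(path="data.csv"):
    """Print each name and age; return 0 on success, 1 on a handled error."""
    try:
        rows = extract_name_age(path)
    except FileNotFoundError:
        print(f"error: {path} not found", file=sys.stderr)
        return 1
    except (ValueError, csv.Error) as exc:
        print(f"error: could not parse {path}: {exc}", file=sys.stderr)
        return 1
    for name, age in rows:
        print(f"{name}: {age}")
    return 0
```

Calling `main()` reads `data.csv` from the current directory. Every detail in the improved prompt (file name, column names, output destination, error handling) maps to a specific line of code, which is why the vague prompt cannot reliably produce it.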

Improving Code Quality

You can use LLMs to improve existing code. Provide a code snippet as a prompt and ask the LLM to identify potential bugs or suggest improvements. For example, you could ask: “Analyze this code snippet and suggest improvements for readability and efficiency: [Insert your code here]”
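If you send review requests often, it can help to build the prompt from a template rather than retyping it. The sketch below is one possible approach; `REVIEW_TEMPLATE`, `build_review_prompt`, and the `<code>` delimiters are hypothetical choices for illustration, not part of any tool listed above.

```python
# Hypothetical template: the wording follows the example prompt above,
# with an added request for line numbers to make the answer easier to act on.
REVIEW_TEMPLATE = (
    "Analyze this {language} code snippet and suggest improvements "
    "for readability and efficiency.\n"
    "List each issue with its line number and a suggested fix.\n\n"
    "<code>\n{code}\n</code>"
)


def build_review_prompt(code, language="Python"):
    """Return a review prompt with the snippet placed between <code> tags."""
    snippet = code.strip("\n")
    if not snippet.strip():
        raise ValueError("code snippet is empty")
    return REVIEW_TEMPLATE.format(language=language, code=snippet)
```

The returned string can be pasted into a chat interface or passed to whichever LLM API you use. Delimiting the snippet keeps the instructions and the code visibly separate, which makes the request less ambiguous.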

Automating System Administration Tasks

Prompt engineering can automate tasks like log analysis. You could feed log files to an LLM and prompt it to identify errors or security issues. For example: “Analyze this log file [path/to/logfile] and identify any suspicious activity or errors related to database access.”
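In practice a model cannot open a file path on its own, so the log text has to be included in the prompt, and large logs usually need trimming first. The following is a rough sketch of that preparation step. The keyword list, the 200-line cap, the `<log>` delimiters, and the function names are all assumptions chosen for illustration.

```python
# Hypothetical keywords; tune these to the log format you actually have.
KEYWORDS = ("error", "denied", "failed", "timeout")


def select_log_lines(log_text, keywords=KEYWORDS, max_lines=200):
    """Keep lines containing any keyword (case-insensitive).

    Returns at most `max_lines` lines, preferring the most recent ones.
    """
    if max_lines <= 0:
        return []
    matches = [
        line for line in log_text.splitlines()
        if any(word in line.lower() for word in keywords)
    ]
    return matches[-max_lines:]


def build_log_prompt(log_text, max_lines=200):
    """Build the analysis prompt around a filtered log excerpt."""
    lines = select_log_lines(log_text, max_lines=max_lines)
    excerpt = "\n".join(lines) if lines else "(no matching lines)"
    return (
        "Analyze this log excerpt and identify any suspicious activity "
        "or errors related to database access.\n\n"
        f"<log>\n{excerpt}\n</log>"
    )
```

Filtering before prompting keeps the request small and focused. Keyword filtering can miss relevant lines, so treat it as a starting point, and remove secrets or personal data from the excerpt before sending it to an external service.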

Frequently Asked Questions (FAQ)

Q1: What are the key differences between the various prompt engineering tools?

The main differences lie in their focus, features, and pricing models. Some, like PromptBase and PromptHero, are marketplaces for prompts. Others, like PromptPerfect, focus on optimizing prompts. LLMs like GPT-4 and PaLM 2 provide powerful underlying models, but require more hands-on prompt engineering. Tools like Replit Ghostwriter integrate directly into development environments.

Q2: How do I choose the right prompt engineering tool for my needs?

Consider your specific requirements. If you need pre-built prompts, a marketplace like PromptBase or PromptHero might be suitable. If you need to optimize existing prompts, PromptPerfect could be a good choice. If you need a powerful LLM for various tasks, consider GPT-4, PaLM 2, or Claude. For integrated development, Replit Ghostwriter is a strong option.

Q3: Are there any ethical considerations when using prompt engineering tools?

Yes, it’s crucial to be mindful of ethical implications. Avoid using LLMs to generate biased or harmful content. Be aware that models can reflect biases in their training data, and make sure the prompts you create are ethically sound. Always review the outputs carefully before deploying them in production systems.

Q4: What are the costs associated with using these tools?

Costs vary significantly. Some tools offer free plans with limitations, while others have subscription-based pricing models. The cost of using LLMs depends on usage and the provider’s pricing structure. It’s essential to review the pricing details on each tool’s website.
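Many LLM APIs bill by the number of tokens processed, so a back-of-the-envelope estimate is simple arithmetic. The sketch below assumes that billing model; the function name and every number in the usage note are hypothetical placeholders, not real prices from any provider.

```python
def estimate_monthly_cost(requests_per_day, input_tokens, output_tokens,
                          input_price_per_1k, output_price_per_1k, days=30):
    """Estimate monthly spend for token-based pricing.

    Token counts are per request; prices are per 1,000 tokens.
    """
    per_request = (
        (input_tokens / 1000) * input_price_per_1k
        + (output_tokens / 1000) * output_price_per_1k
    )
    return round(per_request * requests_per_day * days, 2)
```

For example, with made-up figures of 500 requests per day, 800 input tokens and 300 output tokens per request, and placeholder rates of 0.01 and 0.03 per 1,000 tokens, each request costs 0.017 and the monthly total is 0.017 × 500 × 30 = 255. Substitute the current rates from your provider's pricing page before relying on the result.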

Conclusion

Prompt engineering is a powerful technique that can dramatically improve the efficiency and effectiveness of tech professionals. By carefully selecting the right tool and mastering the art of crafting effective prompts, you can unlock the potential of LLMs to automate tasks, improve code quality, and enhance security. Remember to experiment with different tools and approaches to find what works best for your specific needs and always prioritize ethical considerations.

This comparison of nine prompt engineering tools provides a solid starting point for your journey. Remember to stay updated on the latest developments in this rapidly evolving field. Thank you for reading the DevopsRoles page!