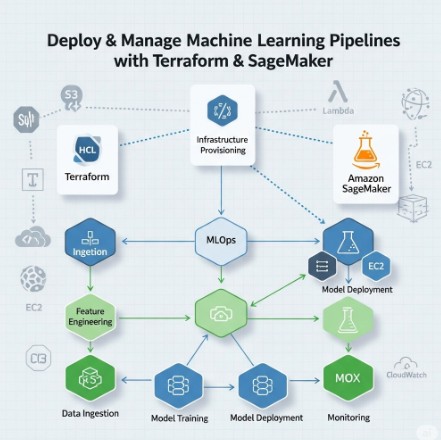

Deploying and managing machine learning (ML) pipelines efficiently and reliably is a critical challenge for organizations aiming to leverage the power of AI. The complexity of managing infrastructure, dependencies, and the iterative nature of ML model development often leads to operational bottlenecks. This article focuses on streamlining this process using Terraform and Amazon SageMaker, providing a robust and scalable solution for deploying and managing your ML workflows.

Table of Contents

- 1 Understanding the Need for Infrastructure as Code (IaC) in ML Pipelines

- 2 Leveraging Terraform for Infrastructure Management

- 3 Building and Deploying Machine Learning Pipelines with SageMaker

- 4 Managing Machine Learning Pipelines with Terraform for Scalability and Maintainability

- 5 Frequently Asked Questions

- 5.1 Q1: What are the benefits of using Terraform for managing SageMaker pipelines?

- 5.2 Q2: How do I handle secrets management when using Terraform for SageMaker?

- 5.3 Q3: Can I use Terraform to manage custom containers in SageMaker?

- 5.4 Q4: How do I handle updates and changes to my ML pipeline infrastructure?

- 6 Conclusion

Understanding the Need for Infrastructure as Code (IaC) in ML Pipelines

Traditional methods of deploying ML pipelines often involve manual configuration and provisioning of infrastructure, leading to inconsistencies, errors, and poor reproducibility. Infrastructure as Code (IaC), using tools like Terraform, addresses this by automating the provisioning and management of infrastructure resources. Defining infrastructure in code gives you version control, consistent configuration, and the ability to recreate the same environment repeatedly (for example, separate development, staging, and production copies). Terraform also supports many providers beyond AWS, although a configuration written for SageMaker remains specific to AWS. This matters especially for ML pipelines, where infrastructure needs fluctuate with the complexity of the pipeline and the volume of data being processed.
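To make the reproducibility point concrete, here is a minimal sketch of parameterizing a configuration by environment so the same code can produce separate copies. The variable name `environment`, the allowed values, the tags, and the bucket naming scheme are all hypothetical choices for illustration, not a prescribed layout.

```hcl
# Hypothetical input variable selecting which environment to deploy.
variable "environment" {
  type        = string
  description = "Deployment environment, e.g. dev, staging, or prod"
  default     = "dev"

  # Reject typos such as "prd" at plan time.
  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "The environment must be one of: dev, staging, prod."
  }
}

# Common tags applied to resources so each environment is easy to identify.
locals {
  common_tags = {
    Environment = var.environment
    ManagedBy   = "terraform"
  }
}

# Example resource whose name is derived from the environment.
# S3 bucket names are globally unique, so a real name needs a unique prefix.
resource "aws_s3_bucket" "ml_data" {
  bucket = "example-ml-data-${var.environment}"
  tags   = local.common_tags
}
```

Running `terraform apply -var="environment=staging"` against a separate state would then create a staging copy from the same code.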

Leveraging Terraform for Infrastructure Management

Terraform, a popular IaC tool, lets you define and manage infrastructure using a declarative configuration language called HashiCorp Configuration Language (HCL). You describe the desired state of your infrastructure, and Terraform creates, modifies, and deletes resources to reach that state. For ML pipelines, this means you can define all the necessary components, such as:

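- Amazon SageMaker resources (e.g., notebook instances, models, endpoint configurations, endpoints, and pipelines). Training and processing instances are provisioned by the jobs that use them rather than declared directly.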

- Amazon S3 buckets for storing data and model artifacts.

- IAM roles and policies to manage access control.

- Amazon EC2 instances for custom components (if needed).

- Networking resources such as VPCs, subnets, and security groups.
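As an example of the S3 item in the list above, the following sketch defines a bucket for data and model artifacts with versioning, default encryption, and public access blocked. It assumes AWS provider v4 or later, where these bucket settings are separate resources; the bucket name is a hypothetical placeholder.

```hcl
# Bucket for training data and model artifacts (the name is a hypothetical placeholder).
resource "aws_s3_bucket" "artifacts" {
  bucket = "example-sagemaker-artifacts-123456789012"
}

# Keep previous object versions so overwritten model artifacts can be recovered.
resource "aws_s3_bucket_versioning" "artifacts" {
  bucket = aws_s3_bucket.artifacts.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt objects at rest with SSE-KMS using the AWS managed key for S3.
resource "aws_s3_bucket_server_side_encryption_configuration" "artifacts" {
  bucket = aws_s3_bucket.artifacts.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "aws:kms"
    }
  }
}

# Block every form of public access to the bucket.
resource "aws_s3_bucket_public_access_block" "artifacts" {
  bucket                  = aws_s3_bucket.artifacts.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```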

Example Terraform Configuration for SageMaker Instance

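The following code snippet shows a basic example of creating a SageMaker notebook instance (an interactive development environment, not a training job) and the IAM role it runs under:

```hcl
# Configure the AWS provider; the region here is an example value.
provider "aws" {
  region = "us-east-1"
}

# A SageMaker notebook instance for interactive development and experimentation.
resource "aws_sagemaker_notebook_instance" "notebook" {
  name          = "my-sagemaker-notebook-instance"
  instance_type = "ml.m5.xlarge"
  role_arn      = aws_iam_role.sagemaker_role.arn
}

# IAM role that SageMaker assumes on behalf of the notebook instance.
resource "aws_iam_role" "sagemaker_role" {
  name = "SageMakerTrainingRole"

  # Trust policy: only the SageMaker service may assume this role.
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Principal = {
          Service = "sagemaker.amazonaws.com"
        }
      }
    ]
  })
}
```

This example defines a SageMaker notebook instance with a specific instance type and an associated IAM role. The role above has only a trust policy; it still needs permission policies (for example, for S3 access) before it can do useful work. A full configuration would also include the necessary S3 buckets, VPC settings, and security configurations, and more complex pipelines may require additional resources.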
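For the VPC settings mentioned above, one possible sketch places a notebook in a private subnet behind a dedicated security group. It reuses `aws_iam_role.sagemaker_role` from the example above; the variables `vpc_id` and `private_subnet_id`, the resource names, and the HTTPS-only egress rule are assumptions for illustration.

```hcl
# Hypothetical inputs: an existing VPC and a private subnet inside it.
variable "vpc_id" {
  type = string
}

variable "private_subnet_id" {
  type = string
}

# Security group for SageMaker: no inbound rules, outbound HTTPS only.
resource "aws_security_group" "sagemaker" {
  name        = "sagemaker-notebook-sg"
  description = "Security group for SageMaker resources"
  vpc_id      = var.vpc_id

  egress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# A variant of the notebook placed in a private subnet without direct internet access.
resource "aws_sagemaker_notebook_instance" "private" {
  name                   = "my-sagemaker-private-notebook"
  instance_type          = "ml.m5.xlarge"
  role_arn               = aws_iam_role.sagemaker_role.arn
  subnet_id              = var.private_subnet_id
  security_groups        = [aws_security_group.sagemaker.id]
  direct_internet_access = "Disabled"
}
```

With direct internet access disabled, the notebook reaches S3 and other AWS services only through the VPC (a NAT gateway or VPC endpoints), and the execution role needs permission to create network interfaces in that VPC.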

Building and Deploying Machine Learning Pipelines with SageMaker

Amazon SageMaker provides a managed service for building, training, and deploying ML models. By integrating SageMaker with Terraform, you can automate the entire process, from infrastructure provisioning to model deployment. SageMaker supports various pipeline components, including:

- Processing jobs for data preprocessing and feature engineering.

- Training jobs for model training.

- Model building and evaluation.

- Model deployment and endpoint creation.
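The deployment items in this list map directly onto Terraform resources, while short-lived processing and training jobs are usually launched from SageMaker Pipelines or the SageMaker Python SDK instead. The sketch below wires a trained artifact into a real-time endpoint using `aws_sagemaker_model`, `aws_sagemaker_endpoint_configuration`, and `aws_sagemaker_endpoint`. It reuses `aws_iam_role.sagemaker_role` from the earlier example; the variable names, resource names, and instance type are hypothetical.

```hcl
# Hypothetical inputs: an inference container image and a trained model archive in S3.
variable "inference_image_uri" {
  type        = string
  description = "ECR URI of the inference container image"
}

variable "model_data_url" {
  type        = string
  description = "S3 URI of the model.tar.gz produced by a training job"
}

# Register the trained artifact as a SageMaker model.
resource "aws_sagemaker_model" "this" {
  name               = "example-model"
  execution_role_arn = aws_iam_role.sagemaker_role.arn

  primary_container {
    image          = var.inference_image_uri
    model_data_url = var.model_data_url
  }
}

# Describe how the model is hosted: one variant on a single instance.
resource "aws_sagemaker_endpoint_configuration" "this" {
  name = "example-endpoint-config"

  production_variants {
    variant_name           = "AllTraffic"
    model_name             = aws_sagemaker_model.this.name
    initial_instance_count = 1
    instance_type          = "ml.m5.large"
  }
}

# Create the real-time inference endpoint from that configuration.
resource "aws_sagemaker_endpoint" "this" {
  name                 = "example-endpoint"
  endpoint_config_name = aws_sagemaker_endpoint_configuration.this.name
}
```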

Integrating SageMaker Pipelines with Terraform

You can manage SageMaker pipelines with Terraform through the AWS provider's `aws_sagemaker_pipeline` resource, which registers a pipeline from a JSON definition, together with resources for the supporting services. The pipeline definition describes the steps, their dependencies, and the compute resources (instance types and counts) each step uses.
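A minimal sketch of registering a pipeline follows. It assumes the JSON definition has been exported beforehand, for example by writing the output of the SageMaker Python SDK's `Pipeline.definition()` to a file; the file name `pipeline.json` and the pipeline names are hypothetical, and the role is `aws_iam_role.sagemaker_role` from the earlier example.

```hcl
# Register (or update) a SageMaker pipeline from an exported JSON definition.
resource "aws_sagemaker_pipeline" "ml" {
  pipeline_name         = "example-ml-pipeline"
  pipeline_display_name = "example-ml-pipeline"
  role_arn              = aws_iam_role.sagemaker_role.arn

  # Hypothetical file containing the steps, their dependencies, and per-step compute.
  pipeline_definition = file("${path.module}/pipeline.json")
}
```

Terraform only creates or updates the pipeline definition; executions are started separately, for example from the SDK, the console, or a scheduled trigger.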

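Remember that two sets of permissions are involved: the credentials Terraform runs with need permission to create these resources (including `iam:PassRole` for any role handed to SageMaker), and the SageMaker execution role needs permission to reach S3, Amazon ECR, CloudWatch, and other services at runtime.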
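On the execution-role side, one possible sketch attaches the AWS managed `AmazonSageMakerFullAccess` policy to `aws_iam_role.sagemaker_role` from the earlier example and adds an inline policy scoped to a single artifact bucket. The variable `artifact_bucket_name` and the policy name are hypothetical; the managed policy is broad, so treat it as a starting point rather than a least-privilege setup.

```hcl
# Attach the AWS managed SageMaker policy to the execution role.
# It is broad; production setups usually replace it with narrower custom policies.
resource "aws_iam_role_policy_attachment" "sagemaker_full_access" {
  role       = aws_iam_role.sagemaker_role.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSageMakerFullAccess"
}

# Hypothetical name of the bucket holding data and model artifacts.
variable "artifact_bucket_name" {
  type = string
}

# Inline policy limiting S3 access to that one bucket.
resource "aws_iam_role_policy" "artifact_bucket_access" {
  name = "sagemaker-artifact-bucket-access"
  role = aws_iam_role.sagemaker_role.name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        # Listing applies to the bucket itself.
        Effect   = "Allow"
        Action   = ["s3:ListBucket"]
        Resource = "arn:aws:s3:::${var.artifact_bucket_name}"
      },
      {
        # Reads and writes apply to objects inside the bucket.
        Effect   = "Allow"
        Action   = ["s3:GetObject", "s3:PutObject"]
        Resource = "arn:aws:s3:::${var.artifact_bucket_name}/*"
      }
    ]
  })
}
```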

Managing Machine Learning Pipelines with Terraform for Scalability and Maintainability

One of the key advantages of managing ML pipelines with Terraform is the improved scalability and maintainability of your ML infrastructure. You can scale up or down by changing instance types or counts in the configuration and applying the change, or by declaring autoscaling policies, which keeps resource utilization in line with demand. Keeping your Terraform configuration under version control also provides a history of changes, so you can revert to a previous configuration and re-apply it if necessary. This facilitates collaboration among team members working on the ML pipeline.
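For the autoscaling option, here is a sketch that registers an endpoint variant with Application Auto Scaling and adds a target-tracking policy on the predefined `SageMakerVariantInvocationsPerInstance` metric. The variables, the 1 to 4 instance range, and the target value of 100 are hypothetical example values to tune for your own traffic.

```hcl
# Hypothetical inputs identifying an existing endpoint and its production variant.
variable "endpoint_name" {
  type = string
}

variable "variant_name" {
  type    = string
  default = "AllTraffic"
}

# Register the variant's instance count as a scalable target (1 to 4 instances).
resource "aws_appautoscaling_target" "endpoint" {
  service_namespace  = "sagemaker"
  resource_id        = "endpoint/${var.endpoint_name}/variant/${var.variant_name}"
  scalable_dimension = "sagemaker:variant:DesiredInstanceCount"
  min_capacity       = 1
  max_capacity       = 4
}

# Add or remove instances to keep invocations per instance near the target value.
resource "aws_appautoscaling_policy" "endpoint" {
  name               = "sagemaker-invocations-target-tracking"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.endpoint.service_namespace
  resource_id        = aws_appautoscaling_target.endpoint.resource_id
  scalable_dimension = aws_appautoscaling_target.endpoint.scalable_dimension

  target_tracking_scaling_policy_configuration {
    target_value = 100

    predefined_metric_specification {
      predefined_metric_type = "SageMakerVariantInvocationsPerInstance"
    }
  }
}
```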

Monitoring and Logging

Comprehensive monitoring and logging are crucial for maintaining a robust ML pipeline. Integrate monitoring tools such as CloudWatch to track the performance of your SageMaker instances, pipelines, and other infrastructure components. This allows you to identify and address issues proactively.
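As one example of CloudWatch monitoring managed in the same configuration, the sketch below raises an alarm when an endpoint returns 5xx errors in three consecutive five-minute periods. The variables `endpoint_name` and `alarm_sns_topic_arn`, the variant name, and the thresholds are hypothetical.

```hcl
# Hypothetical inputs: the endpoint to watch and an SNS topic for notifications.
variable "endpoint_name" {
  type = string
}

variable "alarm_sns_topic_arn" {
  type = string
}

# Alarm when any 5xx errors occur in each of three consecutive 5-minute periods.
resource "aws_cloudwatch_metric_alarm" "endpoint_5xx" {
  alarm_name          = "${var.endpoint_name}-invocation-5xx"
  namespace           = "AWS/SageMaker"
  metric_name         = "Invocation5XXErrors"
  statistic           = "Sum"
  period              = 300
  evaluation_periods  = 3
  threshold           = 0
  comparison_operator = "GreaterThanThreshold"
  treat_missing_data  = "notBreaching"

  dimensions = {
    EndpointName = var.endpoint_name
    VariantName  = "AllTraffic"
  }

  # Notify the SNS topic when the alarm fires.
  alarm_actions = [var.alarm_sns_topic_arn]
}
```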

Frequently Asked Questions

Q1: What are the benefits of using Terraform for managing SageMaker pipelines?

Using Terraform for managing SageMaker pipelines offers several advantages: Infrastructure as Code (IaC) enables automation, reproducibility, version control, and improved scalability and maintainability. It simplifies the complex task of managing the infrastructure required for machine learning workflows.

Q2: How do I handle secrets management when using Terraform for SageMaker?

For secrets such as API tokens or database credentials, use tools like AWS Secrets Manager or HashiCorp Vault (SageMaker's own access to AWS should come from IAM roles rather than stored access keys). These tools let you store and retrieve secrets without hardcoding them in your Terraform configuration files. Keep in mind that any secret value Terraform reads, for example through a data source, is written to the state file, so protect the state or let your workloads fetch secrets at runtime instead.
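One way to follow the runtime-fetch approach is sketched below: Terraform looks up only the secret's metadata and grants `aws_iam_role.sagemaker_role` (from the earlier example) permission to read that single secret, so the value itself never enters Terraform state. The secret name and policy name are hypothetical.

```hcl
# Look up an existing secret by name (metadata only; the value is not read).
data "aws_secretsmanager_secret" "api_token" {
  name = "example/ml-pipeline/api-token" # hypothetical secret name
}

# Allow the SageMaker execution role to read that one secret at runtime.
resource "aws_iam_role_policy" "read_api_token" {
  name = "sagemaker-read-api-token"
  role = aws_iam_role.sagemaker_role.name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = ["secretsmanager:GetSecretValue"]
        Resource = data.aws_secretsmanager_secret.api_token.arn
      }
    ]
  })
}

# Expose only the ARN so container code can fetch the value itself.
output "api_token_secret_arn" {
  value = data.aws_secretsmanager_secret.api_token.arn
}
```

If the secret is encrypted with a customer managed KMS key, the role also needs `kms:Decrypt` on that key.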

Q3: Can I use Terraform to manage custom containers in SageMaker?

Yes, you can use Terraform to manage custom containers in SageMaker. You would define the necessary ECR repositories to store your custom container images and then reference them in your SageMaker training or deployment configurations managed by Terraform. This allows you to integrate your custom algorithms and dependencies seamlessly into your automated pipeline.
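A minimal sketch of that pattern: an ECR repository plus a SageMaker model whose container image points at it. It reuses `aws_iam_role.sagemaker_role` from the earlier example; the repository name, `image_tag`, `custom_model_data_url`, and model name are hypothetical.

```hcl
# ECR repository that holds the custom container image.
resource "aws_ecr_repository" "custom" {
  name                 = "example-custom-ml-image" # hypothetical name
  image_tag_mutability = "IMMUTABLE"

  # Scan each pushed image for known vulnerabilities.
  image_scanning_configuration {
    scan_on_push = true
  }
}

# Hypothetical image tag and model artifact location.
variable "image_tag" {
  type    = string
  default = "v1"
}

variable "custom_model_data_url" {
  type = string
}

# SageMaker model that runs the custom image from the repository above.
resource "aws_sagemaker_model" "custom" {
  name               = "example-custom-model"
  execution_role_arn = aws_iam_role.sagemaker_role.arn

  primary_container {
    image          = "${aws_ecr_repository.custom.repository_url}:${var.image_tag}"
    model_data_url = var.custom_model_data_url
  }
}
```

Terraform creates the repository, but building and pushing the image usually happens outside it (for example, in CI), and the image must be pushed before SageMaker can use the model.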

Q4: How do I handle updates and changes to my ML pipeline infrastructure?

Use Terraform’s `plan` and `apply` commands to preview and apply changes to your infrastructure. Terraform’s state management ensures that only necessary changes are applied, minimizing disruptions. Version control your Terraform code to track changes and easily revert if needed. Remember to test changes thoroughly in a non-production environment before deploying to production.
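Because `plan` and `apply` depend on the state file, teams typically keep that state in a shared remote backend with locking. A sketch using the S3 backend follows; the bucket, key, region, and table names are hypothetical placeholders.

```hcl
# Store state remotely so the whole team plans against the same state file.
terraform {
  backend "s3" {
    bucket         = "example-terraform-state-bucket"
    key            = "ml-pipeline/prod/terraform.tfstate"
    region         = "us-east-1"
    encrypt        = true                      # encrypt the state object at rest
    dynamodb_table = "example-terraform-locks" # DynamoDB-based state locking
  }
}
```

Recent Terraform releases also offer S3-native locking (`use_lockfile = true`) and mark `dynamodb_table` as deprecated, so check the backend documentation for your version. A common flow is `terraform plan -out=tfplan` to save the reviewed plan, then `terraform apply tfplan` to apply exactly that plan.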

Conclusion

Deploying and managing machine learning pipelines with Terraform and SageMaker provides a powerful and efficient approach to building and deploying scalable ML workflows. By applying IaC principles and the capabilities of Terraform, organizations can overcome the challenges of managing complex infrastructure and improve the reproducibility and reliability of their ML pipelines. Remember to prioritize security best practices, including well-scoped IAM roles and proper secret management, when implementing this solution. Consistent use of Terraform for ML pipelines supports efficient and reliable ML operations. Thank you for reading the DevopsRoles page!

For further information, refer to the official Terraform and AWS SageMaker documentation:

Terraform Documentation

AWS SageMaker Documentation

AWS Provider for Terraform