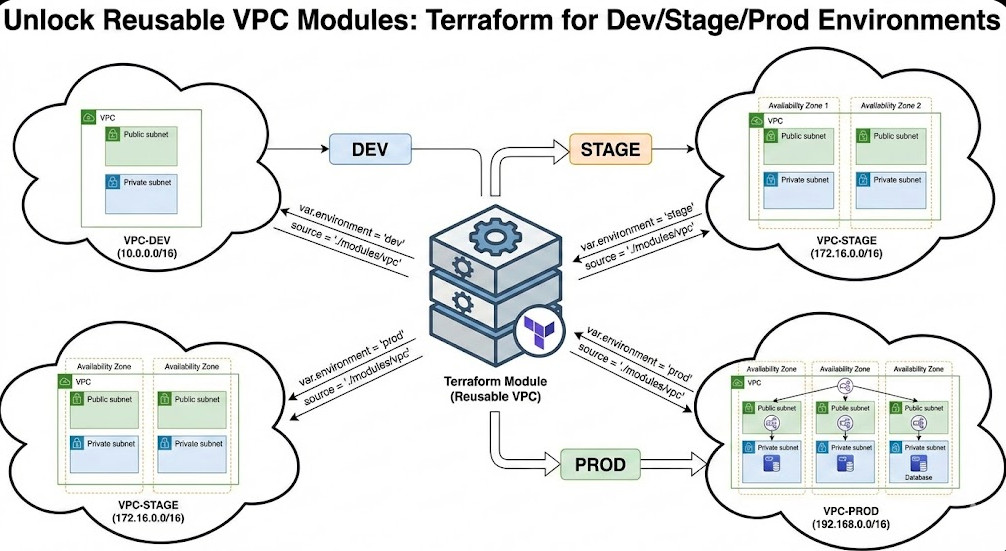

If you manage infrastructure at scale, you have likely felt the pain of copy-paste sprawl. You define a VPC for Development, copy the code for Staging, and copy it again for Production, perhaps changing a CIDR block or an instance count by hand. This violates the DRY (Don’t Repeat Yourself) principle and introduces drift risk.

For Senior DevOps Engineers and SREs, the goal isn’t just to write code that works; it’s to architect abstractions that scale. Reusable VPC Modules are the cornerstone of a mature Infrastructure as Code (IaC) strategy. They allow you to define the “Gold Standard” for networking once and instantiate it infinitely across environments with predictable results.

In this guide, we will move beyond basic syntax. We will construct a production-grade, environment-agnostic VPC module capable of dynamic subnet calculation, conditional resource creation (like NAT Gateways), and strict variable validation suitable for high-compliance Dev, Stage, and Prod environments.

Why Reusable VPC Modules Matter (Beyond DRY)

While reducing code duplication is the obvious benefit, the strategic value of modularizing your VPC architecture runs deeper.

- Governance & Compliance: By centralizing your network logic, you enforce security standards (e.g., “Flow Logs must always be enabled” or “Private subnets must not have public IP assignment”) in a single location.

- Testing & Versioning: You can version your module (e.g., v1.2.0). Production can remain pinned to a stable version while you iterate on features in Development, effectively applying software engineering lifecycles to your network.

- Abstracting Complexity: A consumer of your module (perhaps a developer spinning up an ephemeral environment) shouldn’t need to understand Route Tables or NACLs. They should only need to provide a CIDR block and an Environment name.
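As an illustration of version pinning, the sketch below assumes the module is published in a Git repository and tagged with semantic versions. The repository URL and the tag are hypothetical placeholders, not part of this guide's setup.

```hcl
# environments/prod/main.tf
# Assumption: the module lives in a Git repository (hypothetical URL)
# and a release has been tagged v1.2.0.
module "vpc" {
  # The "//modules/vpc" part selects a subdirectory; "?ref=" pins the tag.
  source = "git::https://example.com/your-org/terraform-modules.git//modules/vpc?ref=v1.2.0"

  environment = "prod"
  vpc_cidr    = "10.0.0.0/16"
  az_count    = 3
}

# environments/dev/main.tf could point ?ref= at a newer tag or a branch
# while a change is still being tested, leaving prod untouched.
```

After changing the ref, terraform init -upgrade is needed so that Terraform fetches the newly selected revision.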

Pro-Tip: Avoid the “God Module” anti-pattern. While it’s tempting to bundle the VPC, EKS, and RDS into one giant module, this leads to dependency hell. Keep your Reusable VPC Modules strictly focused on networking primitives: VPC, Subnets, Route Tables, Gateways, and ACLs.

Anatomy of a Production-Grade Module

Let’s build a module that calculates subnets dynamically based on Availability Zones (AZs) and handles environment-specific logic (like high availability in Prod vs. cost savings in Dev).

1. Input Strategy & Validation

Modern Terraform (v1.0+) allows for powerful variable validation. We want to ensure that downstream users don’t accidentally pass invalid CIDR blocks.
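Validation is not limited to string formats. As a rough sketch, the same mechanism could bound a numeric input such as the az_count variable that appears in the module's variables.tf. The upper limit of 3 is an assumption chosen for illustration; pick whatever your target regions actually support.

```hcl
# Hypothetical stricter variant of the az_count variable.
# Assumption: the module should accept between 1 and 3 Availability Zones.
variable "az_count" {
  description = "Number of AZs to utilize"
  type        = number
  default     = 2

  validation {
    # floor() rejects fractional values such as 2.5, which would
    # otherwise pass a simple range check.
    condition     = var.az_count >= 1 && var.az_count <= 3 && floor(var.az_count) == var.az_count
    error_message = "The az_count value must be a whole number between 1 and 3."
  }
}
```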

# modules/vpc/variables.tf

variable "environment" {
  description = "Deployment environment (dev, stage, prod)"
  type        = string

  validation {
    condition     = contains(["dev", "stage", "prod"], var.environment)
    error_message = "Environment must be one of: dev, stage, prod."
  }
}

variable "vpc_cidr" {
  description = "CIDR block for the VPC"
  type        = string

  validation {
    condition     = can(cidrhost(var.vpc_cidr, 0))
    error_message = "Must be a valid IPv4 CIDR block."
  }
}

variable "az_count" {
  description = "Number of AZs to utilize"
  type        = number
  default     = 2
}

2. Dynamic Subnetting with cidrsubnet

Hardcoding subnet CIDRs (e.g., 10.0.1.0/24) is brittle. Instead, use the cidrsubnet function to carve up the VPC CIDR mathematically. Each subnet receives a distinct network number, so ranges derived from the same base cannot overlap, and they are recalculated automatically if you change the base CIDR.
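To make the arithmetic concrete, here is a small standalone sketch of what cidrsubnet returns for a /16 base with 8 additional prefix bits. The local names are hypothetical and exist only for this illustration; the offset for the private range mirrors the approach used in the module code.

```hcl
# Standalone illustration; all local names are hypothetical.
locals {
  example_vpc_cidr = "10.0.0.0/16"
  example_az_count = 2

  # 8 new bits on a /16 yields /24 subnets; the third argument is the
  # network number (which /24 inside the /16).
  example_public = [
    for i in range(local.example_az_count) :
    cidrsubnet(local.example_vpc_cidr, 8, i)
  ]

  # Offsetting the network number by the AZ count keeps the private
  # subnets clear of the public ones.
  example_private = [
    for i in range(local.example_az_count) :
    cidrsubnet(local.example_vpc_cidr, 8, i + local.example_az_count)
  ]
}

output "example_public" {
  value = local.example_public # ["10.0.0.0/24", "10.0.1.0/24"]
}

output "example_private" {
  value = local.example_private # ["10.0.2.0/24", "10.0.3.0/24"]
}
```

Note that the first subnet is 10.0.0.0/24, because network numbers start at 0. The same expressions can be evaluated interactively with terraform console.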

# modules/vpc/main.tf

data "aws_availability_zones" "available" {
  state = "available"
}

resource "aws_vpc" "main" {
  cidr_block           = var.vpc_cidr
  enable_dns_hostnames = true
  enable_dns_support   = true

  tags = {
    Name = "${var.environment}-vpc"
  }
}

# Public Subnets
resource "aws_subnet" "public" {
  count  = var.az_count
  vpc_id = aws_vpc.main.id

  # Example with a /16 VPC and 8 new bits:
  # index 0 -> 10.0.0.0/24, index 1 -> 10.0.1.0/24, etc.
  cidr_block              = cidrsubnet(var.vpc_cidr, 8, count.index)
  availability_zone       = data.aws_availability_zones.available.names[count.index]
  map_public_ip_on_launch = true

  tags = {
    Name = "${var.environment}-public-${data.aws_availability_zones.available.names[count.index]}"
    Tier = "Public"
  }
}

# Private Subnets
resource "aws_subnet" "private" {
  count  = var.az_count
  vpc_id = aws_vpc.main.id

  # Offset the CIDR calculation by 'az_count' to avoid overlap with public subnets
  cidr_block        = cidrsubnet(var.vpc_cidr, 8, count.index + var.az_count)
  availability_zone = data.aws_availability_zones.available.names[count.index]

  tags = {
    Name = "${var.environment}-private-${data.aws_availability_zones.available.names[count.index]}"
    Tier = "Private"
  }
}

3. Conditional NAT Gateways (Cost Optimization)

NAT Gateways are expensive. In a Dev environment, you might only need one shared NAT Gateway (or none if you use instances with public IPs for testing), whereas Prod requires High Availability (one NAT per AZ).
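The "or none" case is not covered by the module code in this section, which always creates at least one gateway. A minimal sketch of how a toggle could be layered on top, assuming a hypothetical enable_nat_gateway variable and a hypothetical local name (neither is part of the module as written):

```hcl
# Hypothetical toggle; not part of the module as written.
variable "enable_nat_gateway" {
  description = "Set to false to skip NAT Gateways entirely (e.g. in a throwaway dev VPC)"
  type        = bool
  default     = true
}

locals {
  # 0 when disabled; otherwise one per AZ in prod, a single shared one elsewhere.
  nat_gateway_count_with_toggle = (
    var.enable_nat_gateway
    ? (var.environment == "prod" ? var.az_count : 1)
    : 0
  )
}
```

With such a toggle, the EIP and NAT Gateway resources would use this local as their count, and any route that references a gateway would have to tolerate an empty list.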

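# modules/vpc/main.tf

locals {
  # If Prod, create NAT per AZ. If Dev/Stage, create only 1 NAT total to save costs.
  nat_gateway_count = var.environment == "prod" ? var.az_count : 1
}

resource "aws_eip" "nat" {
  count = local.nat_gateway_count

  # The 'domain' argument requires AWS provider v5+; older versions use 'vpc = true'.
  domain = "vpc"
}

# Note: a public NAT Gateway only works if the VPC has an Internet Gateway
# and the public subnets route to it. Those resources, and the private
# route tables that point at the NAT Gateways, are not shown here.
resource "aws_nat_gateway" "main" {
  count         = local.nat_gateway_count
  allocation_id = aws_eip.nat[count.index].id
  subnet_id     = aws_subnet.public[count.index].id

  tags = {
    Name = "${var.environment}-nat-${count.index}"
  }
}

Implementing Across Environments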

Once your Reusable VPC Module is polished, utilizing it across environments becomes a trivial exercise in configuration management. I recommend a directory-based structure over Terraform Workspaces for clearer isolation of state files and variable definitions.

Directory Structure

infrastructure/
├── modules/
│   └── vpc/   (The code we wrote above)
└── environments/
    ├── dev/
    │   └── main.tf
    ├── stage/
    │   └── main.tf
    └── prod/
        └── main.tf
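One way this layout isolates state is to give each environment directory its own backend configuration. The sketch below assumes an S3 backend; the file name, bucket name, and region are hypothetical placeholders.

```hcl
# environments/prod/backend.tf (hypothetical file)
terraform {
  backend "s3" {
    bucket = "example-terraform-state"    # hypothetical bucket name
    key    = "prod/vpc/terraform.tfstate" # one key per environment
    region = "us-east-1"                  # assumption
  }
}
```

The dev and stage directories would carry the same block with a different key (or a different bucket), so a plan or apply in one directory cannot touch another environment's state.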

The Implementation (DRY at work)

In environments/prod/main.tf, your code is now incredibly concise:

module "vpc" {
  source = "../../modules/vpc"

  environment = "prod"
  vpc_cidr    = "10.0.0.0/16"
  az_count    = 3 # High Availability
}

Contrast this with environments/dev/main.tf:

module "vpc" {
  source = "../../modules/vpc"

  environment = "dev"
  vpc_cidr    = "10.10.0.0/16" # Different CIDR
  az_count    = 2              # Lower cost
}

Advanced Patterns & Considerations

Tagging Standards

Effective tagging is non-negotiable for cost allocation and resource tracking. Use the default_tags feature in the AWS provider configuration to apply global tags, but ensure your module accepts a tags map variable to merge specific metadata.
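A sketch of how the two layers could fit together, assuming a hypothetical tags variable on the module. The region and tag values are placeholders, and the aws_vpc resource shown is a revised version of the one in the module code, not an additional resource.

```hcl
# Root configuration, e.g. environments/prod/main.tf:
# global tags applied by the provider to every taggable resource.
provider "aws" {
  region = "us-east-1" # assumption

  default_tags {
    tags = {
      ManagedBy   = "terraform"
      Environment = "prod"
    }
  }
}

# modules/vpc/variables.tf: hypothetical extra input.
variable "tags" {
  description = "Additional tags to merge onto every resource in the module"
  type        = map(string)
  default     = {}
}

# modules/vpc/main.tf: revised VPC resource.
resource "aws_vpc" "main" {
  cidr_block           = var.vpc_cidr
  enable_dns_hostnames = true
  enable_dns_support   = true

  # Later arguments to merge() win, so the module's Name tag cannot be
  # overridden by a caller-supplied "Name" key.
  tags = merge(var.tags, {
    Name = "${var.environment}-vpc"
  })
}
```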

Outputting Values for Dependency Injection

Your VPC module is likely the foundation for other modules (like EKS or RDS). Ensure you output the IDs required by these dependent resources.
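On the consuming side, a root configuration reads these values as module.vpc.<output_name>. A minimal sketch, assuming the vpc_id and private_subnet_ids outputs defined in this section's outputs.tf; the two downstream resources and their names are hypothetical examples.

```hcl
# environments/prod/main.tf (alongside the module "vpc" block)

# Hypothetical database subnet group placed in the private subnets.
resource "aws_db_subnet_group" "example" {
  name       = "prod-db"
  subnet_ids = module.vpc.private_subnet_ids
}

# Hypothetical security group attached to the VPC created by the module.
resource "aws_security_group" "example" {
  name   = "prod-app"
  vpc_id = module.vpc.vpc_id
}
```

Because these arguments reference module outputs, Terraform orders the VPC before the dependent resources without any explicit depends_on.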

# modules/vpc/outputs.tf

output "vpc_id" {
  value = aws_vpc.main.id
}

output "private_subnet_ids" {
  value = aws_subnet.private[*].id
}

output "public_subnet_ids" {
  value = aws_subnet.public[*].id
}

Frequently Asked Questions (FAQ)

Should I use the community terraform-aws-modules/vpc/aws module or build my own?

For beginners or rapid prototyping, the community module is excellent. However, for Expert SRE teams, building your own Reusable VPC Module is often preferred. It reduces “bloat” (unused features from the community module) and allows strict adherence to internal naming conventions and security compliance logic that a generic module cannot provide.

How do I handle VPC Peering between these environments?

Generally, you should avoid peering Dev and Prod. However, if you need shared services (like a tooling VPC), create a separate vpc-peering module. Do not bake peering logic into the core VPC module: it couples each VPC to its peers, which invites circular dependencies and makes the module difficult to destroy.
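A separate peering module can stay very small. The sketch below assumes both VPCs live in the same account and region, which is what allows auto_accept; the file path, variable names, and output name are hypothetical.

```hcl
# modules/vpc-peering/main.tf (hypothetical module)

variable "requester_vpc_id" {
  description = "ID of the VPC that initiates the peering"
  type        = string
}

variable "accepter_vpc_id" {
  description = "ID of the VPC that accepts the peering"
  type        = string
}

variable "name" {
  description = "Name tag for the peering connection"
  type        = string
}

resource "aws_vpc_peering_connection" "this" {
  vpc_id      = var.requester_vpc_id
  peer_vpc_id = var.accepter_vpc_id

  # Assumption: same account and same region. Cross-account or
  # cross-region peering needs a separate accepter resource instead.
  auto_accept = true

  tags = {
    Name = var.name
  }
}

output "peering_connection_id" {
  value = aws_vpc_peering_connection.this.id
}
```

The root configuration would pass in the vpc_id outputs of two VPC module instances. Routes through the peering connection still have to be added to each side's route tables.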

What about VPC Flow Logs?

Flow Logs should be a standard part of your reusable module. I recommend adding a variable enable_flow_logs (defaulting to true) and storing logs in S3 or CloudWatch Logs. This ensures that every environment spun up with your module has auditing enabled by default.
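As a rough sketch of that toggle, assuming logs are delivered to an existing S3 bucket whose ARN is passed in through a hypothetical flow_log_bucket_arn variable:

```hcl
# modules/vpc/variables.tf: hypothetical additions.
variable "enable_flow_logs" {
  description = "Whether to enable VPC Flow Logs"
  type        = bool
  default     = true
}

variable "flow_log_bucket_arn" {
  description = "ARN of an existing S3 bucket that receives the flow logs"
  type        = string
  default     = null
}

# modules/vpc/main.tf: created only when the toggle is on.
resource "aws_flow_log" "vpc" {
  count = var.enable_flow_logs ? 1 : 0

  vpc_id               = aws_vpc.main.id
  traffic_type         = "ALL" # capture accepted and rejected traffic
  log_destination_type = "s3"
  log_destination      = var.flow_log_bucket_arn
}
```

With the default of true, a caller must supply the bucket ARN. Delivering to CloudWatch Logs instead would additionally require a log group and an IAM role, which are omitted here.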

Conclusion

Transitioning to Reusable VPC Modules transforms your infrastructure from a collection of static scripts into a dynamic, versioned product. By abstracting the complexity of subnet math and resource allocation, you empower your team to deploy Dev, Stage, and Prod environments that are consistent, compliant, and cost-optimized.

Start refactoring your hardcoded network configurations today. Isolate your logic into a module, version it, and watch your drift disappear. Thank you for reading the DevopsRoles page!