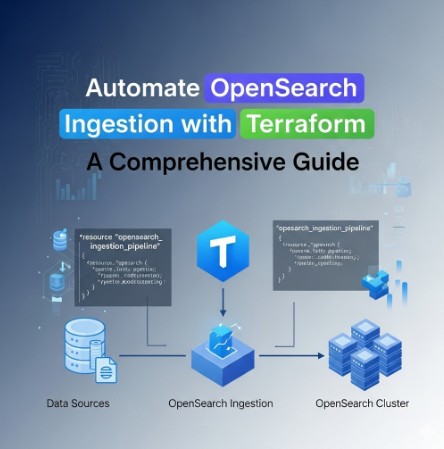

Managing the ingestion pipeline for OpenSearch can be a complex and time-consuming task. Manually configuring and maintaining this infrastructure is prone to errors and inconsistencies. This article addresses the problem with a detailed guide to automating OpenSearch ingestion with Terraform, which makes deployments repeatable and reduces the risk of human error. We will explore how Terraform simplifies the deployment and management of your data ingestion infrastructure.

Table of Contents

- 1 Understanding the Need for Automation in OpenSearch Ingestion

- 2 Setting up Your OpenSearch Environment with Terraform

- 3 OpenSearch Ingestion Terraform: Automating the Pipeline

- 4 Advanced Techniques for OpenSearch Ingestion Terraform

- 5 Frequently Asked Questions

- 5.1 Q1: How do I handle sensitive information like passwords in my Terraform configuration?

- 5.2 Q2: What are the benefits of using Terraform for OpenSearch Ingestion?

- 5.3 Q3: Can I use Terraform to manage multiple OpenSearch clusters and ingestion pipelines?

- 5.4 Q4: How do I troubleshoot issues with my OpenSearch Ingestion Terraform configuration?

- 6 Conclusion

Understanding the Need for Automation in OpenSearch Ingestion

OpenSearch, a powerful open-source search and analytics suite, relies heavily on efficient data ingestion. The process of getting data into OpenSearch involves several steps, including data extraction, transformation, and loading (ETL). Manually managing these steps across multiple environments (development, staging, production) can quickly become unmanageable, especially as the volume and complexity of data grow. This is where infrastructure-as-code (IaC) tools like Terraform come in. Using Terraform for OpenSearch Ingestion allows for consistent, repeatable, and automated deployments, reducing operational overhead and improving overall reliability.

Setting up Your OpenSearch Environment with Terraform

Before we delve into automating the ingestion pipeline, it’s crucial to have a functional OpenSearch cluster deployed using Terraform. This involves defining the cluster’s resources, including nodes, domains, and security groups. The following code snippet shows a basic example of creating an OpenSearch domain using the official AWS provider for Terraform:

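```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
  }
}

provider "aws" {
  region = "us-west-2"
}

resource "aws_opensearch_domain" "example" {
  domain_name = "my-opensearch-domain"

  # Amazon OpenSearch Service expects the "OpenSearch_X.Y" format.
  engine_version = "OpenSearch_2.5"

  cluster_config {
    instance_type  = "t3.medium.search"
    instance_count = 3
  }

  # T3 instances have no instance storage, so an EBS volume is required.
  ebs_options {
    ebs_enabled = true
    volume_size = 10
  }

  # WARNING: this policy lets any AWS principal call any es:* action.
  # Restrict the principal before using it outside a sandbox.
  access_policies = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": "es:*",
      "Resource": "arn:aws:es:us-west-2:123456789012:domain/my-opensearch-domain/*"
    }
  ]
}
EOF
}
```

This is a simplified example. You’ll need to adjust it based on your specific requirements, including choosing the appropriate instance type, number of nodes, and security configurations. In particular, the access policy above grants every AWS principal full access to the domain and should be narrowed before real use. Remember to consult the official AWS Terraform provider documentation for the most up-to-date information and options.

OpenSearch Ingestion Terraform: Automating the Pipeline

With your OpenSearch cluster successfully deployed, we can now focus on automating the ingestion pipeline using Terraform. This typically involves configuring and managing components such as Apache Kafka, Logstash, and potentially other ETL tools. The approach depends on your chosen ingestion method. For this example, let’s consider using Logstash to ingest data from a local file and forward it to OpenSearch.

Configuring Logstash with Terraform

We can use a null_resource with a local-exec provisioner to write the Logstash configuration file. This lets us manage the Logstash configuration as part of our infrastructure definition. The approach assumes that Logstash is already installed on the machine where Terraform runs; a dedicated Logstash server managed through Terraform would need a remote provisioner or instance user data instead. The configuration is rendered with templatefile so that the domain endpoint can be injected into it.

```hcl
resource "null_resource" "logstash_config" {
  provisioner "local-exec" {
    # Pass the rendered config via an environment variable so quotes in the
    # file cannot break the shell command.
    command = "printf '%s\\n' \"$LOGSTASH_CONFIG\" | sudo tee /etc/logstash/conf.d/myconfig.conf > /dev/null"
    environment = {
      LOGSTASH_CONFIG = templatefile("./logstash_config.conf", {
        opensearch_endpoint = aws_opensearch_domain.example.endpoint
      })
    }
  }

  depends_on = [aws_opensearch_domain.example]
}
```

The ./logstash_config.conf file contains the actual Logstash configuration, written as a Terraform template. Because templatefile treats `${...}` and `%{...}` as template syntax, any literal Logstash reference has to be escaped as `$${...}` or `%%{...}`. The domain resource does not export the master user password, so the example leaves the password to Logstash, which reads it from an environment variable (named OPENSEARCH_PASSWORD here). An example configuration to read data from a file named my_data.json and index it into OpenSearch would be:

```
input {
  file {
    path           => "/path/to/my_data.json"
    start_position => "beginning"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  opensearch {
    # The endpoint attribute is a bare hostname; Terraform fills it in.
    hosts    => ["https://${opensearch_endpoint}:443"]
    index    => "my-index"
    user     => "admin"
    # The doubled dollar sign escapes the reference for templatefile.
    # Logstash resolves OPENSEARCH_PASSWORD from its environment at startup.
    password => "$${OPENSEARCH_PASSWORD}"
  }
}
```

Managing Dependencies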

It’s crucial to define dependencies correctly within your Terraform configuration. In the example above, the null_resource depends on the OpenSearch domain being created. This ensures that Logstash attempts to connect to the OpenSearch cluster only after it’s fully operational. Failing to manage dependencies correctly can lead to errors during deployment.
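As a rough illustration of the alternative to an explicit depends_on, the sketch below relies on an implicit dependency: referencing an attribute of the domain is enough for Terraform to order the resources. It assumes the domain is declared as `aws_opensearch_domain.example` (adjust the address to match your own configuration) and that Logstash runs under systemd on the local machine; the resource name `logstash_restart` is made up for this example.

```hcl
resource "null_resource" "logstash_restart" {
  # Referencing the endpoint creates an implicit dependency on the domain.
  # When either value changes, the resource is replaced and the provisioner
  # runs again.
  triggers = {
    opensearch_endpoint = aws_opensearch_domain.example.endpoint
    config_hash         = filesha256("./logstash_config.conf")
  }

  provisioner "local-exec" {
    # Assumption: Logstash is installed as a systemd service on this host.
    command = "sudo systemctl restart logstash"
  }
}
```

Provisioners run only when a resource is created, so the triggers map is what makes a changed configuration file or endpoint take effect on the next apply.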

Advanced Techniques for OpenSearch Ingestion Terraform

For more complex scenarios, you might need to leverage more sophisticated techniques:

- Using a dedicated Logstash instance: Instead of running Logstash on the machine executing Terraform, manage a dedicated Logstash instance using Terraform, providing better scalability and isolation.

- Integrating with other ETL tools: Extend your pipeline to include other ETL tools like Apache Kafka or Apache Flume, managing their configurations and deployments using Terraform.

- Implementing security best practices: Use IAM roles to restrict access to OpenSearch, encrypt data in transit and at rest, and follow other security measures to protect your data.

- Using a CI/CD pipeline: Integrate your Terraform code into a CI/CD pipeline for automated testing and deployment.
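The security bullet above could look roughly like the following sketch, which limits access to a single IAM role and turns on encryption at rest, node-to-node encryption, and HTTPS enforcement. The variable `ingest_role_arn`, the domain name `my-secure-domain`, and the account ID are placeholders chosen for illustration, not values from a real setup.

```hcl
variable "ingest_role_arn" {
  description = "Hypothetical IAM role assumed by the ingestion host"
  type        = string
}

# Allow only the ingestion role to send HTTP writes to the domain.
data "aws_iam_policy_document" "ingest_only" {
  statement {
    effect    = "Allow"
    actions   = ["es:ESHttpPost", "es:ESHttpPut"]
    resources = ["arn:aws:es:us-west-2:123456789012:domain/my-secure-domain/*"]

    principals {
      type        = "AWS"
      identifiers = [var.ingest_role_arn]
    }
  }
}

resource "aws_opensearch_domain" "secure" {
  domain_name     = "my-secure-domain"
  engine_version  = "OpenSearch_2.5"
  access_policies = data.aws_iam_policy_document.ingest_only.json

  cluster_config {
    instance_type  = "t3.medium.search"
    instance_count = 3
  }

  ebs_options {
    ebs_enabled = true
    volume_size = 10
  }

  # Encrypt data at rest and between nodes.
  encrypt_at_rest {
    enabled = true
  }

  node_to_node_encryption {
    enabled = true
  }

  # Reject plain HTTP and require TLS 1.2 or newer.
  domain_endpoint_options {
    enforce_https       = true
    tls_security_policy = "Policy-Min-TLS-1-2-2019-07"
  }
}
```

With an IAM-based policy like this, clients have to sign their requests, so the ingestion side would need to be configured for that as well.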

Frequently Asked Questions

Q1: How do I handle sensitive information like passwords in my Terraform configuration?

Avoid hardcoding sensitive information directly in your Terraform configuration. Use environment variables or dedicated secrets management solutions like AWS Secrets Manager or HashiCorp Vault to store and securely access sensitive data.
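A minimal sketch of both options follows. The variable name `opensearch_master_password` and the secret name `opensearch/admin-password` are assumptions made for this example; the secret would have to exist in AWS Secrets Manager before Terraform runs.

```hcl
# Option 1: a sensitive input variable. Supply the value from the shell via
# the TF_VAR_opensearch_master_password environment variable rather than
# committing it to a .tfvars file.
variable "opensearch_master_password" {
  type      = string
  sensitive = true
}

# Option 2: read the value from AWS Secrets Manager at plan time.
data "aws_secretsmanager_secret_version" "opensearch_admin" {
  secret_id = "opensearch/admin-password" # hypothetical secret name
}

locals {
  # secret_string holds the stored value and is treated as sensitive.
  admin_password = data.aws_secretsmanager_secret_version.opensearch_admin.secret_string
}
```

Either way, the value still ends up in the Terraform state, so the state backend should be encrypted and access to it restricted.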

Q2: What are the benefits of using Terraform for OpenSearch Ingestion?

Terraform provides several benefits, including improved infrastructure-as-code practices, automation of deployments, version control of infrastructure configurations, and enhanced collaboration among team members.

Q3: Can I use Terraform to manage multiple OpenSearch clusters and ingestion pipelines?

Yes, Terraform’s modular design allows you to define and manage multiple clusters and pipelines with ease. You can create modules to reuse configurations and improve maintainability.
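One way this might be laid out is a single module instantiated once per environment with for_each. The module path `./modules/opensearch-ingest` and its inputs `domain_name` and `instance_count` are hypothetical; they stand in for whatever interface your own module exposes.

```hcl
locals {
  # One entry per environment; the sizes are illustrative only.
  environments = {
    dev  = { instance_count = 1 }
    prod = { instance_count = 3 }
  }
}

module "opensearch_ingest" {
  source   = "./modules/opensearch-ingest" # hypothetical local module
  for_each = local.environments

  # each.key is the environment name, each.value its settings.
  domain_name    = "logs-${each.key}"
  instance_count = each.value.instance_count
}
```

Adding another environment then means adding one entry to the map rather than copying the resource definitions.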

Q4: How do I troubleshoot issues with my OpenSearch Ingestion Terraform configuration?

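Carefully review the Terraform output for errors and warnings. Running terraform validate and terraform plan catches many configuration problems before anything is applied, and setting the TF_LOG environment variable (for example, TF_LOG=DEBUG) produces detailed logs from Terraform and the provider. For problems on the ingestion side, examine the logs from Logstash and OpenSearch.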

Conclusion

Automating OpenSearch ingestion with Terraform offers a significant improvement in efficiency and reliability compared to manual configurations. By leveraging infrastructure-as-code principles, you gain better control, reproducibility, and scalability for your data ingestion pipeline. Mastering OpenSearch Ingestion Terraform is a crucial step towards building a robust and scalable data infrastructure. Remember to prioritize security and utilize best practices throughout the process. Always consult the official documentation for the latest updates and features.